In general, what is a secular person, an atheist even, supposed to tell a religious person, or anyone really, in lieu of ‘I’ll pray for you’?

Author Archives: Aardvark

Is Academic Chauvinism As Dangerous As Climate Disruption Denial?

The following appeared in my hometown paper today.

I wondered what all of you might have to say about it.

I typed it all out, but please remember that the title was created by the editor, not the letter writer.

Not all ‘science’ is equal

EDITOR: The word science has been bastardized. Its common usage does not distinguish between the hard, soft and historical sciences.

Among the hard sciences, namely physics, chemistry and some aspects of biology, e.g., microbiology, genetics, etc., one relies on experiments that generate mathematical theories that make definite predictions that can be experimentally verified, and thus to theories that can definitely be falsified. The hard sciences deal with only the physical aspect of nature, where purely physical devices can be used to collect relevant data.

Among the soft sciences — social sciences, psychology, etc., — studies are rarely based on mathematical descriptions, and so definite predictions are elusive and pregnant with complicated assumptions. Here one is dealing with humans, and as such with essentially the nonphysical, e.g., issues of the human mind, and the supernatural aspects of nature, owing to humans being spiritual beings.

Finally, among the historical sciences, such as evolutionary theory, climate change, etc., studies are more akin to forensic science, where extant data is used to extrapolate and make tentative predictions. There is no single, well-defined theory that makes predictions that can be experimentally tested, and thus falsify the theory. Here one is certainly dealing with the whole of reality — the physical/nonphysical/supernatural aspect of nature.

It was written by a physics PhD, and my personal take is that it represents professional chauvinism.

As a side note, the author is also a creationist, though I don’t know whether an Old or Young Earth creationist.

ID proponents: is Chance a Cause?

- If yes, in what sense?

- If no, do you think that evolutionists claim that it is?

- If yes, why do you think this?

- If no, what the heck are we arguing about?

Chance

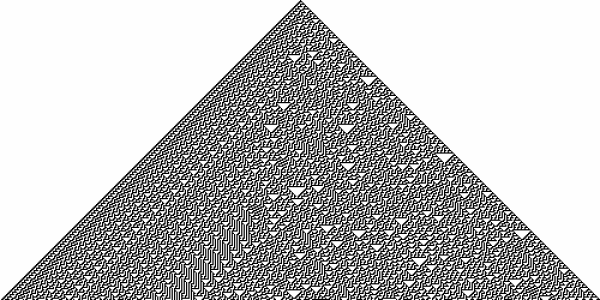

Is the trajectory here “due to chance”?

Proof: Why naturalist science can be no threat to faith in God

I’m going to demonstrate this using Bayes’ Rule. I will represent the hypothesis that (a non-Deist, i.e. an interventionist) God exists as $H$, and the evidence of complex life as $E$. What we want to know is the posterior probability that $H$ is true, given $E$, written

$$P(H \mid E)$$

which, in English, is: the probability that God exists, given the evidence before us of complex life.
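For reference, the standard form of Bayes’ Rule can be sketched as follows, writing $H$ for the hypothesis that God exists and $E$ for the evidence of complex life. These symbol names are assumed here for illustration, and this is the textbook statement of the rule rather than the specific argument the post goes on to make:

$$P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}, \qquad P(E) = P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)$$

Here $P(H)$ is the prior probability of the hypothesis, $P(E \mid H)$ is the probability of the evidence if the hypothesis is true, and $P(E \mid \neg H)$ is the probability of the evidence if it is false.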

Moral Behavior Without Principled Intent

Addendum: The original title of this post was “An Evolutionary Antecedent of Morality?”. In the comments Petrushka pointed out the difficulties of this phrase and I have given it a better title.

In the comments of an old post I linked to the story of a bonobo named Kanzi, who is the research subject of a project called The Great Ape Trust. Since then I have been mentally groping for what was an amorphous concept that I needed to concretize in order to turn that comment into an OP. Petrushka has helpfully formalized that concept with his very own neologism, enabling me to write this.

Society, Morality, and Rape

Brent, at Uncommon Descent, asked:

Is rape morally wrong because society says so?

Or:

Does society say rape is wrong because morality says so?

Theistic morality is subjective

Claiming that morality is what an objectively real deity objectively commands is all very well, but without a way of knowing which deity is objectively real, it gets us no further forrarder.

Could a theist who claims that theistic morality is objective explain how we can objectively discern which theistic morality is the objective one?

Englishman in Istanbul proposes a thought experiment

… I wonder if I could interest you in a little thought experiment, in the form of four simple questions:

1. Is it possible that we could discover an artifact on Mars that would prove the existence of extraterrestrials, without the presence or remains of the extraterrestrials themselves?

2. If yes, exactly what kind of artifact would suffice? Car? House? Writing? Complex device? Take your pick.

3. Explain rationally why the existence of this artifact would convince you of the existence of extraterrestrials.

4. Would that explanation be scientifically sound?

I would assert the following:

a. If you answer “Yes” to Question 4, then to deny ID is valid scientific methodology is nothing short of doublethink. You are saying that a rule that holds on Mars does not hold on Earth. How can that be right?

b. If you can answer Question 3 while answering “No” to Question 4, then you are admitting that methodological naturalism/materialism is not always a reliable source of truth.

c. If you support the idea that methodological naturalism/materialism is equivalent to rational thought, then you are obligated to answer “No” to Question 1.

Well, I can never resist a thought experiment, and this one seems quite enlightening….

Eppur si muove

Cornelius Hunter has a particularly odd post up, called: More Warfare Thesis Lies, This Time From CNN. He takes issue with a report by Florence Davey-Attlee, on Vatican seeks to rebrand its relationship with science. His complaint is that it promotes what he calls “the false Warfare Thesis, which pits religion against science” and “is too powerful and alluring to allow the truth to get in the way”. He writes:

The key to a good lie is to leverage the truth as much as possible. In this instance, we have two truths juxtaposed to make a lie. You see Bruno did argue for an infinite universe, and he was burned at the stake. But those are two distinct and separate facts. The implication is that the Church burned Bruno at the stake because of his scientific investigations about the universe—a perfect example of the Warfare Thesis.

Response to Kairosfocus

[23rd May, 2013: As Kairosfocus continues to reiterate his objections to the views I express in this post, I am taking the opportunity today to clarify my own position:

- I do not think that OM was calling KF a Nazi, merely drawing attention to the commonality between KF’s apparent view of homosexuality as immoral and unnatural and that of the Nazis, who also regarded homosexuality as immoral and unnatural. However, I accept that one huge difference is that KF appears to consider that homosexuality is non-genetic and can be cured, whereas the Nazis considered that it was genetic and should be eradicated.

- I agree with KF that inflammatory comparisons of those one disagrees with to Nazis are unhelpful and divisive. I will not censor such comparisons, but I will register my objections to them. This includes OM’s comparison (although I find KF’s views on homosexuality morally abhorrent and factually incorrect, his views are profoundly different from those of the Nazis), and it also includes KF’s frequent comparisons of those of us who hold that a Darwinist account of evolution is scientifically justified to those “good Germans” who turned a blind eye to Nazism.

- When referring to CSI as “bogus” I mean it is fallacious and misleading. I do not mean that those who think it is calculable and meaningful are being deliberately fraudulent. I interpret AF to mean the same thing by the term. However, even if he does not, I defend his right to say so on this blog, just as I will defend KF’s right to defend CSI (or even his views on homosexuality) on this blog.]

I will move this post to the sandbox shortly, but as I am banned from UD, and therefore cannot respond to this in the place where it was issued, I am doing so here. Kairosfocus writes:

Asymmetry

When I started this site, I had been struck by the remarkable symmetry between the objections raised by ID proponents to evolution, and the objections raised by ID opponents to ID – both “sides” seemed to think that the other side was motivated by fear of breaking ranks; fear of institutional expulsion; fear of facing up to the consequences of finding themselves mistaken; not understanding the other’s position adequately; blinkered by what they want, ideologically, to be true, etc. Insulting characterisations are hurled freely in both directions. Those symmetries remain, as does the purpose of this site, which is to try to drill past those symmetrical prejudices to reach the mother-lode of genuine difference.

But two asymmetries now stand out to me:

Andre’s questions

Andre poses some interesting questions to Nick Matzke. I thought I’d start a thread that might help him find some answers. I’ll have first go:

Hi Nick

Yes please can we get a textbook on Macro-evolution’s facts!

I’ll make it easy for you;

1.) I want to see a step by step process of the evolution of the lung system.

Google Scholar: evolution of the lung sarcopterygian

What is ID?

Stephen B, at UD, says:

Since ID does not, at least for now, hypothesize an “intelligent mind,” …

To which I can only respond – WTF?

Seriously, can any ID proponent explain what Stephen means here, and whether they agree with it?

From the UD resources section:

ID Defined

The theory of intelligent design (ID) holds that certain features of the universe and of living things are best explained by an intelligent cause rather than an undirected process such as natural selection. ID is thus a scientific disagreement with the core claim of evolutionary theory that the apparent design of living systems is an illusion.

In a broader sense, Intelligent Design is simply the science of design detection — how to recognize patterns arranged by an intelligent cause for a purpose. Design detection is used in a number of scientific fields, including anthropology, forensic sciences that seek to explain the cause of events such as a death or fire, cryptanalysis and the search for extraterrestrial intelligence (SETI). An inference that certain biological information may be the product of an intelligent cause can be tested or evaluated in the same manner as scientists daily test for design in other sciences.

ID is controversial because of the implications of its evidence, rather than the significant weight of its evidence. ID proponents believe science should be conducted objectively, without regard to the implications of its findings. This is particularly necessary in origins science because of its historical (and thus very subjective) nature, and because it is a science that unavoidably impacts religion.

Positive evidence of design in living systems consists of the semantic, meaningful or functional nature of biological information, the lack of any known law that can explain the sequence of symbols that carry the “messages,” and statistical and experimental evidence that tends to rule out chance as a plausible explanation. Other evidence challenges the adequacy of natural or material causes to explain both the origin and diversity of life.

What possible intelligent causative agency would not have a mind? Does “mindless intelligent cause” mean anything coherent? Apart from, possibly, evolutionary processes? (which could be said, conceivably, to be intelligent but mindless…)

Reductionism Redux

As I’ve mentioned, I’m a great fan of Denis Noble, and recommend his book, the Music of Life, but you can get the content pretty well in total in this video of a lecture:

Principle of Systems Biology illustrated using the Virtual Heart

and there’s other material on his site.

So I was interested to see Ann Gauger making a very similar set of points in this piece: Life, Purpose, Mind: Where the Machine Metaphor Fails.

Wolfram’s “A New Kind of Science”

I’ve just started reading it, and was going to post a thread, when I noticed that Phinehas also brought it up at UD, so as a kind of encouragement for him to stick around here for a bit, I thought I’d start one now :)

I’ve only read the first chapter so far (I bought the hardback, but you can read it online here), and I’m finding it fascinating. I’m not sure how “new” it is, but it certainly extends what I thought I knew about fractals and non-linear systems and cellular automata to uncharted regions. I was particularly interested to find that some aperiodic patterns are reversible, and some not – in other words, for some patterns, a unique generating rule can be inferred, but for others not. At least I think that’s the implication.

Has anyone else read it?

The Chewbacca Defense?

Eric Anderson, at UD, writes, to great acclaim:

Well said. You have put your finger on the key issue.

And the evidence clearly shows that there are not self-organizing processes in nature that can account for life.

This is particularly evident when we look at an information-rich medium like DNA. As to self-organization of something like DNA, it is critical to keep in mind that the ability of a medium to store information is inversely proportional to the self-ordering tendency of the medium. By definition, therefore, you simply cannot have a self-ordering molecule like DNA that also stores large amounts of information.

The only game left, as you say, is design.

Unless, of course, we want to appeal to blind chance . . .

Can anyone make sense of this? EA describes DNA as “an information rich molecule”. Then as a “self-ordering molecule”. Is he saying that DNA is self-ordering and therefore can’t store information? Or that it does store information, and therefore can’t be self-ordering? Or that because it is both it must be designed? And in any case, is the premise even true? And what “definition” is he talking about? Who says that “the ability of a medium to store information is inversely proportional to the self-ordering tendency of the medium”? By what definition of “information” and “self-ordering” might this be true? And is it supposed to be an empirical observation or a mathematical proof?

Is Scepticism a Worldview?

During the recent debate with Gpuccio, at one point he claimed that it was my prior adoption of a particular ideology or worldview that led me to exclude design as an explanation, thus reducing our disagreement to a choice of worldviews. I am not sure I know what a worldview is – but scepticism falls far short of being an ideology. All it amounts to is the demand for strong evidence before believing anything. This is just an approach to evidence and is compatible with all sorts of beliefs about the nature of reality.

Take the particular issue of whether life is designed. Scepticism does not exclude design. It just asks that a design explanation be evaluated by the same standards as any other explanation. It is not sufficient that other explanations are considered to be inadequate. If you happen to believe in a designer with the appropriate powers and motivation then you may well accept that as the best explanation for life. If you happen to believe in a designer with evil motivations and sufficient power then that is a perfect explanation for natural disasters. But these are beliefs which need to be separately evaluated with their own evidence. You cannot use the fact that a designer is a good explanation for life as evidence for that designer.

What has Gpuccio’s challenge shown?

(Sorry this is so long – I am in a hurry)

Gpuccio challenged me and others to come up with examples of dFSCI which were not designed. Not surprisingly, the result was that I thought I had produced examples and he thought I hadn’t. At the risk of seeming obsessed with dFSCI, I want to assess what I (and hopefully others) learned from this exercise.

Lesson 1) dFSCI is not precisely defined.

This is for several reasons. Gpuccio defines dFSCI as:

Gpuccio’s challenge

29th Oct: I have offered a response to Gpuccio’s challenge below.

I think this is worth a new post.

Gpuccio has issued a challenge here and here. I have repeated the essential text below. Others may wish to try it and/or seek their own clarifications. Could be interesting. Something tells me that it is not going to end up in a clear cut result. But it may clarify the deeply confusing world of dFSCI.

Challenge:

Give me any number of strings of which you know for certain the origin. I will assess dFSCI in my way. If I give you a false positive, I lose. I will accept strings of a predetermined length (we can decide), so that at least the search space is fixed.

Conditions:

a) I would say binary strings of 500bits. Or language strings of 150 characters. Or decimal strings of 150 digits. Something like that. Even a mix of them would be fine.

No problem with that.

b) I will literally apply my procedure. If I cannot easily see any function for the string, I will not go on in the evaluation, and I will not infer design. If you, or any other, wants to submit strings whose function you know, you are free to tell me what the function is, and I will evaluate it thoroughly.

That’s OK. I will supply the function in each case. I note that when I tried to define function precisely you said that the function can be anything the observer wishes provided it is objectively defined, so, for example, “adds up to 1000” would be a function. So I don’t think that’s going to be an issue!

c) I will be cautious, and I will not infer design if I have doubts about any of the points in the procedure.

I am not happy with this. If the string meets the criteria for dFSCI you should be able to infer design. You can’t pick and choose when to apply it. At the very least you must prove that the string does not have dFSCI if you are going to avoid inferring design.

d) Ah, and please don’t submit strings outputted by an algorithm, unless you are ready to consider them as designed if the algorithm is more than 150 bits long. We should anyway agree, before we start, on which type of system and what time span we are testing.

I don’t understand this – a necessity system for generating digital strings can always be expressed as an algorithm, e.g. the Fibonacci series. Otherwise it is just a copy of the string. Also it is not clear how to define how many bits long an algorithm is. Maybe it will suffice if I confine myself to algorithms that can be expressed mathematically in fewer than 20 symbols?

And anyway, I am afraid we have to wait next week for the test. My time is almost finished.

That’s OK. I need time to think anyway. But also I need to get your clarification on b, c and d.
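To make the numbers in the conditions concrete, here is a minimal Python sketch. It computes the size of the search space, in bits, for each of the three string formats in condition (a), and then builds a 150-digit decimal string from a very short rule of the kind discussed under condition (d). The 27-symbol alphabet for “language strings” (26 letters plus a space), the choice to concatenate Fibonacci numbers, and all the function names are assumptions made for illustration; nothing here is Gpuccio’s actual procedure for assessing dFSCI.

```python
import math

# Assumed alphabet sizes. The 27-symbol "language" alphabet (26 letters plus
# a space) is an illustrative guess, not something stated in the challenge.
FORMATS = {
    "binary, 500 bits": (2, 500),
    "language, 150 characters": (27, 150),
    "decimal, 150 digits": (10, 150),
}


def search_space_bits(alphabet_size, length):
    """Return log2 of the number of distinct strings of the given length."""
    return length * math.log2(alphabet_size)


def fibonacci_digit_string(n_digits):
    """Concatenate Fibonacci numbers (1, 1, 2, 3, 5, ...) and keep the
    first n_digits decimal digits of the result."""
    pieces = []
    total = 0
    a, b = 1, 1
    while total < n_digits:
        piece = str(a)
        pieces.append(piece)
        total += len(piece)
        a, b = b, a + b
    return "".join(pieces)[:n_digits]


def digit_sum(digits):
    """A toy 'function' in the sense of condition (b): the sum of the digits."""
    return sum(int(ch) for ch in digits)


if __name__ == "__main__":
    for name, (alphabet_size, length) in FORMATS.items():
        bits = search_space_bits(alphabet_size, length)
        print(f"{name}: about {bits:.1f} bits")

    s = fibonacci_digit_string(150)
    print(s)
    print("length:", len(s), "digit sum:", digit_sum(s))
```

Under these assumptions the binary and decimal formats have search spaces of roughly the same size, while the language format is somewhat larger. The Fibonacci string shows the difficulty raised above: a 150-digit string can come from a rule that takes only a few symbols to state, so a length threshold on the string says little about the length of the algorithm that produced it.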