Subjects: Evolutionary computation. Information technology–Mathematics.

In “Evo-Info 4: Non-Conservation of Algorithmic Specified Complexity,” I neglected to explain that algorithmic mutual information is essentially a special case of algorithmic specified complexity. This leads immediately to two important points:

- Marks et al. claim that algorithmic specified complexity is a measure of meaning. If this is so, then algorithmic mutual information is also a measure of meaning. Yet no one working in the field of information theory has ever regarded it as such. Thus Marks et al. bear the burden of explaining how they have gotten the interpretation of algorithmic mutual information right, and how everyone else has gotten it wrong.

- It should not come as a shock that the “law of information conservation (nongrowth)” for algorithmic mutual information, a special case of algorithmic specified complexity, does not hold for algorithmic specified complexity in general.

My formal demonstration of unbounded growth of algorithmic specified complexity (ASC) in data processing also serves to counter the notion that ASC is a measure of meaning. I did not explain this in Evo-Info 4, and will do so here, suppressing as much mathematical detail as I can. You need to know that a binary string is a finite sequence of 0s and 1s, and that the empty (length-zero) string is denoted $\lambda.$ The particular data processing that I considered was erasure: on input of any binary string $x,$ the output $\mathrm{erase}(x)$ is the empty string. I chose erasure because it rather obviously does not make data more meaningful. However, part of the definition of ASC is an assignment of probabilities to all binary strings. The ASC of a binary string is infinite if and only if its probability is zero. If the empty string is assigned probability zero, and all other binary strings are assigned probabilities greater than zero, then the erasure of a nonempty binary string results in an infinite increase in ASC. In simplified notation, the growth in ASC is

$$A(\mathrm{erase}(x)) - A(x) = \infty$$

for all nonempty binary strings $x.$ Thus Marks et al. are telling us that erasure of data can produce an infinite increase in meaning.
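The erasure argument can be illustrated with a small Python sketch. Several things here are assumptions for illustration only, not taken from Evo-Info 4: the toy distribution (probability zero for the empty string, $4^{-n}$ for each string of length $n \geq 1$), the name `erase`, and the zlib-based `k_proxy`, which merely stands in for the uncomputable conditional complexity. Any finite stand-in gives the same outcome, because the infinity comes entirely from the zero probability of the empty string.

```python
import math
import zlib

EMPTY = ""  # the empty (length-zero) binary string


def erase(x: str) -> str:
    """Erasure: every input string is mapped to the empty string."""
    return EMPTY


def surprisal(x: str) -> float:
    """Return -log2 p(x) for a toy distribution with p(empty) = 0.

    Assumed distribution (hypothetical, for illustration): each nonempty
    string of length n has probability 4**-n, which sums to 1 over all
    nonempty binary strings. Working with the logarithm directly avoids
    floating-point underflow for long strings.
    """
    return math.inf if x == EMPTY else 2.0 * len(x)


def k_proxy(x: str, c: str) -> float:
    """Finite stand-in, in bits, for the conditional complexity of x given c.

    The true quantity is uncomputable; this zlib estimate is a placeholder
    and makes no claim to approximate it well.
    """
    with_x = len(zlib.compress((c + x).encode()))
    without_x = len(zlib.compress(c.encode()))
    return 8.0 * max(0, with_x - without_x)


def asc(x: str, c: str) -> float:
    """ASC-style score: surprisal minus the (proxy) conditional complexity."""
    return surprisal(x) - k_proxy(x, c)


def asc_gain_from_erasure(x: str, c: str) -> float:
    """Change in the ASC-style score when x is replaced by erase(x)."""
    return asc(erase(x), c) - asc(x, c)


if __name__ == "__main__":
    context = "0110"
    for x in ["0", "1011", "0" * 32]:
        print(repr(x), asc(x, context), asc_gain_from_erasure(x, context))
```

For every nonempty input the score before erasure is finite and the score after erasure is infinite, so the reported gain is infinite regardless of the context string.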

In Evo-Info 4, I observed that Marks et al. had as “their one and only theorem for algorithmic specified complexity, ‘The probability of obtaining an object exhibiting $\alpha$ bits of ASC is less then [sic] or equal to $2^{-\alpha}.$’” and showed that it was a special case of a more-general result published in 1995. George Montañez has since dubbed this inequality “conservation of information.” I implore you to understand that it has nothing to do with the “law of information conservation (nongrowth)” that I have addressed.

Now I turn to a technical point. In Evo-Info 4, I derived some theorems, but did not lay them out in “Theorem … Proof …” format. I was trying to make the presentation of formal results somewhat less forbidding. That evidently was a mistake on my part. Some readers have not understood that I gave a rigorous proof that ASC is not conserved in the sense that algorithmic mutual information is conserved. What I had to show was the negation of the following proposition:

(False) $A(f(x); c, p) \leq A(x; c, p) + K(f) + O(1)$

for all binary strings $x$ and $c,$ for all probability distributions $p$ over the binary strings, and for all computable [ETA 12/12/2019: total] functions $f$ on the binary strings. The variables in the proposition are universally quantified, so it takes only a counterexample to prove the negated proposition. I derived a result stronger than required:

Theorem 1. There exist computable function $f$ and probability distribution $p$ over the binary strings such that

$$A(f(x); c, p) - A(x; c, p) = \infty$$

for all binary strings $c$ and for all nonempty binary strings $x.$

Proof. The proof idea is given above. See Evo-Info 4 for a rigorous argument.

In the appendix, I formalize and justify the claim that algorithmic mutual information is essentially a special case of algorithmic specified complexity. In this special case, the gain in algorithmic specified complexity resulting from a computable transformation $f(x)$ of the data $x$ is at most $K(f) + O(1),$ i.e., the length of the shortest program implementing the transformation, plus an additive constant.

Appendix

Some of the following is copied from Evo-Info 4. The definitions of algorithmic specified complexity (ASC) and algorithmic mutual information (AMI) are:

$$\begin{aligned} A(x; c, p) &= -\log p(x) - K(x \mid c) \\ I(x : y) &= K(x) - K(x \mid y^*), \end{aligned}$$

where $p$ is a distribution of probability over the set $\mathcal{X}$ of binary strings, $x,$ $c,$ and $y$ are binary strings, and binary string $y^*$ is a shortest program, for the universal prefix machine in terms of which the algorithmic complexity $K(x)$ and the conditional algorithmic complexity $K(x \mid c)$ are defined, outputting $y.$ The base of logarithms is 2. The “law of conservation (nongrowth)” of algorithmic mutual information is:

(1) $I(f(x) : y) \leq I(x : y) + K(f) + O(1)$

for all binary strings $x$ and $y,$ and for all computable [ETA 12/12/2019: total] functions $f$ on the binary strings. As shown in Evo-Info 4,

$$I(x : y) = A(x; y^*, M),$$

where the universal semimeasure $M$ on $\mathcal{X}$ is defined $M(x) = 2^{-K(x)}$ for all binary strings $x.$ Here we make use of the normalized universal semimeasure

![]()

where ![]() Now the algorithmic mutual information of binary strings $x$ and $c$ differs by an additive constant, ![]() from the algorithmic specified complexity of $x$ in the context of $c^*$ with probability distribution $M^*.$
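The identity attributed to Evo-Info 4, relating AMI to ASC under the unnormalized universal semimeasure, can be checked in a few lines. This is only a sketch. It assumes the definitions $A(x; c, p) = -\log p(x) - K(x \mid c)$ and $I(x : y) = K(x) - K(x \mid y^*),$ together with $M(x) = 2^{-K(x)};$ the symbol names are reconstructed here and may differ from those used in Evo-Info 4.

```latex
\begin{align*}
A(x; y^*, M)
  &= -\log M(x) - K(x \mid y^*) \\
  &= -\log 2^{-K(x)} - K(x \mid y^*) \\
  &= K(x) - K(x \mid y^*) \\
  &= I(x : y).
\end{align*}
```

The only step that uses the choice of probability assignment is the second, which is where the base-2 logarithm cancels the exponent in the universal semimeasure.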

Theorem 2. Let $x$ and $c$ be binary strings. It holds that

$$A(x; c^*, M^*) = I(x : c) - \log M^*(\mathcal{X}).$$

Proof. By the foregoing definitions,

Theorem 3. Let $x$ and $c$ be binary strings, and let $f$ be a computable [and total] function on $\mathcal{X}.$ Then

$$A(f(x); c^*, M^*) - A(x; c^*, M^*) \leq K(f) + O(1).$$

Proof. By Theorem 2,

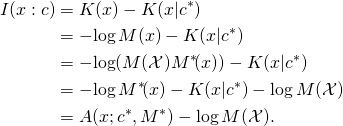

$$\begin{aligned} &A(f(x); c^*, M^*) - A(x; c^*, M^*) \\ &\quad = [I(f(x) : c) - \log M^*(\mathcal{X})] - [I(x : c) - \log M^*(\mathcal{X})] \\ &\quad = I(f(x) : c) - I(x : c) \\ &\quad \leq K(f) + O(1). \end{aligned}$$

The inequality in the last step follows from (1).

The Series

Evo-Info review: Do not buy the book until…

Evo-Info 1: Engineering analysis construed as metaphysics

Evo-Info 2: Teaser for algorithmic specified complexity

Evo-Info sidebar: Conservation of performance in search

Evo-Info 3: Evolution is not search

Evo-Info 4: Non-conservation of algorithmic specified complexity

Evo-Info 4 addendum

That’s a difficult question. But, as Joe said above, what George is doing looks a lot like Neyman-Pearson hypothesis testing, and thus seems not to be new. However, George does not see what he’s doing as comparison of two competing hypotheses. He sees what he’s doing as testing a single hypothesis. I’ll say that if he’s right about that, then he ought to be able to get the hypothesis-testing logic, minus the ID baggage, through peer review at a journal with mathematical statistics as one of its main topics. Bio-Complexity is definitely not the place to get a strong check of his claims.

As for responding to a small audience, I’ve spent the day posting comments on the other thread, knowing full well that you might be the only person to read them (closely, anyway). Take that to mean that I think well of you.

He has repeatedly equated naturalism with a claim that the universe is Turing-computable. I first saw this in his Alternative Methods to Naturalism (AmNat) talk, “Imagination Sampling.” (I put a lot of work into a post titled “Captain Imagination,” but never finished it. Perhaps there is something salvageable.) There is at least a latent claim, in stuff he’s posted, that nature is a computer running a program, and that the program has to come from somewhere. I’m not going to pore over his old posts, to find support for my impression. Consider also that he says that intelligence literally is a Turing oracle.

I interpret this as saying that Eric believes the laws of physics must be Turing computable. I see three issues in that:

– what if anything limits laws formed by human intellect

– what is the metaphysics of laws of nature, e.g., necessity versus simple regularities

– how does the universe implement laws; I think computing is different from embodying (e.g., stomachs don’t compute digestion)

These issues are philosophical. Eric and I have exchanged ideas on the philosophy of science and I believe it is not something he has studied. So unless Eric wants to get involved, I guess it’s probably not useful to speculate further on his views.