I’ve just started reading it, and was going to post a thread when I noticed that Phinehas had also brought it up at UD, so as a kind of encouragement for him to stick around here for a bit, I thought I’d start one now :)

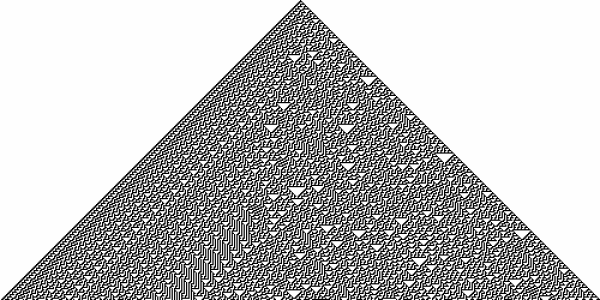

I’ve only read the first chapter so far (I bought the hardback, but you can read it online here), and I’m finding it fascinating. I’m not sure how “new” it is, but it certainly extends what I thought I knew about fractals and non-linear systems and cellular automata to uncharted regions. I was particularly interested to find that some aperiodic patterns are reversible, and some not – in other words, for some patterns, a unique generating rule can be inferred, but for others not. At least I think that’s the implication.
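For anyone who wants to poke at this, here is a minimal sketch of one common meaning of “reversible” for cellular automata: every state has exactly one predecessor, so the past can be recovered from the present. That may not be exactly the sense the chapter intends. The function names `step` and `is_reversible`, the ring (wrap-around) boundary, and the choice of rules 15 and 30 are my own assumptions for illustration, not taken from the book.

```python
from itertools import product


def step(cells, rule):
    """Apply one step of an elementary (two-state, nearest-neighbour)
    cellular automaton to a tuple of 0/1 cells arranged in a ring."""
    n = len(cells)
    out = []
    for i in range(n):
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        # The three neighbours form a 3-bit index into the rule number.
        index = (left << 2) | (center << 1) | right
        out.append((rule >> index) & 1)
    return tuple(out)


def is_reversible(rule, width):
    """Brute-force check: True if no two distinct ring states of this
    width share the same successor under the given rule."""
    successors = {step(state, rule) for state in product((0, 1), repeat=width)}
    return len(successors) == 2 ** width


if __name__ == "__main__":
    for rule in (15, 30):
        print(rule, is_reversible(rule, width=5))
```

On a ring, rule 30 sends both the all-zeros and the all-ones state to all zeros, so the earlier state cannot be recovered from the later one. Rule 15 just copies the inverse of each cell’s left neighbour, so every state has a unique predecessor.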

Has anyone else read it?

The pictures are pretty, and to someone new to the field, the ideas are thought-provoking. But I think that in order to have a good perspective on the importance of these ideas and their place in the existing landscape of mathematical thought, one should have a better mathematical background. I can be overwhelmed by textbook mathematics on a graduate level, but that says more about me than about the math.

Oh good. I usually get dropped on with a ton of bricks when I say that.

Patrick,

As I understand it, plenty of intelligent systems have already been implemented in code. Perhaps you mean a self-aware system? That’s something I don’t see happening in my lifetime, and I agree with Lizzie that it would take more than sheer processing power; it would require quite a few sensory-type inputs and a great deal of evolution.

The question I would have is, IF we could build such a system, would it necessarily have the same strengths and weaknesses as ordinary organic brains – good at pattern matching, lousy at number crunching, for example? I would prefer to see complementary entities, not imitation entities.

I would suggest an intermediate step between “merely” intelligent and self-aware.

I think the breakthrough system, possibly achievable in the next few decades, would be an evolvable system. One that can add to its capabilities.

At the moment most economies depend on networks of arbitrage programs. The more factors they can become aware of, the more money they can make for their owners. Being able to see trends and predict change would be a great competitive advantage.

This, of course, has already been done. But no system that predicts the future is perfect, particularly if it is in direct competition with other predictive systems. It’s a bit of a red queen problem.

I think it’s a no-brainer to predict that systems that make money and are in competition with each other will evolve. A prize is waiting for the system that can self-evolve. To me this does not require hard AI or self-awareness.

But it could lead to it.

That’s roughly what I mean by “sentient”, yes.

Interestingly (to me at least), I build enterprise-scale software systems that monitor themselves at various levels and are able to self-heal to a certain extent. For example, if a machine or a rack goes down, new nodes are automatically deployed and system functionality is maintained. I don’t think these systems will evolve into an AI, but they have some minimal self-awareness, for some definitions of the term.
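As a toy illustration of the self-healing idea (not the actual system described above), a monitor can compare the set of healthy nodes against a target size and deploy replacements for whatever has gone down. Everything here, including the `Node` and `Cluster` classes and the `reconcile` method, is a hypothetical sketch; a real deployment would sit on top of an orchestration layer and real health checks.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Node:
    node_id: int
    healthy: bool = True


@dataclass
class Cluster:
    target_size: int
    nodes: list = field(default_factory=list)
    _ids: count = field(default_factory=count)  # hypothetical ID source

    def reconcile(self):
        """Drop unhealthy nodes and deploy replacements until the cluster
        is back at target_size. Returns how many nodes were deployed."""
        self.nodes = [n for n in self.nodes if n.healthy]
        deployed = 0
        while len(self.nodes) < self.target_size:
            self.nodes.append(Node(next(self._ids)))
            deployed += 1
        return deployed


if __name__ == "__main__":
    cluster = Cluster(target_size=3)
    cluster.reconcile()               # initial deployment
    cluster.nodes[0].healthy = False  # simulate a machine going down
    print(cluster.reconcile(), [n.node_id for n in cluster.nodes])
```

The “self-awareness” here is nothing more than the system comparing its observed state with its desired state, which fits the “for some definitions of the term” caveat.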

I agree with Lizzie on that point as well.

I’ll go even further. I hope they’re so alien as to be positively weird.

Interestingly I did much the same thing. And no, it had nothing to do with sentience, it had to do with one system monitoring the performance of another, combined with common-interface limited-capacity nodes designed to be interchangeable, and the use of hot-swapping software and hardware protocols.

I doubt it’s a matter of hope. I doubt it would ever be possible to evolve human-style self-deception into such software. If it did evolve in, the result wouldn’t be complementary enough for me.