I’ve just started reading it, and was going to post a thread, when I noticed that Phinehas also brought it up at UD, so as a kind of encouragement for him to stick around here for a bit, I thought I’d start one now :)

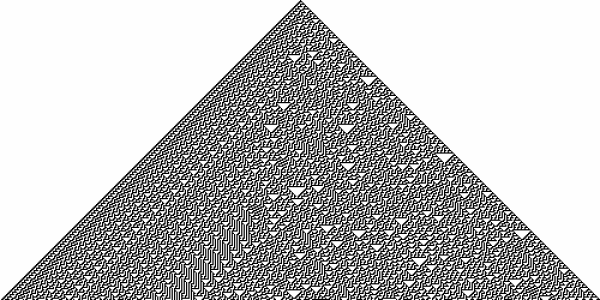

I’ve only read the first chapter so far (I bought the hardback, but you can read it online here), and I’m finding it fascinating. I’m not sure how “new” it is, but it certainly extends what I thought I knew about fractals, non-linear systems and cellular automata into uncharted regions. I was particularly interested to find that some aperiodic patterns are reversible, and some not; in other words, for some patterns a unique generating rule can be inferred, but for others not. At least I think that’s the implication.
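For anyone who hasn't met the book's basic objects: an elementary cellular automaton is a row of black/white cells, each updated from itself and its two neighbours by one of 256 rules (Wolfram numbers them 0 to 255). Below is a minimal Python sketch, assuming wrap-around edges and a single live starting cell; the function names are hypothetical. It also shows one simple reading of "inferring the generating rule": you can read the rule back off a history only if all eight possible neighbourhoods actually occur in it, otherwise the rule is underdetermined. That may or may not be exactly what the book means by reversibility.

```python
# Minimal sketch: an elementary cellular automaton in Wolfram's rule numbering,
# plus a naive attempt to recover the rule from a space-time history.
# All function names here are hypothetical, for illustration only.

def step(cells, rule):
    """Apply an elementary CA rule (0-255) once, with wrap-around edges."""
    n = len(cells)
    out = []
    for i in range(n):
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right  # neighbourhood as a number 0..7
        out.append((rule >> index) & 1)              # that bit of the rule number
    return out

def run(rule, width=31, steps=15):
    """Start from a single live cell and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history

def infer_rule(history):
    """Return the rule number if all 8 neighbourhoods were observed, else None."""
    seen = {}
    for prev, nxt in zip(history, history[1:]):
        n = len(prev)
        for i in range(n):
            index = (prev[i - 1] << 2) | (prev[i] << 1) | prev[(i + 1) % n]
            seen[index] = nxt[i]
    if len(seen) < 8:
        return None  # some neighbourhoods never occurred: rule is underdetermined
    return sum(bit << index for index, bit in seen.items())

for row in run(30):
    print("".join("#" if c else "." for c in row))
print(infer_rule(run(30)), infer_rule(run(0)))
```

Rule 30 visits every neighbourhood within a few steps, so its rule number can be recovered; rule 0 wipes the row out immediately, so most of its rule table is never exercised and many different rules would produce the same picture.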

Has anyone else read it?

I’m more interested in the notion that matter/physics/reality may sit on top of little self-sustaining programs (like the gliders in Conway’s Life). The idea that information may have given rise to matter instead of the other way around is intriguing.
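For concreteness, here is a minimal Python sketch of Conway's Life (standard rules: birth on 3 neighbours, survival on 2 or 3), storing only the coordinates of live cells. The glider below is the usual five-cell pattern; it reappears, shifted one cell diagonally, every four generations, which is the "self-sustaining little program" sense of the word.

```python
# Minimal sketch of Conway's Game of Life on an unbounded grid,
# representing the board as a set of (row, col) live cells.
from collections import Counter

def life_step(live):
    """Return the next generation from a set of (row, col) live cells."""
    # Count live neighbours for every cell adjacent to a live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

# .#.
# ..#
# ###
GLIDER = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

state = GLIDER
for _ in range(4):
    state = life_step(state)

print(sorted(state))  # the same shape, moved one row down and one column right
```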

I’m not sure what that would mean (“information may have given rise to matter”) – perhaps later chapters will tell me?

I find it rather difficult to draw any detailed conclusions other than the obvious one, that a few simple rules can give rise to complex and unpredictable behavior. It reminds me of something, but I forget what that might be.

Heh. I see what you did there.

Sorry to bring up something off-topic, but this thread seemed no worse a place than any other to do it. Over at UD a comment by Eric Anderson struck me. He has a maxim,

“which is applicable to the larger evolutionary claims:

The perception of evolution’s explanatory power is inversely proportional to the specificity of the discussion.”

I think an article at TSZ challenging this would be a good topic. Discussion of molecular evidence for an evolutionary process (e.g., evolution of photosynthesis) is surely very specific in anyone’s book.

What do you guys think?

I do agree with Eric that the *perception* of ToE’s explanatory power may vary.

Designed???

http://d1jqu7g1y74ds1.cloudfront.net/wp-content/uploads/2013/03/PrinceEdwardIslands.A2013085.0645.1km.jpg

Safe for work.

I’ve read parts of Wolfram’s book and didn’t find anything particularly new.

Many of these ideas have been around since at least the time of Poincaré.

I think Wolfram is trying to see if there is a “metapattern” in the emergence of phenomena in condensed matter; emergent phenomena that become the dominant rules governing a given level of complexity. Often the rules underlying complex behaviors are surprisingly simple.

But it is a difficult path to follow. Many of those emergent properties are so highly dependent on temperature and on the surrounding environment in which a system is immersed, and with which it interacts, that it becomes difficult to distinguish what the rules are.

The reviews by physicists of Wolfram’s book have been fairly cool (I’ll see if I can find at least one). Wolfram is a smart guy, but he doesn’t appear to have hit on anything significant.

However, if one hasn’t encountered these ideas before, it is a nice read.

Groan!

Here’s my favorite designoid cloud:

The “Morning Glory” over the Gulf of Carpentaria

Haven’t read the book, although I attended a presentation by the guy at a US uni, where I was in a graduate program at the time.

Frankly, before investing time (and money, but mostly time – the book is enormous), I would have checked out reviews. Opinions from specialists seem rather sparse and more on the negative or indifferent side. As someone put it, what is right in this book is not new, and what is new is not right.

Not much seems to have happened since Wolfram was on his book tour. Either he is way ahead of his time (as he probably thinks – the guy seems to be a bit of a megalomaniac) or this is a dud.

The book is huge. Useful for flattening crumpled things, anyway. Or propping doors open. Although I have Signature in the Cell for that.

I really enjoyed Ray Kurzweil’s essay on the book here

More to digest there than there may be in the book!

Thanks for that link, Lizzie. I enjoyed it too on first reading, and I’ve no doubt I’ll re-read it several times.

Not sure I’m ready for Wolfram’s book, though. I’m rich in years but poor in experience, and may have to bypass really massive tomes until (or unless) I’m immobilised.

Fortunately there are lots of pictures, and the pictures really are beautiful.

I am of the opinion that chemistry is more complex than simulations of chemistry, and chemistry is and always will be faster than simulations of chemistry. There is something “spooky” or “entanglement-like” in protein folding.

Mike can jump in here and tell me I’m full of it.

What I’m thinking was triggered by Penrose, but not the same. I’ve tried in my intellectually limited way to find out if anyone thinks folding is a quantum computation involving entanglement, but I don’t have enough knowledge to understand the answers.

I have long thought that there must be some fairly fundamental reason why neurons having a clock rate millions of times slower than transistors can nevertheless outcompute transistors on certain kinds of tasks.

I’ve been told by AI geeks that the theoretical problem has been solved, but the hardware is difficult to build. I’m not convinced.

They can’t. It’s an illusion. What the brain is doing is very different from what people take it to be doing. Or at least that’s how I see it.

I would agree 🙂 Also, it’s awesomely efficient.

Also, there are 100 billion or so neurons, and trillions of synapses between them, constantly being adjusted in strength. And synapses are not the only communication channel between neurons: oscillating extracellular dendritic currents also affect neural firing.

I wonder if Wolfram has ever set up a cellular automaton in which the rules on the next iteration changed as a function of the pattern in the previous? Possibly – I’m not that far through it yet!
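Whether or not the book does this, the idea is easy to sketch. Below is a hypothetical Python example in which the rule used for each step is picked from the row just produced. The choice of rules (30 and 90), the density threshold of 0.5 and all function names are arbitrary assumptions, not anything taken from Wolfram.

```python
# Hypothetical sketch: an elementary cellular automaton whose rule for the next
# step depends on the pattern it just produced. Rules 30/90 and the 0.5
# density threshold are arbitrary choices for illustration.

def apply_rule(cells, rule):
    """One update of an elementary CA rule (0-255) with wrap-around edges."""
    n = len(cells)
    return [
        (rule >> ((cells[i - 1] << 2) | (cells[i] << 1) | cells[(i + 1) % n])) & 1
        for i in range(n)
    ]

def choose_rule(cells):
    """Pick the next rule from the current row: dense rows switch to rule 90."""
    density = sum(cells) / len(cells)
    return 90 if density > 0.5 else 30

def run_adaptive(width=21, steps=10):
    cells = [0] * width
    cells[width // 2] = 1
    rules_used = []
    for _ in range(steps):
        rule = choose_rule(cells)   # the rule is a function of the previous pattern
        rules_used.append(rule)
        cells = apply_rule(cells, rule)
    return cells, rules_used

final_cells, rules_used = run_adaptive()
print(rules_used)
```

The "rule about rules" here is deliberately crude; any function from the current row to a rule number would do.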

I don’t disagree. I doubt the theoretical problem has been solved. I suspect that Penrose is on to something, but he doesn’t seem to have nailed it. There is a project at Stanford called Brains in Silicon that claims to have created cheap, low power silicon neuron equivalents. Assuming they have, what next?

My opinion is that constructing even a simple brain is a problem equivalent to constructing a simple self-replicator from first principles.

I doubt it can be done without some evolutionary method.

I think Penrose is on to a red herring, myself. But I would agree about the evolutionary method. In fact, I’d say that brains build themselves by a quasi-evolutionary method.

I agree (while entirely lacking the qualifications to judge) that it seems wrong to attribute entanglement between molecules as the basis of consciousness, but I think something like entanglement is operating while proteins fold.

Again, I’m way over my head, but I think this is why we can’t simulate folding except at great cost, and why we can’t do biological design in the way imagined by some ID advocates.

It’s inviting hubris to say so, but I honestly don’t think consciousness is that complicated (possibly less complicated than folding proteins!). I think it seems complicated (or “Hard”) because we are (often) trying to ask an incoherent question. That’s why I coined the verb “conching”. If we think of being conscious as something we do, rather than something we are, and if, moreover, it’s a transitive verb, i.e. it requires an object (I conch this screen, the wind blowing, the bump on my head I got just now when I tried to walk through an up-and-over door that wasn’t up and over enough, because, presumably, I hadn’t conched its downness), then I think, firstly, the problem becomes more tractable, and secondly, it still captures pretty well everything we want to capture in the traditional notion.

Including qualia, even.

I agree on all points.

If it’s something we do, then strong AI is just a matter of building a doer. I think it’s an emergent property of “chemistry,” and is unlikely to be achieved any time soon in silicon.

Even though we will build better and better doers.

Ah, but it’s a particular kind of doing. When I “conch” something, I take an attitude towards it, which in turn means that I consider a number of possible ways of interacting with it, based on what I have learned from past interactions with it, including the outcomes of those actions. Which means, first of all, parsing it as a “thing”. And if I manage to “conch” myself, then I become “self-conscious”.

I completely agree.

Well yes, that was Skinner’s definition of consciousness. Maybe Hofstadter’s also. One can imagine building a computer capable of this, just as one can imagine faster than light travel and time travel.

I just think it will be much harder than most people imagine. And I think that one reason is it is hard to simulate chemistry.

I think people who see neurons as switching devices miss something important. For decades I have thought that there is a necessarily analog component to brains: the timing and strength of signals. That seems to me to form the basis of memory and neural computation.
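A toy illustration of why timing can matter, assuming a textbook leaky integrate-and-fire neuron (an idealisation, not a claim about real neurons; the weight, decay and threshold values are arbitrary). The same three inputs make the cell fire when they arrive close together, but not when they are spread out, because the membrane potential leaks away in between.

```python
# Minimal sketch of a leaky integrate-and-fire neuron in discrete time.
# Parameter values are arbitrary, chosen only to make the timing effect visible.

def fires(input_times, weight=0.45, decay=0.8, threshold=1.0, duration=20):
    """Return True if the neuron spikes at least once during the run."""
    v = 0.0
    for t in range(duration):
        v *= decay                  # leak: potential decays every time step
        if t in input_times:
            v += weight             # each input adds a fixed increment
        if v >= threshold:
            return True             # spike
    return False

print(fires({0, 1, 2}))    # inputs close together -> True
print(fires({0, 5, 10}))   # same inputs, spread out -> False
```

Nothing about the inputs differs except when they arrive, which is the analog "timing and strength" point in miniature.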

What manages that, and how finely do we need to simulate it? How on earth do we simulate a working system, even if we manage to build artificial neurons? That’s the part that I think is as difficult as assembling a replicator from first principles.

I suppose it could be one of those things that will look really simple in retrospect.

Are you familiar with recurrent artificial neural networks? When you feed the output back into the network you can model some interesting time-series-like phenomena. I’m not up to date on the latest in that area, though.
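The feedback idea in its smallest form: a single recurrent unit whose previous output is fed back in alongside the new input (an Elman-style update). This Python sketch uses arbitrary, hand-picked weights rather than trained ones; it only shows that the unit's output depends on the history of its inputs, not just the current one.

```python
# Minimal sketch of one recurrent tanh unit. Weights are arbitrary, not trained.
import math

def run_recurrent(inputs, w_in=1.0, w_back=0.9):
    """Feed a sequence through one recurrent unit; return every output."""
    h = 0.0
    outputs = []
    for x in inputs:
        h = math.tanh(w_in * x + w_back * h)   # new state depends on the old state
        outputs.append(h)
    return outputs

# Same final input (0.0), different histories, different outputs:
a = run_recurrent([1.0, 0.0, 0.0])
b = run_recurrent([0.0, 0.0, 0.0])
print(a[-1], b[-1])
```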

I’d like to think it will, but it could look more like quantum mechanics compared to Newtonian mechanics.

I wonder how important our senses and biological nature are to what we consider “intelligence”. We might be able to develop sentient software, but without the rich inputs we get from the world and the bath of hormones we hold in our skin, that intelligence might be quite alien to ours.

We know quite a bit about atoms, but cannot as yet figure out how to assemble them into a plausible simple self-replicator. If we succeed, the finished product might evoke “of course!”

Artificial brains need at least two capabilities we haven’t mastered. One is the ability to program themselves by modifying connections, and the other is the ability to be extended in size and complexity without succumbing to software bloat.

My thought is we haven’t really figured out how to bootstrap evolution, and my intuition is that it will be difficult to do as information processing as opposed to chemistry. Not that chemistry is easy. It’s just my gut feeling that chemistry embodies phenomena that are too complex to model as information. The emergent properties don’t scale as information.

Perhaps my thoughts on this are silly and incoherent.

That I agree with. I suspect that once one self-replicator is found, we’ll find other families of them shortly thereafter.

Unless I’m misunderstanding you, several neural network architectures and at least one genetic programming technique meet your first criterion.
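As a crude example of a network "programming itself by modifying connections", here is a Python sketch assuming a plain Hebbian rule: a connection strengthens whenever the two units it joins are active together. Real learning rules are more elaborate; the learning rate and activity pattern are made up for illustration.

```python
# Minimal sketch of Hebbian weight updates on a fully connected set of units.
# The learning rate and activity patterns are arbitrary illustrative values.

def hebbian_update(weights, activity, rate=0.1):
    """Strengthen w[i][j] in proportion to the co-activity of units i and j."""
    n = len(activity)
    return [
        [weights[i][j] + (rate * activity[i] * activity[j] if i != j else 0.0)
         for j in range(n)]
        for i in range(n)
    ]

n = 3
w = [[0.0] * n for _ in range(n)]
# Units 0 and 1 repeatedly fire together; unit 2 stays silent.
for _ in range(5):
    w = hebbian_update(w, [1, 1, 0])
print(w[0][1], w[0][2])
```

No one writes the weights in; they are set by the activity the network experiences, which is the sense in which such networks meet the first criterion.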

The second is the real problem, and where I agree with you that some form of evolutionary mechanism will be required to get to the necessary level of complexity.

One alternative from a science fiction story I dimly remember is that the first sentient machines will come from sentient meat. As technology advances, we’ll achieve the ability to augment our brains with non-organic hardware, both to repair damage and to improve upon nature. At some point the percentage of original organic material will become vanishingly small, and the body containing it can be shut down. Et voilà: a sentient machine.

And yes, that story does assume some significant hardware advances.

My hunch (more than a hunch, really) is that an AI machine needs sensorimotor capability – in other words it needs to be able to control its own data inputs (from the environment, virtual or real).

It’s all speculative. I’m just a pessimist when it comes to AI, and to some extent OOL.

I don’t expect breakthroughs in my lifetime (20-30 years), but I’d welcome being wrong.

I assume that brains evolved to control movement and to manage homeostasis. Reflexes would evolve into emotions. Onion-like layers of managers. Not entirely unlike corporations.

Of course corporations get bloated and die. Their corpses are assimilated by other corporations. Sometimes pieces of their DNA get assimilated into the feeding corporation’s genome.

Lizzie,

Pray Gary Gaulin never finds this.

I sometimes wonder whether a sentient being might evolve in virtual space, now that virtual space is so rich. It could make a good SF story.

Gary has assembled a mishmash of disparate ideas, some of which are not wrong, into a private worldview that is impervious to outside criticism. A “normal” person (myself included, of course) recognizes fantasy. Nothing wrong with fantasy as long as it’s recognized as such.

The semantic web will only accelerate that.

http://dbpedia.org/About

I think Arthur Clarke beat you to it. I don’t think he was first.

And of course the Terminator movies are based on this thought.

The first clue might be if switching networks began modifying their passwords to prevent human intervention.

My own fantasy is that expert systems will eventually become so necessary for human survival that they can never be turned off or modified except by their own learning algorithms.

The rest is Hollywood.

I do think politics will be very different in a hundred years. I think humans will lose control of bits of infrastructure such as banking and credit. Forbin will see to it that humans cannot screw it up.

I call my GPS Forbin. It knows better than I do how to get places.

Too late already! Well, almost 😛

https://en.wikipedia.org/wiki/Watson_(computer)

I think we’ll see an emergent AI in my lifetime. Maybe a Botnet?

Most likely candidate:

http://en.wikipedia.org/wiki/Conficker

For various definitions of AI. I don’t think any strong version of AI can be implemented in code.

By strong I don’t necessarily mean self-aware. Just able to extend itself indefinitely. I can’t even imagine the economic value of a program that could write programs that are not trivial and which solve arbitrary problems.

petrushka,

No: I wouldn’t say you are full of it. Protein folding does indeed appear to be “spooky;” but that is because binding sites are so complex that the movement of membranes and polymer chains can appear to be “purposeful.”

If we go back to that little high-school scaling-up exercise where we give kilogram masses the same charge-to-mass ratios as protons and electrons, we find that the potential energies of interaction between such masses, separated by distances on the order of a meter, are on the order of 10^26 joules, or 10^10 megatons of TNT.
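The proton-proton version of that exercise, done numerically in Python. Assumptions not stated in the post: two 1 kg masses each carrying the proton's charge-to-mass ratio, exactly 1 m apart, CODATA values for the constants, and 1 megaton of TNT taken as 4.184 × 10^15 J.

```python
# Coulomb energy between two 1 kg masses that each have a proton's
# charge-to-mass ratio, 1 m apart. Constants are CODATA values.
import math

E_CHARGE = 1.602176634e-19      # elementary charge, C
M_PROTON = 1.67262192369e-27    # proton mass, kg
K_COULOMB = 8.9875517923e9      # Coulomb constant, N m^2 / C^2
MEGATON_TNT = 4.184e15          # joules per megaton of TNT

charge_per_kg = E_CHARGE / M_PROTON      # charge carried by each 1 kg mass
distance = 1.0                           # separation, m
energy = K_COULOMB * charge_per_kg ** 2 / distance

print(f"{energy:.2e} J, about 10^{round(math.log10(energy))} J "
      f"or 10^{round(math.log10(energy / MEGATON_TNT))} megatons of TNT")
```

This comes out at roughly 8 × 10^25 J, or about 2 × 10^10 megatons, in line with the orders of magnitude quoted. Giving one of the masses an electron's charge-to-mass ratio instead makes the number larger still, since the electron's ratio is about 1836 times the proton's.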

Such soft matter molecules are also immersed in liquids, such as water, that provide a background temperature corresponding to kinetic energies of vibration that are of the same order of magnitude as the binding energies of these molecules. Those liquids also become polarized by the presence of these molecules.

So with that kind of jostling, and with literally thousands of charged sites along a chain and on the surfaces of a membrane, there are lots of things that can occur. We can see chains bending and coiling, literally crawling along themselves as though they are following some purposeful objective. Most of the configurations achieve some kind of minimum potential energy in the presence of all that jostling.

It has little to do with “entanglement” in the quantum sense. Charge redistribution occurs when two or more “neutral” atoms or molecules are in close proximity; and that charge redistribution is constrained by rules of quantum mechanics. Bonding and binding energies become complicated “mixtures” of quantum mechanical rules and the stresses that occur when geometrical arrangements don’t quite achieve minimum potential energies in the environment in which a structure is immersed.

A very simple example is the 104.45 degree angle between the two hydrogen atoms in an H2O molecule. When these molecules form a snowflake crystal, the result is a stressed, six-sided shape. If the crystal tried to form a flat sheet, which a genuinely six-sided structure (e.g., graphite) can do, it would have to buckle out of the plane in order to bond with adjacent molecules; and that leads to all sorts of complex structures and interactions with other dissolved molecules.

Another example that one can actually watch is to burn a flat piece of paper and see it buckle into complex shapes as it burns. You are watching tradeoffs between bonding angles between atoms and molecules and the macroscopic geometric shape of the entire structure.

Some of the simpler structures can be modeled on a computer, but as the structures get increasingly complex, computing power has to increase at least exponentially if not as a factorial.
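To get a feel for the difference between "exponentially" and "as a factorial", a short Python comparison of 2^n and n! for a few values of n. Here n is left abstract (say, the number of interacting parts); the point is only how quickly each outruns the other.

```python
# Compare exponential (2**n) and factorial (n!) growth for a few sizes n.
import math

for n in (5, 10, 20, 30):
    print(f"n={n:>2}  2^n={2**n:.3e}  n!={math.factorial(n):.3e}")
```

From n = 4 onward the factorial is always larger, and by n = 30 it exceeds 2^n by more than twenty orders of magnitude.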

I have to accept the “not really spooky” verdict, but you seem to have confirmed that it is too complex to compute with any existing hardware.

If I have any valid and interesting point at all, it is that you can’t model or simulate chemistry in a way that allows prediction of emergent properties.

Whether this is relevant to AI, I don’t know. I’m told by geeks in the AI field that the necessary hardware is not available and not on the near horizon.

Out of curiosity, why not? Given sufficient computing resources, it should be possible to model the neurons and connections in the brain. With high enough fidelity, that model should exhibit the same behaviors as the brain, including intelligence.

That would be at least the economic value of every programmer currently working.

Well, my view is that to get AI you need more than a virtual brain, you need a virtual body too. It is organisms that are intelligent, not brains, even though some bits of us are more dispensable than others.

And yes, I know that you can be “locked in” and still intelligent – but you are still benefiting from sensory inputs, as well as the hard (and sometimes soft) wired networks that are there because you were once, or evolved as, a whole intelligent organism.

I tend to agree, although I think that the sensors required by the brain could be considerably more varied than our meager five. To take an extreme example, consider the possibility of intelligent interstellar gas clouds. Then again, perhaps I read too much science fiction.

My question to Petrushka was more focused on why implementing an intelligent system in code would be impossible.

That’s a lot to give. We simply don’t have the hardware to model the way brains work. Stanford claims to have a chip that models 65,000 neurons. That’s about three orders of magnitude short of an insect brain.

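The problem isn’t just neuron count, it’s the connections: the number of possible connections grows with the square of the neuron count, and the number of possible wiring patterns grows exponentially.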
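Putting numbers on the connection problem, a Python sketch assuming "connections" means possible directed links between distinct neurons, each of which is either present or absent. The count of possible links grows roughly as the square of the neuron count; the count of distinct wiring diagrams is 2 raised to that, so it is reported as a power of ten. The function names are hypothetical.

```python
# Count possible directed links and (log10 of) possible wiring diagrams.
import math

def possible_links(n):
    """Directed neuron-to-neuron links, excluding self-connections."""
    return n * (n - 1)

def wiring_diagrams_log10(n):
    """log10 of the number of ways to choose which links exist (2 ** links)."""
    return possible_links(n) * math.log10(2)

for n in (100, 65_000, 1_000_000):
    print(f"{n:>9,} neurons: {possible_links(n):.2e} possible links, "
          f"about 10^{wiring_diagrams_log10(n):,.0f} wiring diagrams")
```

Real brains use only a tiny fraction of the possible links, but choosing which ones is exactly the search problem.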

Then you have the small problem of programming. Saying that intelligence will self-assemble is a bit like saying cells will self-assemble. Maybe, but evolution can be rather slow and may require some as-yet-unknown sequence of conditions.

Yes; given computing as we currently understand it, that is essentially correct.

There currently exist supercomputer programs that simulate condensed matter systems such as the formation of galaxies, the formation of chemical compounds, nuclear explosions, fluid dynamic systems, plasmas, and a number of other kinds of strongly interacting systems.

The galaxy example is a good one that illustrates the issues. There are many competing processes that are taking place when matter in the universe condenses into galaxies. Star formation and evolution produce supernovae that tend to blow apart the condensation due to gravity.

The problems come down to issues of scale. It is possible to zoom in on a small scale and compute to high precision what is going on at small scales. And you really need that precision because small errors can quickly magnify up to very large errors as successive steps of the simulation proceed. That alone takes up much computing power; so expanding the scale of computation simply gobbles up memory, time, and computational resources.
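How fast small errors can magnify, in a Python sketch that uses the logistic map x → 4x(1 − x) as a stand-in for one step of a simulation (an assumption; real simulations are far more complicated). Two runs start 10^-12 apart, roughly the size of an accumulated rounding error.

```python
# Two runs of a chaotic map, started almost identically, drift completely apart.

def trajectory(x, steps):
    """Iterate the logistic map x -> 4x(1 - x) and record every value."""
    xs = [x]
    for _ in range(steps):
        x = 4.0 * x * (1.0 - x)
        xs.append(x)
    return xs

exact = trajectory(0.3, 100)
perturbed = trajectory(0.3 + 1e-12, 100)

for step in (0, 10, 20, 30, 40, 50):
    print(step, abs(exact[step] - perturbed[step]))
```

The gap roughly doubles each step on average, so after a few dozen steps the two runs have nothing to do with each other; that is why the small-scale precision matters so much.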

But if you can zoom out and use some “higher” level rules to describe those small-scale processes, you can continue computing at a larger scale.

The problem comes in establishing what those higher level rules will be. As matter condenses, those rules emerge; they are not self-evident from the properties of the constituents themselves. So simulations take place at numerous levels; a “microscopic” scale to see what emerges, then larger scales using the rules that emerge at the smaller scale.
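A toy version of "zooming out" in Python: simulate many microscopic random walkers (each step is +1 or −1), then check the coarse rule that emerges for the population as a whole, namely that the spread (variance) of positions grows in proportion to time. The walker count, step count and seed are arbitrary; the point is that the higher-level rule says nothing about individual walkers, yet predicts the aggregate.

```python
# Many microscopic random walkers vs. the emergent macroscopic diffusion rule.
import random

def simulate(walkers=5_000, steps=100, seed=1):
    """Move every walker +1 or -1 at each step; return final positions."""
    rng = random.Random(seed)
    positions = [0] * walkers
    for _ in range(steps):
        positions = [p + rng.choice((-1, 1)) for p in positions]
    return positions

steps = 100
positions = simulate(steps=steps)
mean = sum(positions) / len(positions)
variance = sum((p - mean) ** 2 for p in positions) / len(positions)

# The coarse-grained rule predicts variance close to the number of steps.
print(f"measured variance {variance:.1f}, predicted {steps}")
```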

But with condensed matter, those rules emerge rapidly at every scale. Even the simplest molecules have properties that are nothing like their individual atoms. How charge redistributes in an atom in close proximity with another atom is nothing like how charge redistributes between compounds; and there are billions upon billions of combinations.

We have to learn the properties of the building blocks at each level in order to proceed up the ladder of complexity. Computing requirements expand factorially if we don’t make use of the higher level rules that come to dominate further evolution at each level.

There has been some progress in connecting emergent properties with the properties of the underlying constituents making up relatively simple compounds. But here again, the connections don’t usually take into consideration the temperature; they are “static” in the sense that they simply apply to fixed geometric arrangements of atoms at given temperatures.

Soft matter systems are far more difficult because the kinetic energies are comparable to binding energies; and the structures and their properties are extremely sensitive to small differences in temperature and to their interactions with their environments.

Research in condensed matter systems is a combination of experiment, simulation, mathematical theory, and the study of analog systems that may or may not shed some light on other systems. Even for a phenomenon as “simple” as superconductivity, we have a mathematical theory for low-temperature superconductivity: the Bardeen-Cooper-Schrieffer (BCS) theory. But there is not yet a very good explanation, or set of explanations, for superconductivity at high temperatures.

And so it goes with just about any condensed matter phenomenon you can name; and there are billions upon billions of them. That is why condensed matter physics is such a rich area of study; you simply can’t run out of phenomena to study.

Ah, so we only have to use 1,000 of them! Alternatively, we could wait 15 years for Moore’s law to get us to that level. 😉
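The arithmetic behind "1,000 of them" versus "15 years", as a Python sketch. Assumption: Moore's law read as a doubling every 18 months; the two-year doubling that is also often quoted would give about 20 years instead.

```python
# How long a 1,000-fold increase takes if capacity doubles every 18 months.
import math

speedup_needed = 1_000
doubling_period_years = 1.5

doublings = math.log2(speedup_needed)      # just under 10 doublings
years = doublings * doubling_period_years

print(f"{doublings:.1f} doublings, about {years:.0f} years")  # 10.0 doublings, about 15 years
```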

Whimsy aside, in a couple of decades the hardware may not be the limiting factor.

True, and a much larger problem. However, they can still be modeled even if they can’t all (yet) be implemented in actual hardware. The resulting system would run slower than a full hardware solution, but the model could still be accurate.

The modeling approach sidesteps the need for explicit programming by simply replicating what we observe in organic brains.

All that being said, I used that example as one way of implementing an AI in code, assuming the availability of appropriate hardware. I see the practical problems, but I don’t see anything that absolutely prohibits creating such a model. Therefore I see no reason to think that intelligence can’t be implemented in software.

My personal gut feel is that AI will emerge from sufficiently complex models of thought processes rather than of brains themselves. I can’t support that feeling with evidence, though.