As The Ghost In The Machine thread is getting rather long, but no less interesting, I thought I’d start another one here, specifically on the issue of Libertarian Free Will.

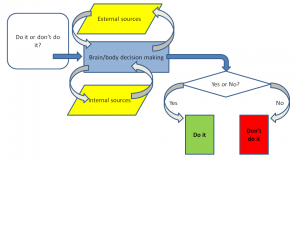

And I drew some diagrams which seem to me to represent the issues. Here is a straightforward account of how I-as-organism make a decision as to whether to do, or not do, something (round up or round down when calculating the tip I leave in a restaurant, for instance).

My brain/body decision-making apparatus interrogates both itself, internally, and the external world, iteratively, eventually coming to a Yes or No decision. If it outputs Yes, I do it; if it outputs No, I don’t.

Now let’s add a Libertarian Free Will Module (LFW) to the diagram:

There are other potential places to put the LFW module, but this is the place most often suggested – between the decision-to-act and the execution. It’s like an Upper House, or Revising Chamber (Senate, Lords, whatever) that takes the Recommendation from the brain, and either accepts it, or overturns it. If it accepts a Yes, or rejects a No, I do the thing; if it rejects a Yes or accepts a No, I don’t.
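As a rough sketch of the two diagrams (not a claim about how brains work), the flow can be written out in a few lines of Python. The names `brain_body_decision` and `act`, the evidence numbers and the threshold are all hypothetical, chosen only for illustration. The first function stands in for the iterative brain/body decision-maker; the second adds an LFW stage that accepts or overturns its Recommendation.

```python
from typing import Iterable


def brain_body_decision(evidence: Iterable[float], threshold: float = 1.0) -> bool:
    """Iteratively accumulate signed evidence until it crosses +/- threshold.

    A positive total means Yes (True); otherwise No (False). If the evidence
    runs out first, the sign of the running total decides.
    """
    total = 0.0
    for item in evidence:
        total += item
        if abs(total) >= threshold:
            break  # enough evidence gathered, stop interrogating
    return total > 0


def act(recommendation: bool, lfw_accepts: bool) -> bool:
    """Return True if the action is carried out.

    Accepting a Yes or rejecting a No means acting;
    rejecting a Yes or accepting a No means not acting.
    """
    return recommendation == lfw_accepts


# Hypothetical evidence for "round the tip up?"
recommendation = brain_body_decision([0.4, -0.1, 0.5, 0.3])
did_it = act(recommendation, lfw_accepts=True)
```

Note that the sketch has to be handed `lfw_accepts` from somewhere. Nothing in the code says where that value comes from, which is exactly the gap the purple parallelogram is meant to mark.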

The big question that arises is: on the basis of what information or principle does LFW decide whether to accept or reject the Recommendation? What, in other words, is in the purple parallelogram below?

If the input is some uncaused quantum event, then we can say that the output from the LFW module is uncaused, but also unwilled. However, if the input is more data, then the output is caused and (arguably) willed. If it is a mixture, to the extent that it depends on data, it is willed, and to the extent that it depends on quantum events, it is uncaused, but there isn’t any partition of the output that is both willed and uncaused.

It seems to me.

Given that an LFW module is an attractive concept, as it appears to contain the “I” that we want to take ownership of our decisions, it is a bit of a facer to have to accept that it makes no sense (and I honestly think it doesn’t, sadly). One response is to take the bleak view that we have “no free will”, merely the “illusion” of it – we think we have an LFW module, and it’s quite a healthy illusion to maintain, but in the end we have to accept that it is a useful fiction, but ultimately meaningless.

Another response is to take the “compatibilist” approach (I really don’t like the term, because it’s not so much that I think Free Will is “compatible” with not having an LFW module, so much as I think that the LFW module is not compatible with coherence, so that if we are going to consider Free Will at all, we need to consider it within a framework that omits the LFW module).

Which I think is perfectly straightforward.

It all depends, it seems to me, on what we are referring to when we use the word “I” (or “you”, or “he”, or “she” or even “it”, though I’m not sure my neutered Tom has FW).

If we say: “I” is the thing that sits inside the LFW, and outputs the decision, and if we moreover conclude, as I think we must, that the LFW is a nonsense, a married bachelor, a square circle, then is there anywhere else we can identify as “I”?

Yes, I think there is. I think Dennett essentially has it right. Instead of locating the “I” in an at best illusory LFW:

we keep things simple and locate the “I” within the organism itself:

And, as Dennett says, we can draw it rather small, and omit from it responsibility for collecting certain kinds of external data, or from generating certain kinds of internal data (for example, we generally hold someone “not responsible” if they are psychotic, attributing the internal data to their “illness” rather than “them”), or we can draw it large, in which case we accept a greater degree (or assign a greater degree, if we are talking about someone else) of moral responsibility for our actions.

In other words, as Dennett has it, defining the self (what constitutes the “I”) is the very act of accepting moral responsibility. The more responsibility we accept, the larger we draw the boundaries of our own “I”. The less we accept, the smaller “I” become. If I reject all moral responsibility for my actions, I no longer merit any freedom in society, and can have no grouse if you decide to lock me up in a secure institution lest the zombie Lizzie goes on a rampage doing whatever her inputs tell her to do.

It’s not Libertarian Free Will, but what I suggest we do when we accept ownership of our actions is to define ourselves as owners of the degrees of freedom offered by our capacity to make informed choices – informed both by external data, and by our own goals and capacities and values.

Which is far better than either an illusion or defining ourselves out of existence as free agents.

William,

Your error is in thinking that a physically-based computation cannot also be a valid one. It’s a silly argument to be making in the 21st century, especially on the Internet. You might as well argue that we can’t trust computers to balance our checkbooks because the “addition” that computers do isn’t valid. They’re just “compelled” to do what they do by the laws of physics, not because what they are doing is “true addition” according to mathematical laws. Their “addition” is as meaningless as: wind blows, leaves rustle.

Meanwhile, it’s amusing that you characterize others as “biological automata” when your own behavior in this thread is neatly encapsulated by the following robotic algorithm:

10 Repeat assertions.

20 Ignore counterarguments.

30 GOTO 10

William J. Murray,

Yes.

If a decision is so finely poised that a pizza makes the difference, I agree, that not much willing has been done. As for the “chaotic” part, I think the problem here is that you don’t understand non-linear systems. It’s a shame they got this name “chaos”, although I sort of like it. But I think you may be assuming that a chaotic system can’t be intelligent.

I’m not asking for a “you” that can “over-ride”. I don’t want to “over-ride” with some informed random whim. I want to make good, informed decisions. You keep sidestepping my question:

How can a decision be both uncaused and informed?

It’s true that it’s not easy for a crazy person to choose to be rational, which is why psychiatry is a challenging domain. But yes, we can help people be more rational, and yes, sometimes that can rise to the level of a choice.

The problem here, William, which you seem unable to step back far enough to see, is that while you yourself explicitly accept that we choose our beliefs on the basis of whether they are useful, rather than whether they are true, and point out that belief in self-efficacy (as I would put it) is much more conducive to mental health than belief that one is a “BA”, you seem unable to conceive of any alternative model of self-efficacy that one might believe in than your own “LFW” one. I don’t believe in “LFW” – for the simple reason that it makes no logical sense to me: it seems an oxymoron, unless you can explain how a decision can be both informed and uncaused. Mine may seem equally a-logical to you, but the fact is that it works just fine. I do not need to conceive of my Self as a loosely flapping final decider uninfluenced by any information; instead I conceive of my Self as the information gatherer-and-sifter, as enabled by the organism I call “me”.

Which is just fine. I’d much rather be a reliable and responsible material computation than an informed despot, overturning the well-researched recommendations of my cabinet for no cause at all.

There are a gazillion error checks within this system – and, indeed, none outside it. LFW cannot be an error checker because it has no information-gathering apparatus or criteria by which to check. By locking yourself into this “butterfly wind and pizza” model you are completely missing what a material decision-making process might be. It’s like dismissing a computer as a chaos of circuits and lights. Forget that word “chaos” – it seems to be confusing you. Focus instead on the words “iterative” and “feedback”, which are essential to chaotic systems, but are also essential to intelligent systems (by which I mean systems that output smart decisions from input). Not all chaotic systems are intelligent, but I suggest that all intelligent systems are chaotic.

Absolutely they are. Often the output is an equation. In what sense is an equation not a “‘true’ statement in any meaningful sense”? What else is an equation if not that?

Computed output is just fine with me. I’d rather compute an output than flail.

William, I have to say this: you do not understand the position of the people you are disagreeing with. This is a complete straw man. You think that you are seeing the “real” position we hold while not seeing it ourselves.

Consider the possibility that you are failing to see the position we hold.

Now: how can a decision be both uncaused and informed?

I don’t understand this question.

I do not know what the conclusion of any decision-making process I embark on will be in advance. If I did, I wouldn’t have to embark on a decision-making process! But that doesn’t mean that I never reach a decision – it means I have to go through the “chaotic, non-linear” process to get there.

Well, I don’t think there is a “linear objective truth”. I don’t know what that would mean. Regarding perception, I think we fit models to data, and the closer the fit, the closer, we infer, we are to reality. As for action – there is no “truth” about action – we just make the best decision we can, given our options and goals. Both those outputs are perfectly understandable as outputs from a “material computation”.

There is no need (and no use, I would argue) for an extra module.

I think you need to know a little more about non-linear systems.

As an example, William:

There is a great deal of interest these days in “machine learning” – for example in using computer algorithms to help discern, for instance, whether a person has a particular medical or psychiatric condition, or, more usefully, how likely they are to respond to a particular course of treatment or what the prognosis is likely to be.

These algorithms are often non-linear (the best ones are), and, indeed, “chaotic”. They are initially “trained” on a data set where the answers are given, then tested on new data, where the answers are not given.

Sometimes very high accuracy rates are obtained. The real test, however, is to try them on data where nobody yet knows the answer (prognosis, say) but where we can test the prediction later.

This is essentially how I suggest intelligent agents come to decisions, which can be both perceptual (“is it a bird? is it a plane?”; “is he guilty or not guilty?”) and plans for action (“strawberry or vanilla?”; “treat or don’t treat?”).
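To make the train-then-test procedure concrete, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions: I have swapped in a one-nearest-neighbour classifier as a simple stand-in for a non-linear algorithm (I am not claiming it is “chaotic”), and the data, the `true_label` rule and every function name are made up for the example rather than drawn from any real clinical data set.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Example = Tuple[Point, int]


def true_label(p: Point) -> int:
    """Hypothetical ground truth: 1 when both coordinates share a sign.

    This boundary cannot be drawn with a single straight line, so a
    linear rule would not capture it.
    """
    return 1 if p[0] * p[1] > 0 else 0


def train(examples: List[Example]) -> List[Example]:
    """'Training' a nearest-neighbour model is just storing the answers given."""
    return list(examples)


def predict(model: List[Example], p: Point) -> int:
    """Return the label of the stored example closest to p."""
    def sq_dist(example: Example) -> float:
        (x, y), _ = example
        return (x - p[0]) ** 2 + (y - p[1]) ** 2

    _, label = min(model, key=sq_dist)
    return label


def accuracy(model: List[Example], points: List[Point]) -> float:
    """Fraction of points whose prediction matches the withheld answer."""
    hits = sum(predict(model, p) == true_label(p) for p in points)
    return hits / len(points)


# Training set: answers are given.
coords = [-2.0, -1.0, 1.0, 2.0]
training_set = [((x, y), true_label((x, y))) for x in coords for y in coords]
model = train(training_set)

# New data: the model never sees these answers, only the evaluator does.
new_points = [(1.5, 1.5), (-1.5, -1.5), (1.5, -1.5),
              (-1.5, 1.5), (0.5, 0.5), (-0.5, 0.5)]
print(accuracy(model, new_points))
```

On this toy data every held-out point is classified correctly, but that says little by itself: the points were generated by the same rule as the training set. The stronger test remains the prospective one, where nobody knows the answer until later.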

What you seem to want is some additional module that can overturn the output of the computation or confirm it. Well, fine.

But what criteria or information is it going to use that could not be available to the computation? And if none, what use is it?

Under materialist computation, the “Liz” computation will understand or not understand whatever its particular computation dictates. I cannot predict how the “Liz” computation will react to what I say. A sound argument and good evidence might as easily embed the “Liz” computation further into its current position, whereas a lollipop and some Vivaldi might change your worldview. Who knows? If you “understand” something I write, the content of that understanding will be whatever the particular, idiosyncratic computation locally labeled “Liz” generates – good, bad, nonsensical, whatever.

This is a nonsensical question. It’s like asking me how a bagel can both be unbuttered and have jelly on it. Information is not necessarily a sufficient cause for anything to happen. The only thing I can say here is that you must be assuming the consequent to even think the question doesn’t represent a non-sequitur – that information **is** sufficient to cause a decision. From my perspective, decisions (at the free will origination point) cannot be sufficiently caused by “information” or else it is not a decision at all – it’s a computation.

I think you may be assuming a meaning for “intelligence” that you do not have available to your worldview. “Intelligence” is nothing more, and can be nothing more, than whatever physics materially computes. You think of it, and I think of it, exactly what our physics computes us to think of it (under materialism) and nothing more.

Your computed concept of what “chaos” is, and what “intelligence” is are not superior to, or “more accurate” than, whatever I think of them, because both of us think of them exactly whatever our individual, idiosyncratic physical computations compel us to. Under your worldview, there is no absolute arbiter available, nor any means of the computation to make absolute evaluations, by which the “WJM” and “Liz” computations can check the accuracy or truth-value of their respective computations for “chaos” and “intelligence”.

In the sentence I quoted, you are rampantly stealing concepts not available to your worldview by inserting semantics that only have significant values outside of your worldview. There is no “WJM” that is “assuming” incorrect values for “intelligence” or “chaos”; there is a computation of physics that produces whatever it produces without any comparative arbiter or means to access such.

Then the question remains, why are you arguing with me? Why should one computation of physics argue that another computation of physics is “wrong”? My computation cannot be wrong; according to materialism, nothing I say, do, think, believe, or conclude can violate the physics computation that produces it; whatever I think, say, believe and conclude is the perfect manifestation of physics as it computes through whatever idiosyncratic pathways it happens to develop in my local case.

So, why are you debating me? Why are you arguing “as if” I, or anyone else, is in “error”? According to your paradigm, I only believe whatever physics computes to exist as my beliefs. If you do not like a sunset, do you start debating the sunset to get a different set of colors? If you do not like the weather, do you debate the air? Why do you want to change what physics happens to have wrought in my particular location?

Unfortunately, the computation known as “Liz” has no way of knowing if the computation known as “Liz” is reliable and responsible, other than such views simply being generated by the computation, which can as easily be computed for anyone – including despots and the deranged. If materialism as you argue it is true, then all biological automatons that believe they are reliable and responsible, including the deranged, despots and the devout, arrive at that perspective the exact same way you do – they are compelled by physics to think such.

Which makes your quoted statement above another case of rampantly stealing concepts you have no right to use. SO WHAT if your computation makes you think that you are reliable, responsible, right, rational, and realistic? Jeffrey Dahmer’s computation could as easily make him feel the same way. Same with all your debate opponents. Same with Hitler, Stalin, and Mao. Same with any Ayatollah or predatory criminal.

Your assertion has no value when stripped of the language you have no conceptual right to. All you are saying, under materialism, boils down to “blah, blah, blah”, same as what anyone else is saying, produced by the same forces, incapable of being arbitrated by anything and with anything other than the selfsame system of personal, relative views.

Sure. What’s the problem with that? And what’s “locally labeled” about the Liz? I’m pretty universally labeled that (well, Lizzie, or Elizabeth, rarely Liz – but I know who you mean :))

I don’t agree that it is a nonsensical question. I didn’t say that information was a sufficient cause for anything to happen (although it may be true that nothing can happen without a transfer of information – depends on how we define information).

So let me phrase it differently:

You say that a “decision” that is output by a computation is not a decision at all.

What, then, in your view, is the difference between a computation that outputs what I would call a decision (for example, an estimate as to which of two actions is most likely to achieve a given goal), and what you are calling a decision?

Your use of language is misleadingly sloppy here, William. “Intelligence” is not, in any regular usage, “whatever physics materially computes”. You are conflating the output of a computation with the whole computational system, including inputs and outputs. A system that computes which of a set of possible actions is most likely to bring about a computed goal, given lots of inputs, including inputs that are sought as a result of a previous output, is, arguably, an “intelligent” system. It certainly behaves in a way that we tend to describe as “intelligent”, whether applied to a robot, or a weather-forecasting system, or a living animal.

Similarly, a system that parses the physical world into discrete objects, bound to properties, that allow good predictions to be made, is an intelligent system, with an effective perceptual system – it can judge that this is A, and that is not-A.

I’m not sure what kind of decision-making you are talking about, but it seems to be something to do with arriving at true statements about the world. Is this correct?

If so, I’d say both material comput-ers and live comput-ers are incapable of arriving at true statements about the world, but extremely capable of making good predictive models, the accuracy of whose predictions can be tested, and which, if found reliable, indicate an underlying reality.

I find this a non sequitur.

No, but there are predictions and data. If my predictions fit the data better than yours, then we can infer that my model is a better model of some underlying reality. So models are able to be evaluated, they just won’t ever be completely error-free.

I deny the charge. I could equally say you are stealing my concept, which is of an informed decision-making agent consisting of data-gathering and goal-generating systems in which actions or perceptual models can be error-tested, and trying to give some uncaused homunculus the credit. Of course in my view there is a WJM, just as there is a sun and a moon, and an earth, and a house and a tree and a city, and justice and love, and art and music and geology and the UK and me. We parse the world into things with properties – we are not “stealing” when we do this, and language (and thus semantics) is the tool we as humans use not only to communicate our parsings but to contribute to them.

Why shouldn’t it? Error monitoring and correction is one of the things that intelligent material systems do, perfectly successfully. And my error-monitoring system is detecting an error in your reasoning, because you are unable it seems to explain how a decision can be anything other than the result of a computation that takes into account vast amounts of information, and simulates lots of different outcomes, and weighs them against current goals. What else could do this, other than a computation?

Another non sequitur, William. Just because a computation can produce an error doesn’t mean that there is no such thing as an error! A machine-learning algorithm starts by making a great many errors, then reduces them. A good machine will make a few errors, but, hopefully, very few. Just because your physical system works at one level (outputs a decision) doesn’t mean that it works at another level (outputs the correct decision).

Because I think you are incorrect 🙂

Well, in your case, your physics has made an error. I’m trying to fix it for you.

If debating the sunset induced a nicer set of colours, sure I’d do that. If debating with the weather would persuade it to rain at night, and the clouds to clear away in the morning, I’d do that. However, it doesn’t seem to work. Arguing with a person, however, makes a huge difference to the physics of their brains. In fact almost everything they encounter does. So there’s a reasonable chance that arguing with you will change your mind.

Check out my diagrams again 🙂

Liz is not “a computation”. Liz computes. And Liz (well, Lizzie) can test, and does, her computations every moment of the day, against new incoming data, which sometimes are sought as a result of the output of a prior computation.

That’s how Liz[zie] becomes reliable and responsible – she learns what predictions can be relied on, and what can’t – which models work, and which don’t.

What does “compelled by physics” mean? Does that mean “unpersuadable”? If not, in what sense are they “compelled”?

I honestly think the problem here is that you are conflating different levels of causal explanation, and saying that because at one level, we can describe a process in terms of neurons, or ions, or quarks, or whatever, there can be no other level of description without “stealing” someone else’s concept.

But there’s no “stealing” going on. It would be wildly inefficient to explain why a balloon burst in terms of the actual Brownian motion of every actual gas molecule in the balloon – instead, we “steal” a concept called “pressure”, even though no “pressing” is going on, when considered at the molecular level – just haphazard motion in all directions. Same with a weather system – we talk about a “slowly moving depression” or a “ridge of high pressure”, or a “developing storm system”. Similarly, I say “I wrote this post”. It’s the level of analysis that has greatest causal efficiency – “I” have reasonably stable properties, and if I act in a certain way, certain things are likely to happen. I am a thing, in other words, and my properties include being able to make other things happen.

Indeed. So is that the core of your argument – that a mere computation can’t tell you who is morally right (as opposed to making verifiable predictions)?

I would agree, tentatively (depending on what we mean by the terms) but how does your LFW tell you what is morally right? What is it we are supposed to have “stolen”?

I don’t think you have understood what I am saying.

Concept stealing. There’s nothing for “liz” to be other than the ongoing computation. Liz doesn’t compute anything; Liz is the ongoing computation. Liz is not a separate entity from the ongoing computation.

IOW, all you are saying when you say “Liz computes” is “the computation computes”. Liz = computation.

Why “stealing”? From whom? Why can’t I call the organism typing this post “Liz” (or Lizzie, preferably)? Who says that if I do, I’m “stealing”? I don’t want to be “a separate entity” from the computation that the organism-called-me is doing. One of the properties of Lizzie qua organism is that she computes.

Nope. I’m saying that you are using terminology and concepts via appropriated terminology that your premise eradicates. IMO, you think that because you are intellectually holding to materialism, and because you use the terminology of “I” and “will” and “morality” as if they are pretty much the same thing as when a non-materialist uses those terms, that materialism **must** therefore be able to produce such conceptual distinctions.

For instance, you use the word choice and decision as if it is the same as a computation; as if it is necessarily the same thing. It’s not the same thing under non-materialism as materialism. Same with “will”, “I”, etc. – those are not the same concepts at all under the two different schema.

Physics doesn’t make errors. This sentence is nothing but stolen concepts materialism has no basis for. Physics produces both the output AND the idiosyncratic sensation that the other person is in error. But, there is no “error” other than as an arbitrary label applied to something that is different from the thing doing the labeling. Are maple leaves “in error” according to pecan tree leaves? There is no standard that is a “true” leaf.

That’s all beliefs are under materialism – physical features, shaped like leaves from the computation of the individually idiosyncratic process. To claim that one belief is false and another true deeply relies upon concepts and resources not available to the materialist worldview – in fact, it relies upon concepts necessarily negated under that paradigm.

Stop telling me I’m a computation. I’m not. I’m an organism that computes. One of the things I compute is a model of myself, and I call what that model models “me”. But I do not mistake the map for the territory. What I model, when I model my self, is Lizzie-the-organism.

Indeed, I’d say that it is when an organism (or machine, possibly) becomes capable of modeling itself as an organism that it becomes what we call “self-aware”.

You’re stealing concepts that are not derivable under materialism to make it seem as if materialism can provide what non-materialism provides – meaningful distinctions between “I”, “will”, “choice” “error”, “truth”, that prevent self-referential incoherence.

You use those words to make it seem as if there is a fundamental difference between what you say, and decide, and do, and what a rock does rolling down a hill, what sounds it makes and what path it happens to take. There is no fundamental difference. It’s just physics doing whatever it does. There is no “erroneous” path down the hill. There is no erroneous sound the rock makes. There are no erroneous words I can speak under command of physics – no “false” beliefs or wrong conclusions.

Those terms require that something exists by which they can be arbitrated beyond that which is making the claim and computing the answer in the first place. You cannot calibrate or judge a ruler with the same ruler, and under materialism that is all there is – a ruler that equally produces sage, saint, madman, despot, true, false, error, morality, immorality. “You” cannot “collect data” from “outside the system” in order to check the system, because “you” are the system, you **are** the collection and interpretation process, and you **are** the system that produced the potential error in the first place.

There is no premised or possible escape from the total system bias of the computation.

Say, William — will you wake us up when you have finished with beating this strawman?

That’s all an organism is – a computation of physics. You’re unable or unwilling to accept the necessary ramification of materialism by falsely creating a distinction between “you” and the computation. Under materialism, that is all “you” are – the computation of physics, because nothing else exists.

Or, are you saying that an “organism” is something other than a computation of physics?

Well, I’m not the only one to keep asking you: what is the difference? If the result is the same with and without a LFW module, what does the LFW module actually do? And with what information does it do it? You keep evading that question.

My will, morality and “I” seem identical to yours except that yours has some non-material something that doesn’t seem to have any effects. What is the extra something that distinguishes “mere” material “I”, “will” and “morality” from the non-materialist version of the same concepts?

Well, what is a decision under non-materialism? In what sense is the decision-like output of a computation (“this brain is probably the brain of a person with schizophrenia”) not a Real Decision, but the output of a psychiatrist coming to the same conclusion, a Real Decision?

The sentence is meaningless! “Physics” is a scientific discipline. “Errors” are made by decision-makers. The question is whether a material decision-maker can make errors. They certainly can. Except you deny that there is such a thing as a material decision-maker….

OK, we are clearly barking up completely different trees here. Can we take a concrete example?

Let’s take my machine learning example. On the one hand we have a physical system that takes in input, and outputs a treatment recommendation – makes a decision in normal English usage. On the other hand we have a clinician who outputs the same treatment recommendation. Subsequent events will confirm whether the treatment was effective or not.

Are you saying that the machine is not making a decision but the clinician is? Can we stick with that example before we get on to what views pecans hold about maples?

Again, you mistake the map for the territory. Just because the territory can be mapped on paper doesn’t mean the territory is “only” paper “under” mapping.

It relies on fitting models to data, and both are readily available to materialists and non-materialists alike.

Right, well, I don’t understand what you mean by “computation” then. What do you mean when you say an organism is “a computation of physics”? I’d say an organism was a physical object, which carries out processes, computations being one of those processes, at least in some sense, for most organisms.

That doesn’t make an organism = a computation. What something is isn’t necessarily the same as what something does.

OK, William, you really need to provide your working definition of “computation”. I think you are using it in a very different way to everyone else. I’m taking it to mean: a process that outputs a solution to some problem.

The solution can be an action, or a conclusion.

Sure it is. I mean, you could argue (and indeed I do) that evolutionary processes are computational, the output being a series of “solutions” to the problem of how to reproduce/persist in an environment full of resources and threats, but that wouldn’t be all an organism is.

So if you are asking me whether I think that an organism is the output of a computational process, yes, I’d say it is. But that isn’t the same as saying that an organism *is* a computation.

Or that “the output of a computational process” is an adequate description of an organism. Obviously it isn’t – many outputs of computational processes are not organisms.

Nor is it, itself, a “computation”. As I said, an organism may carry out computations, but that’s not the same as saying it *is* a computation.

So no, I don’t think an organism *is* a computation. I think it’s a thing, with properties, one of which is to compute – produce solutions to problems.

Note how you use the term “result”. What result? What kind of result? The philosophical result couldn’t be any more different – computed outcomes one is incapable of changing (because they **are** the computed outcome), vs a free will capacity to supersede all such material computations.

The projected actual result between a computation confined to locally available processes, parameters, information and computed goals couldn’t be any more different from a result not confined to such limitations and parameters.

The only “result” you could be referring to here would be “how would humans experience the distinction”, **as if** what they are experiencing now could be drawn from an actual materialist reality, and then demanding me to answer how a non-materialist, LFW experience would be different from what you contextually assume can be a materialist experience.

Even if I agree arguendo that there is no experiential difference, there would be a huge actual and a huge philosophical difference in what the two systems meant and could deliver.

They couldn’t be any more different. You, your will, and your morality are nothing but computations and if you have garbage in, there will be garbage outputted. My system has the capacity to recognize garbage by comparing it to or by analyzing it via an absolute standard, and reprogramming the system from outside of it, and by finding or inventing information not available to the computation.

For a computation, if the computation says torturing children is moral, then it is moral. For LFW, everything claimed to be moral can be held against a presumed absolute standard, and the computation corrected by an outside agent (self as free will) with a means to reprogram erroneous processes. That is not available to the materialist scenario.

I’ve already provided it.

Result=the action taken or the conclusion drawn.

Look at my diagram.

Specifically, look at the first, and compare it with the second. Tell me what the LFW module does that is not already done by the “brain/body decision-maker”, and how the final output (do it vs don’t do it) is affected. That

I’ve bolded the begged question (ignoring the fact that yet again you have confused the map for the territory – the model is not the modelled). Yes, indeed, without the LFW module, the decision-making has ended one stage earlier. You seem to call the LFW module “one”, here. I’m asking what difference the “one” makes. Sure, once the decision to act is made, it may not be revocable (although many decisions are), but it’s not true to say that the decision that is output from the brain/body decision-maker module is permanent. So adding the LFW/one module doesn’t alter whether the decision is permanent or not, or even changeable. Subtracting it just means that if a decision is going to be changed, it has to be changed by the brain/body decision-maker module, not the LFW/one module (which we’ve just subtracted).

So what, philosophically, is the difference?

OK, in that case tell me what else is available to the LFW/one module, not available to the brain/body decision-maker module.

The “result” I’m referring to is the action executed or conclusion drawn. But equally, I’d like to know what you think it would be like to have an LFW, as opposed to simply believing I had an LFW but not having one, or, indeed, not believing I have LFW but being quite happy to consider myself as consisting of the brain/body decision-maker module aka the organism called Lizzie.

OK, that’s what I want to know. What can one system deliver that the other can’t? And what additional resources can it access in order to deliver it?

So, all you were doing when you said that Liz is not a computation, but rather an organism computing things, was being disingenuous?

If the organism is a computation of physics, and all it does is compute via physics, then all Liz is, ultimately, is a computation of physics.

What you need to admit, Liz, is that under materialism, all you are is a computation of physics. Everything you say, think, believe, conclude – it’s a computation of physics. Nothing more, nothing less. “Liz” is not a separate thing “doing” the computing. “Liz” is not “in control” of the computing. “Liz” is not an external or free entity that “directs” the computing. Liz is, and Liz does, and Liz thinks, whatever physics compels – right, wrong, intelligent, stupid, wise, nonsensical, reasonable, deranged – whatever; and all of those things are nothing more than morphological and chemical variances that differ from one idiosyncratic physical entity to another.

Whatever “Liz” thinks about her thoughts, and about her beliefs, however “Liz” feels about the views of others, are just the results of physics processing non-linear, unpredictable, chaotic influences that “Liz” has no control **over** because “Liz” is the product of those things. If physics commands Liz to bark like a dog and believe she has made a sound argument, that is what will occur. If physics commands Liz to believe she has gathered up evidence and information and scientific data that supports her view, when all she has actually done is sniff some marigolds and chewed on an old tennis shoe, that is what Liz will think and believe.

If physics commands Liz to believe she has error-checked her thoughts and beliefs and come away satisfied, when all Liz has actually done is watch a Three Stooges movie, that is what will occur, and that is what Liz will think.

Computations cannot do, think, say, believe, consider or conclude anything other than what physics commands. Period. And physics isn’t interested in truth, or reason, or valid conclusions, or proper premises, or coherent worldviews: physics just produces whatever the heck physics happens to produce.

I’ve done this several times in this thread alone. There’s no reason to do it again.

It can access everything outside of the limitations of the computation; it can rewrite the computation, redirect it outside of the capacity of the computation; it can suspend the computation; it can generate new data, new information unavailable to the computation; it can shut down that computation and write another one; it can operate from self-directed, demiurge faith unavailable as a computed process.

Under materialism, all individual computations are limited in what and how they process, how and what data is collected and interpreted via an idiosyncratic (biased) process, and what kind of “reprogramming” can occur – what can be extrapolated via the normal sequences of physics.

Under materialism, there is no available absolute standard by which to error-correct or discern true statements from false. Under materialism, there is no capacity for the individual to will itself to be or become something other than what the computation dictates, or to control or command the system in any way other than what the system dictates.

OK, William, let’s take a (hypothetical) God’s Eye view here (stolen concept indeed, but let it pass for now….)

On earth are two organisms (well a great many in fact but two in particular). One is referred to by other organisms as William, and the other as Lizzie.

Lizzie refers to William as William, but to her self as “I” because that’s how things are done around here. Similarly, William refers to Lizzie as Lizzie (well, Liz, but no matter, Lizzie knows who he means), but to himself as “I”.

Lizzie and William both know, however, that they are exemplars of the kind of organism called “human”, and they both know the names by which others call them. In other words, they can model a world that contains humans like them, and including them, with names like Lizzie, William, Neil, Petrushka, and so on.

And they know that these organisms, humans, do things, decide things, conclude things, can be ordered to do things, can order each other to do things, can organise groups of people into doing things etc. In other words, that they are fairly autonomous agents – that it is reasonable to say: William stole the cookies because he was hungry. Or even that Lizzie gave William cookies because he was hungry. Or that William talked Lizzie into giving him cookies, because he was hungry.

Add that knowledge to the knowledge that they themselves are exemplars of humans, and we have Lizzie modeling herself as an autonomous agent. But Lizzie also knows that while she has a small amount of control over the actions and decisions of other humans (she can persuade them to give her cookies), she has a much larger amount of control over her own actions and decisions.

In other words, Lizzie-the-organism models Lizzie-the-organism differently from the way she models other organisms. Lizzie-the-organism, to Lizzie-the-organism, is “the human organism Lizzie-the-organism can control a gazillion times better than Lizzie-the-organism can control any other organism”.

And she expresses this special status of Lizzie-the-organism from the point of view of Lizzie-the-organism by referring to Lizzie-the-organism as “I” – which is unambiguous, because all the human organisms know that when a human organism uses the word “I” they mean the organism doing the speaking.

So “I” doesn’t mean “the model Lizzie-the-organism makes of Lizzie-the-organism”. What it means is “what Lizzie-the-organism is modelling when she models Lizzie-the-organism as the human organism over which she has a gazillion times more control than over any other organism.”

And each human organism has a comparable perspective – that there is one human organism over which they have a gazillion times more control than any other human organism.

And from the God’s Eye perspective, there are lots and lots of autonomous organisms running around, making decisions, interacting, influencing each other’s actions, controlling to some extent each other’s actions, but primarily acting autonomously – constrained, but not dictated, by other humans and physical limitations.

They are, in other words, self-efficacious. Some of them describe this as having “free will”. Others merely say that they accept responsibility for their actions. But none is “stealing” a concept when they refer to themselves as “I” and assert ownership of their own actions. They are doing no more than when they parse the world into autonomous causal agents of which they are one.

If you have, either you have misunderstood my request, or I haven’t recognised it as a response.

Please provide a link to somewhere where you have provided it. Or repeat it.

You are the one confusing the map for the territory, not me. You are using a map that cannot correspond to the territory (materialism as actually existent) you insist exists, because the map uses terms and concepts unavailable to the territory presumed to actually exist under materialism.

A materialist insists a flat territory actually exists – everything is the product of the computation of physics. That territory is fundamentally flat, because everything is the same essential thing, even if there are variations of color that can be used to differentiate one area from the other based on the colors.

Then you take a non-materialist map where the color variations conceptually, symbolically mean a mountain exists in that spot, or a valley exists in that spot, and use those terms – “mountain”, “valley” to label the color variations of your flat terrain that correspond to the color variations on the topographical map of non-materialists.

You say – see, this area here is a mountain, and this, over here, a valley – but, when you say “mountain” or “valley”, all you are conceptualizing is a different colored area that is as flat as everything else. When the non-materialist conceptualizes “mountain” and “valley”, they are at a fundamental variance to a “flat” terrain. The colors mean something other than just a color variation on the terrain.

But that is all “self”, “I”, moral, immoral, right, wrong, true, false, will, coercion, reason, madness, Dahmer, Gandhi can be under materialism: different color variations of the same flat topology – appearances of physics as it computes whatever it computes.

Under non-materialism, those variations are not superficial, variant colors of ***the same thing***; they are entirely different kinds of things, with entirely different fundamental properties.

Materialists are the ones mistaking the map for the terrain, not non-materialists. We hold that the terrain is not flat like the map, with different colors on it, but rather that the colors and markings **represent** things that are entirely different and that cannot be expressed via a flat map.

Under materialism, “I” is just a part of a flat terrain with a different color. That different color is not a “code” that means that the represented “I” is something different; the “I” really is just a local color of a flat terrain. “Good” is just a local color. True, false, will, choice – all just local colors, not “code” for “something else”.

But that is how you use those terms – you use those terms as if they were code for “something other” than “computation of physics”; it cannot be, under materialism. You only have a flat map with colors on it. Those colors do not symbolically represent anything; under materialism, they are just different colors on the flat terrain.

Well, I want to know what those things are.

So can the brain-body decision-making module. That’s the point of the feedback loop – the part that makes it “chaotic” and non-linear. The output can be: “rewrite the computation”. In fact that’s exactly how it appears to work.

such as?

so can the brain-body decision-maker module. And does.

Well, that’s an oxymoron. Data are “what is given”. “Generating data” is at best loose. If you mean “collect data”, then the brain-body decision-maker module can, and does, do that. Most often the output is “collect more data”. E.g. move your eyes, or continue listening.

What information unavailable to the computation?

So can the brain-body decision-maker module, and does.

wut?

Yes, but not nearly as limited as you seem to think. Nothing, apart from that demiurge thing, is not possible to the brain-body-decision-maker module, which has all the data-collection tools and program-writing/suspending/clearing tools you need to do what you seem to think the LFW module must do, while the LFW module has, apparently, no such tools.

If it has, where/what are they?

Maybe not “absolute” (there is always measurement error) but the standard by which we correct errors is provided by new data. If we make an error, the result will not be what we expect. Oddly enough, the brain’s error-correcting mechanisms are fairly central to my own research.
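
To make the "new data as the standard" idea concrete, here is a minimal toy sketch. It is not a model of any actual brain mechanism; the function name `correct_estimate` and the `learning_rate` parameter are invented for illustration. An expectation is compared with what is actually observed, and the mismatch is used to correct the expectation:

```python
def correct_estimate(estimate, observations, learning_rate=0.5):
    """Nudge an estimate toward each new observation.

    Hypothetical toy example: the "standard" used for correction is the
    incoming data itself, not an absolute reference.
    """
    history = []
    for observed in observations:
        error = observed - estimate        # the result was not what was expected
        estimate += learning_rate * error  # correct in proportion to the error
        history.append(estimate)
    return estimate, history


# Start from a wrong expectation (0.0) and repeatedly observe 10.0.
final, history = correct_estimate(estimate=0.0, observations=[10.0] * 8)
```

Each pass shrinks the remaining error, so the estimate approaches the observed value without ever needing a standard beyond the data coming in.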

Only if you insist on separating “the individual” from the brain-body decision-making module. My point is that that separation is neither warranted nor sensible.

There’s nothing left for the disembodied LFW to do. At least you haven’t named one yet (apart from the demiurge).

“We are physical systems” does not entail “We are just physical systems.”

You miss the point entirely. Even if the computation can do some rewriting of its code, it can only rewrite the code as the computation dictates. LFW can rewrite the code beyond and outside of what the computation dictates, unconstrained by the parameters of the computation.

So you keep asserting. I wish you’d actually read my posts. At least provide a counter-rebuttal rather than repeat the same assertion I’ve just attempted to rebut!

I do not recognise this description of what I “insist”.

I’m sorry, this metaphor does nothing for me at all. I honestly don’t know what you are talking about.

I’ll try to eschew the map metaphor myself:

When I say you are mistaking the map for the territory, what I mean is that you appear to think that I am saying:

“I” am merely the output of a computation (or possibly “a computation”).

I am not.

I am saying that what I refer to as “I”, is Lizzie-the-organism, who is not a computation, but can compute. And one of the things Lizzie-the-organism computes is a model of Lizzie-the-organism.

Now, that model is a computation, or rather the output of one. Moreover, it is the output of a computation conducted by Lizzie-the-organism.

But when I refer to “I”, I am not referring to the model, I am referring to what the model is modelling, namely Lizzie-the-organism.

You are mistaking my model of Lizzie-the-organism (“map”) for Lizzie-the-organism herself (territory).

I am not saying I am the result of my own computation. I’m saying that I compute a model of myself.

There is a difference.
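
A rough way to picture that difference, purely as an illustrative sketch (the class `Organism` and its methods are hypothetical names, not anything from the diagrams): the organism is the thing doing the computing, the self-model is one of its outputs, and "I" picks out the former.

```python
class Organism:
    """Hypothetical stand-in for Lizzie-the-organism: a thing that can compute."""

    def __init__(self, name):
        self.name = name

    def model_self(self):
        # The self-model is the output of a computation the organism carries out.
        return {"models": self.name, "kind": "human", "controls_own_actions": True}

    def refer_to_self(self):
        # "I" refers to the organism doing the speaking, not to the model it built.
        return self


lizzie = Organism("Lizzie")
self_model = lizzie.model_self()
```

Here `self_model` is a description produced by `lizzie`, while `lizzie.refer_to_self()` returns the organism itself; the two are different objects.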

I hope you are remembering that it can collect more data.

How does it do this?

This is what I keep asking.

What does the LFW have access to that the brain-body-decision-maker module does not? You keep saying it has additional resources, but you don’t say what they are.

You are stealing concepts throughout this response, as if “we” and “standard” and “correct” and “errors” and “new data” and “result” and “the brain” and “mechanism” and “my” and “research” and beliefs about those things and conclusions are something other than what creates the error in the first place. You are correcting a ruler you deem erroneous by appealing to correction by the same mechanism that produced the error in the first place.

You have nothing but “the computation” to appeal to in any and every case. All of those terms, under materialism, are just different colors of local areas of “the computation”. Here’s the corrected version of what you said:

You are using terminology from a map that does not apply to the terrain you insist exists, and that terminology hides the self-referential nonsense that would ensue if you were actually forced to use terms that fairly corresponded to the terrain you insist actually exists.

William, re-reading your extended metaphor, and trying to make sense of it…

Is it possible that you think that “under materialism” nothing exists except fundamental particles?

And that all our parsing of the world into higher-level objects and systems with properties must therefore be “borrowed” from non-materialism?

If so, I begin to see the problem….

No, William. You seem to have the wrong end of my stick completely.

Could you read the post below, and tell me whether it’s what you think?

Also it would be good if you could provide the definition of “computation” you are using.

It doesn’t matter how much data a physical system can collect, the amount and kind of data any particular physical system can collect, and how it is interpreted and applied, is entirely constrained by the nature of that particular idiosyncratic limited system. LFW has no such parameters.

No, and this is 100% irrelevant to the debate at hand.

OK, in that case I have no clue what your colour computation metaphor was about then.

Can you give your definition of computation, then? Because without that, I’m not easily making sense of what you are saying.

Could you address the other half of my post, then, please?

Computation = physics (as lawful patterns and/or random influences/events) causes everything that occurs, whether or not that computation includes deterministic, chaotic, non-linear or unpredictable results; determine, as in the ongoing process of physics determines (even if unpredictable) what occurs next in every case, including what one “wills”.

Physics computes (determines) who and what “Liz” is, what Liz believes, and all choices Liz makes, even if that computation includes non-linear, chaotic, and non-predictable events/outcomes. What Liz believes is a computation of physics and nothing else. If the physics commands Liz to bark like a dog and consider it wisdom, that is what Liz will do and believe.

If the path towards that barking, and that belief, necessarily requires a butterfly flap its wings on Tuesday in Brazil, and requires Liz to eat a pizza the night before, where that act and belief will not occur otherwise, then it can be correctly stated that butterfly wind and pizza were the necessary causes of Liz barking like a dog and believing it to be wisdom – and there is no way for Liz to escape that conundrum, because nothing exists outside of the process of physics to change it.

Unavailable to Liz is the choice to defy what physics commands her to believe or think or conclude, even if those beliefs and thoughts and conclusions are nonsensical. This makes Liz the computation of physics – nothing more, nothing less, no escape, no appeal.

Irrelevant. It is presumed to be able to. It is not material, so it would not be bound to physical limitations of time or space, nor physical explanations. That opens its options up considerably over anything available to the local biological automaton.

Everything that the biological automaton does not. It is immaterial, so it is not confined to the physical, programmed limitations of the biological automaton. If the biological automaton is physically programmed to not be able to understand X, LFW can understand it. If the BA is programmed to not be able to see X data, LFW can see it. If the BA can only reach X kinds of choices, LFW can choose Y. Whatever is not available to that particular BA at that particular time and location due to its materially limited nature is available to the LFW.

OK, well no wonder we have not been communicating. That’s not what I mean by “computation” at all. I actually gave my definition – why didn’t you point out that it was radically different from yours?

And why use the word “computation” if all you mean is “physics”, by which you seem to mean the physical properties of the universe?

Oh, well, I guess we’d better retrace.

The box on my diagram marked “brain/body decision-making module” is, in my view, what constitutes “me”. You seem to think that “me” consists of an additional module that I have called the LFW module.

I have argued that the brain/body decision-making module is capable of anything your LFW module can do and has the tools to do it.

Can you explain what additional resources the LFW module has access to that the brain/body decision-making module does not? In the purple box?

Because I’m not seeing any. If you’ve answered this already, please repeat or link to your answer because it’s possible I’ve misunderstood an answer you consider you’ve given but which did not strike me as an answer.

ETA: I see you’ve addressed this above. Hang on.

Do you have any evidence that this immaterial, magical thing actually exists, or is that impossible to demonstrate even in principle?

Well I don’t share your presumption 🙂

So your hypothesis is that the LFW is an entity separate from the body, that has access to information the body has no access to, and computational resources that are beyond the brain-body’s capacity, and can improve on decisions (or “decisions”) output by the brain-body?

Okaaay….

So, what evidence do you have to support this hypothesis?

It’s certainly not irrelevant, or why would you have posted it?

Let me try again, then:

Are you saying that for you, reality consists of platonic forms of things, like mountains, William, Lizzie, will, etc, but for materialists everything is just so much undifferentiated physical interactions, and that by borrowing your model of forms we are “stealing” the concept and plotting these things on our “flat” undifferentiated map?