As The Ghost In The Machine thread is getting rather long, but no less interesting, I thought I’d start another one here, specifically on the issue of Libertarian Free Will.

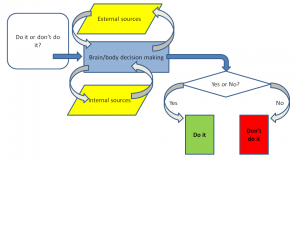

And I drew some diagrams which seem to me to represent the issues. Here is a straightforward account of how I-as-organism make a decision as to whether to do, or not do, something (round up or round down when calculating the tip I leave in a restaurant, for instance).

My brain/body decision-making apparatus interrogates both itself, internally, and the external world, iteratively, eventually coming to a Yes or No decision. If it outputs Yes, I do it; if it outputs No, I don’t.

Now let’s add a Libertarian Free Will Module (LFW) to the diagram:

There are other potential places to put the LFW module, but this is the place most often suggested – between the decision-to-act and the execution. It’s like an Upper House, or Revising Chamber (Senate, Lords, whatever) that takes the Recommendation from the brain, and either accepts it, or overturns it. If it accepts a Yes, or rejects a No, I do the thing; if it rejects a Yes or accepts a No, I don’t.
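As a minimal sketch of that revising-chamber logic (purely illustrative; the function and parameter names are made up for this example), the outcome depends only on the Recommendation and on whether the LFW module accepts or rejects it:

```python
def act(recommendation: bool, lfw_accepts: bool) -> bool:
    """Return True if I do the thing, False if I don't.

    recommendation: the brain's Yes (True) or No (False).
    lfw_accepts:    whether the hypothetical LFW module accepts
                    the Recommendation (True) or overturns it (False).
    """
    # Accepting passes the Recommendation through unchanged;
    # rejecting flips it.
    return recommendation if lfw_accepts else not recommendation


# Print the four cases described in the paragraph above.
for recommendation in (True, False):
    for lfw_accepts in (True, False):
        rec = "Yes" if recommendation else "No"
        verdict = "accepts" if lfw_accepts else "rejects"
        outcome = "do it" if act(recommendation, lfw_accepts) else "don't"
        print(f"Recommendation {rec}, LFW {verdict}: {outcome}")
```

The sketch says nothing about where `lfw_accepts` comes from, which is exactly the question that follows.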

The big question that arises is: on the basis of what information or principle does LFW decide whether to accept or reject the Recommendation? What, in other words, is in the purple parallelogram below?

If the input is some uncaused quantum event, then we can say that the output from the LFW module is uncaused, but also unwilled. However, if the input is more data, then the output is caused and (arguably) willed. If it is a mixture, to the extent that it depends on data, it is willed, and to the extent that it depends on quantum events, it is uncaused, but there isn’t any partition of the output that is both willed and uncaused.

It seems to me.

Given that an LFW module is an attractive concept, as it appears to contain the “I” that we want to take ownership of our decisions, it is a bit of a facer to have to accept that it makes no sense (and I honestly think it doesn’t, sadly). One response is to take the bleak view that we have “no free will”, merely the “illusion” of it – we think we have an LFW module, and it’s quite a healthy illusion to maintain, but in the end we have to accept that it is a useful fiction, but ultimately meaningless.

Another response is to take the “compatibilist” approach. (I really don’t like the term, because it’s not so much that I think Free Will is “compatible” with not having an LFW module, as that I think the LFW module is not compatible with coherence, so that if we are going to consider Free Will at all, we need to consider it within a framework that omits the LFW module.)

Which I think is perfectly straightforward.

It all depends, it seems to me, on what we are referring to when we use the word “I” (or “you”, or “he”, or “she” or even “it”, though I’m not sure my neutered Tom has FW).

If we say: “I” is the thing that sits inside the LFW, and outputs the decision, and if we moreover conclude, as I think we must, that the LFW is a nonsense, a married bachelor, a square circle, then is there anywhere else we can identify as “I”?

Yes, I think there is. I think Dennett essentially has it right. Instead of locating the “I” in an at best illusory LFW:

we keep things simple and locate the “I” within the organism itself:

And, as Dennett says, we can draw it rather small, and omit from it responsibility for collecting certain kinds of external data, or for generating certain kinds of internal data (for example, we generally hold someone “not responsible” if they are psychotic, attributing the internal data to their “illness” rather than “them”), or we can draw it large, in which case we accept a greater degree (or assign a greater degree, if we are talking about someone else) of moral responsibility for our actions.

In other words, as Dennett has it, defining the self (what constitutes the “I”) is the very act of accepting moral responsibility. The more responsibility we accept, the larger we draw the boundaries of our own “I”. The less we accept, the smaller “I” become. If I reject all moral responsibility for my actions, I no longer merit any freedom in society, and can have no grouse if you decide to lock me up in a secure institution lest the zombie Lizzie goes on a rampage doing whatever her inputs tell her to do.

It’s not Libertarian Free Will, but what I suggest we do when we accept ownership of our actions is to define ourselves as owners of the degrees of freedom offered by our capacity to make informed choices – informed both by external data, and by our own goals and capacities and values.

Which is far better than either an illusion or defining ourselves out of existence as free agents.

Given her expertise in the field, perhaps Liz can explain something that has always perplexed me, even when I was a materialist atheist.

As infants grow into adults, they learn to do all sorts of things with their body. Some of this, I would imagine, can be explained as instinctual, but it seems to me that most of it is in response to observing other people doing things. We see and hear them do things – walk, dance, play a musical instrument, speak, jump, etc. We call this learning, but I don’t understand how – from a materialist perspective – this kind of “learning” takes place.

When a baby or child observes anyone doing anything, all they are seeing is the conclusion of a massive amount of unseen physiological operations. When we say, “raise your hand” or “jump” or “spin”, all of those are just symbolic terms that represent an unimaginable sequence of integrated firings of synapses and nerve endings into muscle and coordinated muscle activations and interactions – none of which gets explained to the baby or child because the adult has no idea how to explain it. All the adult can do is lift their arm, say “lift your arm”, or whatever, and expect the baby or child to do the same thing.

But how is that possible? Neither has any comprehension of how any of it actually works; but just by seeing it, the child can “will” it, and begin refining the capacity to mimic their parent. What the child – or even the adult – experiences is nothing but a rather vague willful push that has absolutely no operational (at the neuron and muscle level) specificity whatsoever.

And then, by continuing this vague “willful” push, incredible refinements to the precise orchestration of one’s limbs and digits are possible – in fact, people can push themselves to do things that haven’t been done before, with zero understanding of the actual physiological mechanics involved, without any understanding of how their “willful push” connects to, activates or controls any physiological sequences.

How can a child that has no functional knowledge whatsoever of physiology simply apply a vague, inarticulate “willful push” (that hasn’t even been explained to it or connected to anything) to mimic an act, or just to try to get to an object it wants, and thereby generate all of the correct functional physiological sequences necessary to accomplish the new motion or act?

I’ve never understood how vague, inarticulate will can operate what is, for all intents and purposes, the most sophisticated, computerized piece of machinery in existence, with absolutely no understanding whatsoever of how it works or how it makes it work, and through that same will refine the operation of that device into accomplishing spectacularly precise exhibitions of dexterity, gymnastics, athletics, music, dance, etc.

The statement “LFW is logically incoherent” necessarily relies upon the premises of LFW in order to have any value. “It cannot exist” does the same thing.

For the statement “LFW is logically incoherent” to have any value other than rhetoric, logic must not only be an absolute, there must also be autonomous agencies capable of applying logic beyond the dictates of physics. If logic is just whatever any particular biological automaton holds it to be, then all keiths can mean here is “I personally reject the idea of LFW”.

That is all “LFW is logically incoherent” can mean under materialism: “I personally reject the idea of LFW,” because all logic and reason are just personal (local) computations of physics. If another local computation of physics produces the sensation and statement “Non-LFW is logically incoherent”, it is necessarily just as valid as the first statement, because there is no exterior or absolute means of arbitration between the two statements.

“It cannot exist”, under materialism, = “I reject that it exists”. Nothing more.

There is no evidence of such absolute standards.

In some cultures, FGM (female genital mutilation) is considered good, and in others it is considered bad. For the Brits, driving on the left side of the road is good, while that would be bad in the USA. Newton gave us a standard for determining the gravitational force that keeps a planet in its orbit, while Einstein gave us a different standard whereby there is no such gravitational force and instead the planet maintains its orbit because of the curvature of space-time.

We seem to manage without absolute standards.

I never claimed there was evidence for them. I claimed that arguments cannot be made without their assumption, whether the person making the argument realizes it or not – just as your statement above assumes an absolute standard of “evidence”, or else your claim would have no presumed value other than as emotional rhetoric.

We cannot manage without assuming they exist, whether or not they actually exist. Otherwise, you’d be able to make an argument without assuming that I should reach the same conclusions, by the same process, as you.

Those standards are the definitions and axioms assumed in the computation. They are not absolute standards. The definitions and axioms for modular arithmetic are different from those of ordinary arithmetic.
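A small illustration of that last point (just a sketch; the function names and the choice of modulus 12, as on a clock face, are assumptions for the example): the same two inputs give different sums depending on which definitions the computation assumes.

```python
def add_ordinary(a: int, b: int) -> int:
    """Addition under the usual rules of integer arithmetic."""
    return a + b


def add_modular(a: int, b: int, modulus: int = 12) -> int:
    """Addition under modular arithmetic: results wrap around at `modulus`."""
    return (a + b) % modulus


# Same inputs, different standard, different answer.
print(add_ordinary(9, 5))  # 14
print(add_modular(9, 5))   # 2
```

Neither answer is wrong; each is correct relative to the axioms assumed.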

It is part of our nature that we establish standards and follow them. You can probably find the same establishing and following of standards in other biological organisms (birds marking out and defending territory, for example).

This is nonsense. There is no “language of materialism” and they do not use “LFW terminology”. They communicate in natural language, which is a language of intentionality. And even the word “material” reflects human intentions on what we consider to be material.

More nonsense.

I used the ordinary word “evidence” as understood in normal English communication. I did not assume an absolute standard for “evidence”. I merely assumed sufficient agreement to allow communication. The meanings of ordinary words are inherently subjective. They do not depend on absolute standards. We depend only on evolving norms, not on anything absolute.

I hesitate to respond, since your posts have become endless repetition of this same basic thesis – ‘materialists’ (who naturally do not consider that anything exists outside the realms of the ‘physical’) cannot hold a legitimate debating position on any matter (“whether they themselves realise it or not”), because without something existing outside the physical, there can be no ‘absolute’ standard for [insert chosen concept here from morality, evidence, truth, necessity, consistency, falsehood etc].

If you had access to this arbiter, and could rely upon your perception of its conclusions, you might have a case, but (“whether you yourself realise it or not”) you are as clueless as the rest of us. It is no less valid for the materialist to make statements like “that is incorrect, faulty, imperfect etc”. You don’t go “I think that might be incorrect – I’ll just check with the Independent Arbiter. Yep, he says it’s bollocks too”. You just call upon high-falutin’ backup for your own opinions. All this about “doesn’t have to be true; it’s necessary to act as if it is true” is just (word) salad dressing.

It’s only necessary to act as if it’s true if there is an arbiter, because only then could the statement “without an arbiter there is no absolute truth” be absolutely true. But if there isn’t one, there really isn’t. I would consider that an ‘absolute truth’ too.

Both Neil’s and Alan’s responses above fall prey to the same recurring use of implications that can only come from LFW premises, without which they become nothing more than subjective, materialist rhetoric – essentially, the maple leaf finding fault with the fig leaf’s physiology by comparing it against its own physiology.

Neil appeals to “standard” or consensus definitions, et al., but fails to explain how his personal, idiosyncratic interpretation of such should be taken as anything more meaningful or significant than my views or definitions, when there is nothing to arbitrate our disagreement by other than the very ruler which he or I is using that found disagreement in the first place.

Alan is once again mistaking an argument of philosophy for an argument of fact.

Then we do not have sufficient agreement to allow for communication, because I reject your particular, idiosyncratic interpretation of any supposed “evolving norms” as having any meaningful merit by which I could arbitrate my views, or any significance by which I should arbitrate my views.

Nonsense. If there is an X (an actual state-of-affairs in the world), this set of philosophical considerations flows. If there is not, that set. The entirety (given that we don’t know the actual state-of-affairs) is itself also philosophical.

Philosophy and facts are somewhat intertwined.

I think it pretty obvious that I was fully cognisant of your belief that your conclusions are philosophical (not dependent on the actual state-of-affairs) when I said this:

“It’s only necessary to act as if it’s true if there is an arbiter, because only then could the statement “without an arbiter there is no absolute truth” be absolutely true. But if there isn’t one, there really isn’t. I would consider that an ‘absolute truth’ too.”

That too is a philosophical reflection, directly related to the ‘what-if’s of the case you are presenting. I merely disagree with both your premise and your conclusion. You can’t understand that, I must choke back my sobs and soldier on regardless. My tea is ready.

Then why are you wasting your time posting here (or anywhere else, for that matter)?

Anyone following the discussion can decide for themselves. There’s no “should be taken” to it. I don’t control what or how others think. There is no magical arbiter to which we can appeal. People disagree a lot. If there were an arbiter to whom we could appeal, there would be no need for these debates.

You seem to have forgotten that I do not operate under the materialist paradigm where our two idiosyncratic states of physics can have insurmountable programming variances.

I don’t operate under your strawman “materialist paradigm” either. It is a simple observation, available to all who are not blinded by ideology, that meanings are subjective, that they vary from person to person and from circumstance to circumstance, and that argument involves some degree of give and take (“the principle of charity”) to allow for this variation in meaning.

I think you are addressing Allan but I also am aware that you -I was going to say believe but perhaps that’s too strong for what you write- think you are making an argument from some objective premise not based on reality, fact or shared experience. Why you feel you need to do this escapes me but it is utterly pointless and unconvincing.

Is WJM not saying that by his own norms, axioms, definitions, what-have-yous, he would be wrong ever to admit that he was wrong?

And yet, you keep framing your responses in terms of LFW, as if people are “free” if not “blinded by ideology”, and that the only people who disagree with you are so blinded – as if free will, autonomous entities can escape their physically determined conclusions and compare what you say against an absolute standard via an absolute arbiter.

OK, that’s certainly my field. I’d love to do it a bit more justice in a separate thread, but that might have to wait.

A lot of imitative behaviour seems to be hard-wired. One trick you can do with a very small baby is stick your tongue out at it 🙂 It will often stick its tongue out back, even though at that age it doesn’t know what a tongue is, or even that the thing it has in its mouth is the same class of thing that you have in yours. And of course many other animals, especially birds, do the same kind of thing. Bird song in many species is learned from parents, but some baby birds only learn the song of their own species, even though they don’t learn it unless exposed to it.

Well, there is plenty of neural and muscle specificity, but not much conscious intention to imitate seems to be required. “Mirror neurons” are part of the answer. The dopamine system is another.

Well, as I said, these are very good questions, and there is a huge and interesting literature on the subject. I only deal with a small part of it (though I did my PhD in a motor control lab). But it was one of the reasons I came into the field, being fascinated specifically by how people learn musical skills (having acquired and taught them myself for much of my life).

I’ll try to dig up some papers.

Cheers

Lizzie

I’m not sure of the point here. I have long said that we have free will, and that physical determinism is probably false or incoherent anyway. Whether that’s the same as LFW, I cannot tell. I have never been able to make sense of LFW.

William,

No. To argue with you, I only need to believe that we share some ideas in common about what is rational. Those ideas need not be absolute or certain, though we hope they’re correct, of course.

As I said above, I am merely proceeding on the hope that we (and our audience) share ideas in common about what constitutes a rational and persuasive argument. We hold these ideas because we think they are likely to be valid and true, but we need not be certain of them, and we definitely don’t need to assume that we have AA2AA — “autonomous access to an absolute arbiter of truth”.

No, keiths. To argue with me, all you require is that your computation directs you to, for whatever non-linear, chaotic, unpredictable causal reason, which can include butterfly wind and pizza. Nothing else whatsoever is required – no beliefs, no shared ideas, no ideas about what is rational.

IOW, computation A outputs toward computation B because of whatever unpredictable causes that may have contributed in the non-linear process, and outputs whatever the computation commands, without respect to your list of “requirements”, as if you can predict what the computation should do and what material it should act upon.

You’re stealing concepts with every post.

WJM, posting at UD:

No, William, you have not been making that case. You have made that assertion, but you have utterly failed to make a case in support of that assertion.

How about a general answer?

Logically, I don’t see how vague, inarticulate will can be expected to properly operate the complex, unseen physicodynamic processes necessary to accomplish these kinds of tasks. How is it that I can simply impute vague will – which is basically the same towards any function and any desired end – and my body can somehow translate that vague, inarticulate, uninformed (about anything actually going on in the body) impulse into all of the precise chemical interactions necessary to achieve the target activity?

I intended to come back and comment on this. Better late than never.

There are two reasons that you find it hard to understand this:

1: You make the mistaken assumption that we are computers. We aren’t.

2: Your understanding of computers is already simplistic.

The captain of the rowing team does not need to know the details of how each crew member rows. He just calls out “pull”, and they all pull together on their oars. What the captain needs to do is perceive how well they are doing, so that he can ask the crew to pull harder or to ease off. The captain manages the feedback. The details of coordinated physiological operations are left for the crew to coordinate.

The child never needs to learn the physiological operations for playing a piano. What the child needs is to be able to tell whether he is doing it correctly. He/she must learn to perceive what the fingers are doing, partly with visual perception and partly with proprioception. The coordinated physiological operations can be left to the neurons and the muscles that the neurons control. No doubt, with practice, the neurons and muscles will get better. But the child mainly needs to have strong self-perceptions so as to provide the feedback. Learning is mostly perceptual learning. The details of the physiological operations will take care of themselves, as long as the self-perception is there to provide the needed feedback.

OK, I’ll give it a go 🙂

Because it isn’t vague. If I want a drink of beer, that is quite specific. What then happens is highly complex (I need to go to the cupboard, find a glass, find the beer opener, open it, pour it without spilling, or letting the head foam over, bring it back to my desk, then repeatedly lift it to my mouth, precisely calibrating the amount of effort I put into the lift to the amount left in the glass, so that I do not inadvertently throw it over my shoulder (as I once nearly did with a friend’s newborn, having become used to my own hefty 8-month lump, true story), or waste precious energy).

But that doesn’t make the top-level instruction “vague” – it just means that it sets off a cascade of decision-trees in my neural networks, each with their own role to play, and each competing with each other, the one whose pattern is most likely to deliver the beer at any given time tending to be reinforced (literally – with more neurons involved) and the loser being inhibited (literally – with GABAergic inhibitory synapses hyperpolarising the neurons). Some of this is learned by trial and error, the error signal being modulated by dopamine, but what is interesting is that we make “forward models” – the system makes a “guess” as it were, and then adjusts its next “guess” on the basis of how wrong the last guess was – when this goes wrong we end up with beer (or baby!) on the floor.
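To make the “guess, then adjust by how wrong you were” idea concrete, here is a toy sketch. It is a deliberately crude error-correction loop, not a model of what neurons or dopamine actually do, and the names, numbers and learning rate are all made up for illustration. The system starts with a lifting effort calibrated for a heavy load and corrects it, trial by trial, towards what a lighter load needs:

```python
def refine_guess(required_effort: float, first_guess: float,
                 learning_rate: float = 0.5, trials: int = 10):
    """Repeatedly adjust a guess in proportion to its last error.

    Returns the final guess and the list of absolute errors, one per trial.
    """
    guess = first_guess
    errors = []
    for _ in range(trials):
        # How wrong was the last guess? (the "error signal")
        error = required_effort - guess
        errors.append(abs(error))
        # Move the next guess part of the way towards the target.
        guess += learning_rate * error
    return guess, errors


# Used to lifting a hefty 8 kg load, now lifting something nearer 3 kg.
final_guess, errors = refine_guess(required_effort=3.0, first_guess=8.0)
print(f"final guess: {final_guess:.3f}")
print("errors per trial:", [round(e, 3) for e in errors])
```

With a learning rate of 0.5 the error halves on every trial. Note that nothing in the loop “knows” anything about muscles: it only needs a target and a measure of how far off the last attempt was.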