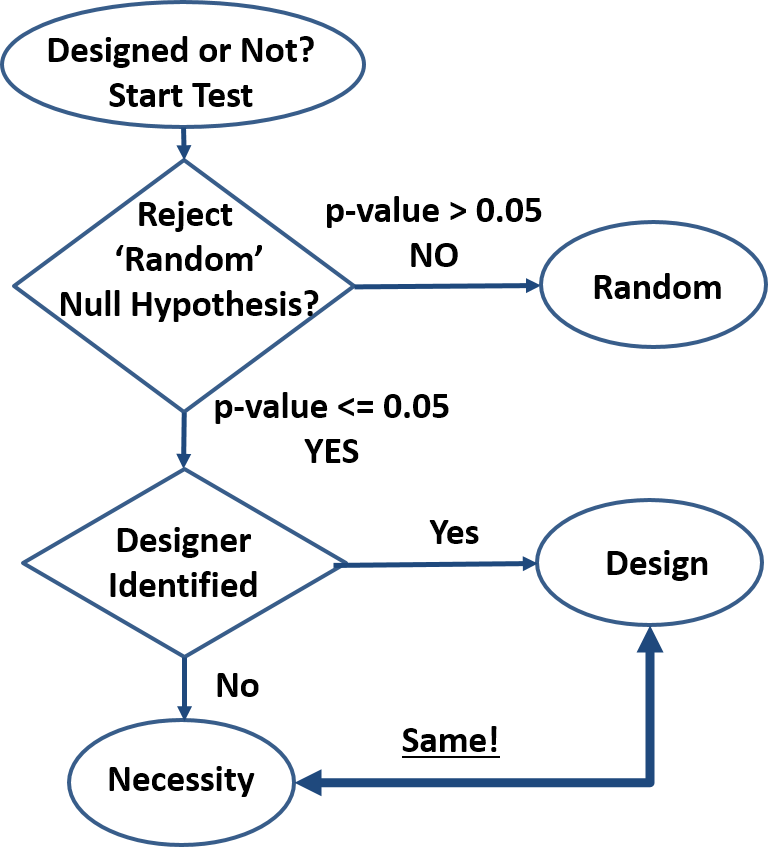

Design is order imposed on parts of a system. The system is designed even if the order created is minimal (e.g. smearing paint on cave walls) and even if it contains random subsystems. ‘Design’ is inferred only for those parts of the system that reveal the order imposed by the designer. For cave art, we can analyze the paint, the shape of the paint smear, the shape of the wall, the composition of the wall, etc. Each of these separate analyses may yield its own ‘designed’ or ‘not designed’ conclusion. The ‘design’-detection algorithm shown in the attached diagram can be applied to any system.

- How do we know something is not random? By rejecting the null hypothesis: “the order we see is just an artifact of randomness”. This method is well established and common in many fields of research (first decision block in the diagram). When we search for extraterrestrial life, archaeological artifacts, geologic events, organic traces, etc., we infer presence based on specific nonrandom patterns. A typical threshold (significance level) is 0.05, meaning “if randomness alone were at work, an outcome at least this extreme would occur with a probability of 5% or less”. The exact threshold is not critical, as probabilities quickly become extreme. For instance, given a 10-bit outcome (a set of 10 coin tosses), the probability that a random outcome matches a predetermined sequence is 1/1024, about 0.1%, well below the 5% threshold. A quick glance at biological systems shows extreme precision repeated over and over again, indicating essentially zero probability of system-level randomness. Kidneys and all other organs are not random, reproduction is not random, cell structure is not random, behavior is not random, etc.

- Is a nonrandom feature caused by design or by necessity? Once randomness has been excluded, the system analyzed must be either designed, as in “created by an intelligent being”, or a product of necessity, as in “dictated by physical/scientific laws”. Currently (second decision block in the diagram), a design inference is made when potential human/animal designers can be identified, and a ‘necessity’ inference is made in all other cases, even when there is no known necessity mechanism (no scientific law responsible). This design detection method is circumstantial and hence flawed, and can be improved only if a clearer distinction between design and necessity is possible. For instance, the DNA-to-protein algorithm can be written as software that everyone would recognize as designed when presented in any form other than as something observed in a cell. But when it is revealed that this code was discovered in a cell, dogmatic allegiances kick in, and those so inclined start claiming that this code is not designed, despite being unable to identify any alternative ‘necessity’ scenario.

- Design is just a set of ‘laws’, making the design-vs-necessity distinction impossible. Any design is defined by a set of rules (‘laws’) that the creator imposes on the creation. This is true for termite mounds, beaver dams, beehives, and anything made by humans, from pencils to operating systems. Product specifications describe the rules the product must follow to be acceptable to customers, software is a set of behavior rules that the machine obeys, and art is the sum of rules by which we can identify the artist, or at least the master’s style. When we reverse-engineer a product, we try to determine its rules, the same way we reverse-engineer nature to understand the scientific laws. And when new observations contradict the old product laws, we rewrite them, the same way we rewrite the scientific laws when appropriate (e.g. the change in scope of Newton’s laws). Design rules have exactly the same properties as scientific laws, with the arbitrary distinction that they are expected to be limited in space and time, whereas scientific laws are expected to be universal. For instance, to laboratory animals, the human-designed rules of the laboratory are no different from the scientific laws they experience. Being confined to their environment, they cannot verify the universality of the scientific laws, and neither can we, since we too are confined in space and time for the foreseeable future.

- Necessity is Design to the best of our knowledge. We have seen how design creates necessity (a set of ‘laws’). We have never confirmed necessity without a designer. We have seen that the design-necessity distinction is currently based arbitrarily on the identification of a designer of a particular design and on the expectation that scientific laws (necessity) are universal. Finally, we can see that natural designs cannot be explained by the sum of the scientific laws these designs obey. This is true in cosmology (galaxies/stars/planets), geology (sand dunes/mountains/continents), weather (clouds/climate/hydrology), biology (molecules/cells/tissues/organisms), and any other natural design out there.

- Scientific laws are unknowable. Only instances of these laws are known with any certainty. Mathematics is necessary but insufficient to determine the laws of physics, let alone the laws of chemistry, biology, behavior, etc. Each of the narrower scientific laws has to be backwards compatible with the broader laws, but does not derive from them. Aside from mathematics, which does not depend on observations of nature, the ‘eternal’ and ‘universal’ attributes attached to the scientific laws are justified only as simplifying working assumptions, yet too often they are incorrectly taken as indisputable truths. Any confirming observation of a scientific law is nothing more than another instance that reinforces our mental model; we will never know the actual laws, no matter how many observations we make. Conversely, a single contrary observation is enough to invalidate (or at least shake up) our model, as has happened historically with many hypothesized scientific laws.

- The “One Designer” hypothesis is much more parsimonious than a sum of many disparate, unknown laws, particles, and “random” events. Since the only confirmed source of regularity (aka rules or laws) in nature is intelligence, it takes a much greater leap of faith to declare design a product of a zoo of laws, particles, and random events than a product of intelligence. Furthermore, since laws and particles are presumably ‘eternal’ and ‘universal’, randomness would be the only differentiator of designs. But the “design by randomness” explanation is utterly inadequate, especially in biology, where randomness has not shown a capacity to generate design-like features in experiment after experiment. The non-random (how is that possible?) phantasm called “natural selection” fares no better, as “natural selection” is not a necessity and in any case would not be a differentiator. Furthermore, complex machines such as the circulatory and digestive systems of many organisms cannot be found in the nonliving, with one exception: those designed by humans. So-called “convergent evolution”, the design similarity of supposedly unrelated organisms, also confirms the ‘common design’ hypothesis.
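The arithmetic behind the 10-coin-toss example in the first bullet can be checked directly. This is a minimal Python sketch, assuming fair, independent tosses; the `match_probability` helper and the Monte Carlo cross-check are illustrative additions, not part of the detection method described above.

```python
import random

ALPHA = 0.05  # the conventional 5% significance threshold from the text


def match_probability(n_tosses: int) -> float:
    """Probability that n fair, independent coin tosses reproduce
    one particular sequence fixed in advance: (1/2) ** n."""
    return 0.5 ** n_tosses


for n in (5, 10, 20, 50):
    p = match_probability(n)
    print(f"{n:>2} tosses: p = {p:.3g}, below 0.05? {p <= ALPHA}")

# Monte Carlo cross-check of the 10-toss case: draw 10 random bits per
# trial and count how often they equal a fixed target pattern.
rng = random.Random(1)
target = rng.getrandbits(10)
trials = 200_000
hits = sum(rng.getrandbits(10) == target for _ in range(trials))
print(f"simulated {hits / trials:.5f} vs exact {match_probability(10):.5f}")
```

The exact value 1/1024 is about 0.098%, which the text rounds to 0.1%; even 5 tosses (1/32, about 3.1%) already fall below the 0.05 threshold.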

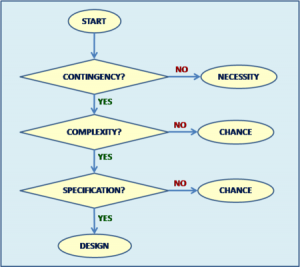

- How does this proposed Intelligent Design Detection Method improve on Dembski’s Explanatory Filter? The proposed filter is simpler and uncontroversial, with the likely (and important) exception of equating necessity with design, and it does not depend on vague concepts like “complexity”, “specification”, and “contingency”. Attempts to quantify “specified complexity” by estimating “functional information” help clarify Dembski’s Explanatory Filter, but still fall short because design need not implement a function (e.g. art), while ‘the function’ is arbitrary, as are the ‘target space’, ‘search space’, and ‘threshold’. Furthermore, ID opponents can easily counter the functional information argument by claiming that the ‘functional islands’ are linked by yet unknown, uncreated, eternal and universal scientific laws, so that “evolution” jumps from island to island, effectively reducing the search space from a ‘vast ocean’ to a manageable size.

Summary

- Design is order imposed on parts of a system

- A system is nonrandom if we reject the null hypothesis: “the order we see is just an artifact of randomness”

- The current design detection method, based on identifying the designer, is circumstantial and hence flawed

- Design is just a set of ‘laws’, making the design-vs-necessity distinction impossible

- Necessity is Design to the best of our knowledge

- Scientific laws are unknowable. Only instances of these laws are known with any certainty

- “One Designer” hypothesis is much more parsimonious compared to a sum of disparate and many unknown laws, particles, and “random” events

- This Intelligent Design Detection Method improves on Dembski’s Explanatory Filter

Pro-Con Notes

Con: Everything is explained by the Big Bang singularity, therefore we don’t need Intelligent Design.

Pro: How can a point of disruption where all our knowledge completely breaks down explain anything? To the best of our knowledge, Intelligent Design is responsible for that singularity and more.

Given that what creationists most often mean to do is present me with a false dichotomy (either “chance”–whatever they might mean, preferably if it allows for equivocation–or god-did-it), I always ask for clarifications and present them with several possibilities and why those possibilities might be wrongheaded. After that creationists prefer not to continue the conversation, as if their life depended on the false dichotomy and the potential equivocations.

Given my experience, I’d be very surprised if a creationist wanted to clarify meanings, when equivocation is the name of their game.

Also, anyone discussing those words has to take into account the way creationist debaters try to win-by-default by posing the two alternatives as either Design or change that is “purely by chance”, or “just random”. Of course any audience will automatically reject any suggestion that elaborate adaptations could arise by random change (such as random mutation).

So anyone who makes distinctions between “chance based” and “random” should have some qualifications in there to make clear that they are not saying that natural selection is a process that just randomly wanders around. Because if you have no such disclaimer you are lending yourself to the prevarications of those creationist debaters, and the misleading of their audiences.

People have been known to make predictions about systems that are unpredictable.

The very core of Darwin’s contribution is the idea that “accidental” happenings can look like the results of design when coupled with the harsh reality that structures that are less compatible with the surrounding environment are more likely to be eliminated over time.

That may be a “popularization”, but I think it sums up what the fuss is all about.

peace

Granted, but that was not in question.

I’m interested to know if modeling algorithms are necessary to make accurate predictions.

(I think) I would argue that when it comes to intelligent agents they are not.

peace

Natural selection is not really a process at all, is it? It’s just a way to say that things that are less compatible with the environment tend to be eliminated over time, all things being equal.

It’s not a lot different than the obvious notion that rivers tend to smooth out the rough edges in the river bed.

peace

There is a “process” that does that to rocks in a river. The people who say that there is no process called natural selection are just like people who say that there is no average tendency for rivers to smooth out rough edges of rocks. And they are just like the people who would say that stuff just happens to rocks in the river, without acknowledging that there is any tendency to smooth the rocks as opposed to roughen them.

So I’m glad you understand that natural selection is an “obvious notion”.

I think you are splitting hairs here. My earlier comment was about systems that we describe as predictable. We can predict that the sun will rise, but we usually don’t describe sunrise as a predictable system.

When Dembski acknowledged that he was no longer using the explanatory filter, responding to a comment of mine (under some alias or another) at Uncommon Descent, he identified a flaw in the EF: chance and regularity are not mutually exclusive. He did not mention that others had said the same for years. But he was of course aware of what they’d been saying, and was tacitly admitting that they were right.

(Dembski’s subsequent “best thing since sliced bread” recantation was obviously forced, and was less obviously tongue-in-cheek. He never again referred to the explanatory filter. He accurately explained what was wrong with the EF in the comment he wrote in response to my comment.)

He’s objecting to “algorithm,” not “model.” His big “thing” is that naturalism entails algorithmic relations, and that intelligence is non-algorithmic. This puts him in a category of two. Considering that one of the two posts under his own name at TSZ, FMM might as well begin stating his views fully and plainly, and linking to stuff he’s put online under his real name. (No one has plastered the real names of DiEb, walto, and KantianNaturalist all over the site, even though many of us know their names.)

IMO, this particular hair could just be the key to detecting design.

That is what I’m discussing as well.

The question I have is

Is there a place in a predictable system for an intelligent agent that can’t be reduced to an algorithm?

I do.

The motion of objects in our solar “system” is certainly predictable, and it’s algorithmic in the sense that future events can be fully calculated based on the present physical reality.

The question is whether all predictable systems are similarly algorithmic.

I would argue that some systems are predictable but not algorithmic. It’s when we encounter those systems that we infer design.

peace

Personally, I think that is nonsense.

We use algorithms to predict the motions. But the motions themselves are not algorithmic.

I am just not comfortable posting my real name on an internet forum like this. I’m sorry if you don’t like that.

Besides this is just a hobby for me and not my job.

I make no appeal to authority; my real name is irrelevant to my argument. Feel free to ignore me if that sort of thing is an issue for you.

I am in the middle of a very interesting exercise/experiment in improving temperature prediction by looking for places where forecasting algorithms diverge from recorded actuals, divergences that presumably result from the personal quirks of those recording the data.

I’ll be sure to post some results here for discussion when I’m done.

peace

How do you know this? How would you possibly demonstrate it?

If our algorithmic models fully describe a system what else is necessary? Doesn’t Occam’s razor come into play?


The model building.

The building of the model is not the model any more than the building of the system is the system.

Now we are in Gödel territory

By the way I think Gödel’s incompleteness is highly relevant to the topic of design detection.

Do you think we could construct an all purpose algorithm to build algorithmic models?

peace

This statement is totally false. Natural selection has no creative power whatsoever, not even as an analogy. The part of “evolution” that has any creative ability is the random mutations. Natural selection only says that some of the forms created by random mutations don’t survive. That is it. That is all natural selection does. It’s like the judges at an American Idol contest claiming they created great singers by sending home those they feel are bad singers. It’s completely delusional.

The problem is that evolutionists have been using this teleological language for so long that they have totally forgotten the real meaning of their own theory. It’s rampant in evolution-speak.

Without a system how can you build a system?

Haha, the other possibility is?

Mother Nature did it? We don’t know? It’s a secret? Go read a book? Emergence!

The algorithm applies to the model, not to reality itself.

No, we aren’t.

Your mistake.

No.

Yet somehow, in the case of stones in streams, you see a tendency to smooth the stones out, rather than roughen them. And consider that “obvious”. But don’t apply the same logic to evolution.

Joe, Joe, please read what I said. I said the natural selection part is not the part that is smoothing any stones out. It’s the smoothing-out-the-stone part that is smoothing out the stone.

Your analogy about natural selection is like saying that the people who go to view the stones, pick them out of the river, and put them on their coffee tables are the ones doing the smoothing of the stones.

Joe Felsenstein,

And believe me, Joe, I do understand why you often want to conflate the term “natural selection” with the vaguer term “evolution”: that way you hope to avoid the problem of random mutations doing the creating. It’s so much harder to make your sale if you have to explain that it’s accidents that do all the creation.

If a used car salesman is asked about the warranty on his cars, he would much prefer to say, “You know what I am going to do, I am going to give you a free under-coating, because I like you!”

You know that I did not suggest that you post your name at this site. Everything would be fine if you expressed your views as directly here as you do when posting under your own name. So here’s a simple suggestion: Assume that everyone already knows who you are. I believe that people will understand you much better if you post without any concern for concealing your identity.

Once upon a time, I published the best results anyone had ever reported for a heavily studied problem, forecasting annual sunspot counts. I combined the predictions of about 20 thousand neural nets. Know how I came by the class of neural nets that I applied? It was a programming error. I’d programmed neural nets so damned many times, I said to myself, “You don’t need to write out a design this time.” So I launched straight into coding, without a clear design, and screwed it up. When I looked at what I actually had implemented, I said, “Hmm, that’s interesting.” I’d have never thought of doing it on purpose.

I’m not aware anyone has attempted to disguise the fact that mutation is random in most senses of the word. What seems silly is to deny any role for the filtration process of NS in conditioning that which remains after the full round of variation-generation and sampling.

If you pass sand through a sieve, the grain sizes had already been determined before you started. The sieving process does nothing … and yet, you have a sieve full of the larger grains, and a bunch of the smaller ones below. Somehow, you have made the random nonrandom (in some senses of the word). Entirely, you would have it, ‘by accident’.
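The sieve analogy can be made concrete in a few lines. This is a minimal Python sketch, assuming uniformly distributed grain sizes and a single mesh size; the `sieve` helper, the 0.1 to 2.0 mm range, and the 1.0 mm mesh are hypothetical values chosen for illustration. The sieve alters no grain, yet the retained set is no longer a random sample of the input.

```python
import random
from statistics import mean


def sieve(grains, mesh):
    """Sort grains by size against a mesh opening.

    No grain is altered; grains at least as large as the mesh are
    retained and the rest fall through."""
    retained = [g for g in grains if g >= mesh]
    passed = [g for g in grains if g < mesh]
    return retained, passed


rng = random.Random(42)
# Hypothetical grain diameters in mm, drawn uniformly at random.
grains = [rng.uniform(0.1, 2.0) for _ in range(10_000)]
retained, passed = sieve(grains, mesh=1.0)

print(f"input:    {len(grains)} grains, mean {mean(grains):.2f} mm")
print(f"retained: {len(retained)} grains, mean {mean(retained):.2f} mm, "
      f"smallest {min(retained):.2f} mm")
print(f"passed:   {len(passed)} grains, largest {max(passed):.2f} mm")
```

The only nonrandom element is the mesh size, yet every retained grain shares a property that the input as a whole does not.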

I would bet that lots of folks here think that systems can arise by random mutation as long as it’s paired with the idea that things that are less compatible with the environment tend to be eliminated, all things being equal.

I would tend to think that it’s persons that create systems and they sometimes use systems to do so and sometimes they come up with them de novo out of nothing but their fruitful imagination.

peace

The model reflects reality, or it’s of no use.

Yes, we are 😉

I agree

But persons can and do just that. It’s sort of what separates us from algorithmic things like robots and computer programs and solar systems and RM/NS.

That is the golden nugget at the core of design detection.

peace

How do you know I don’t already do that?

That is the difference between you and me

I believe people would understand much better if they weren’t so afraid of being somehow trapped into admitting the obvious against their will.

😉

peace

Such as that evolution is a fact, and common descent writ large in the genes? I know, it’s a toughie.

And sometimes persons put their fruitful imagination to other uses as well.

Are “you” a chatbot?

Allan Miller,

The amazing creative powers of a sand sieve. Perfect analogy.

Isn’t it wonderful that in English literature all the creativity has already done its work, because all 26 letters of the alphabet are already in existence?

Mirrors reflect. Models don’t.

Models need to fit reality well enough for the way that we are using them. The problem of fitting is often non-algorithmic.

Another terrific analogy.

BTW, what is a wxcrrtlspflip?

I created it by removing all the letters I didn’t want.

They should just admit they don’t know the underlying causes and that chance is just a filler word expressing ignorance.

Reminds me of how I got started posting here at TSZ.

Mung,

You know how I post at TSZ? I just don’t post all the things I don’t want to say, and what ever is left, that is what ends up here.

Just like evolution.

So it’s fundamentally a matter of arrogance. But what else would we expect of engineers who pronounce on evolution, evidently without reflecting on the fact that they’re just as ignorant of evolutionary biology as evolutionary biologists are of their particular fields of engineering?

(Nikola Tesla never had an invention come to him in a vision before he had put a great deal of conscious thought into it. The absence of awareness of thought at the time one solves a problem is not evidence of the absence of thought. Why did Thomas Edison say that genius is one percent inspiration and ninety-nine percent perspiration? As Tesla himself noted, Edison didn’t have the theoretical foundation that Tesla had, and spent a lot of resources needlessly on dead-end lines of experimentation. I’ve had so many solutions pop into my head as I stood at the urinal that I’ve said that I’d have been a great computer scientist, if only I had pissed more. Of course, what always preceded my experience of Urinary Imagination Sampling was having put off a trip to the restroom while struggling with a problem. I’d already come up with the pieces of the solution. I was locked into a wrong way of putting them together, and needed nothing so much as to stop trying for a moment. As for my one flash of insight that was publicly witnessed, an off-the-top-of-my-head proof of what came to be known as the No Free Lunch Theorem, I had, for six years, been teaching discrete-math students to count functions. So I happened to be very well rehearsed in precisely the line of thinking that I needed for the proof. In fact, I was primed to pursue that line of thought. It took me about a second, literally, to respond to Aspi Jimmy Havewala’s claim that “future work” in his area of thesis research might lead to a generally superior function optimizer.)

It’s the height of arrogance when you say that philosophers don’t think straight about naturalism, and spew authoritatively the notion that naturalism entails an algorithmic theory of mind, not to mention belief in an algorithmic universe. Did Jesus whisper as much to you when you were praying? Did you sample your precious little imagination? Or is it simply that your “Biblical worldview” empowers you to hold forth sagely on all sorts of subjects that you’ve never bothered to study intensively?

I don’t know about other people, or about “evolutionists,” whatever that might mean, but I know several underlying “causes” for, say, mutations, and a couple of underlying “causes” for, say, allele fixation. That creationists have trouble with them, or that they might prefer to think of the whole as meaning “merely random,” or “purely by chance,” or “is just accidents,” or whatever other way they shield themselves from understanding, doesn’t mean that I don’t understand anything about evolution. It just means they’d rather not understand. The problem, therefore, is not with me, and, maybe, not even with “evolutionists,” whatever that might mean.

The analogy is not with ‘creation’, but with differential preservation of a random input. You still struggling with this? It’s dead easy.

In some areas of engineering, the output of a random source, input to a system in which something interesting happens, is called innovation. Pass random noise (innovation) through an appropriately shaped tube (or concatenation of cylindrical tubes varying in diameter), and you will get a recognizable speech sound out the end. It seems that phoodoo would localize the “creation” of the speech sound in the random noise source, and not the tube through which the noise passes.
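The noise-through-a-tube example is the source-filter idea from speech engineering. This is a minimal Python sketch, assuming a single two-pole resonator stands in for the tube (real vocal-tract models combine several such resonances, called formants); the 700 Hz resonance, r = 0.99, 8 kHz sampling rate, and the `resonator`/`band_power` helpers are illustrative choices, not figures from the comment. White Gaussian noise goes in, and the output's power concentrates near the resonance.

```python
import math
import random


def resonator(x, freq, r, fs):
    """Two-pole IIR resonator with poles at r*exp(+/-j*w0):
    y[n] = x[n] + 2 r cos(w0) y[n-1] - r^2 y[n-2]."""
    w0 = 2 * math.pi * freq / fs
    a1, a2 = 2 * r * math.cos(w0), -r * r
    y1 = y2 = 0.0
    out = []
    for sample in x:
        y = sample + a1 * y1 + a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


def band_power(signal, freqs, fs):
    """Average periodogram value |DFT(f)|^2 / N over the given frequencies."""
    n = len(signal)
    total = 0.0
    for f in freqs:
        w = 2 * math.pi * f / fs
        re = sum(s * math.cos(w * k) for k, s in enumerate(signal))
        im = sum(s * math.sin(w * k) for k, s in enumerate(signal))
        total += (re * re + im * im) / n
    return total / len(freqs)


fs = 8000
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(4000)]  # the random "source"
shaped = resonator(noise, freq=700, r=0.99, fs=fs)  # the "tube" (filter)

near = range(680, 721, 5)   # frequencies around the resonance
far = range(2980, 3021, 5)  # frequencies well away from it
for name, sig in (("noise", noise), ("shaped", shaped)):
    print(f"{name:>6}: near {band_power(sig, near, fs):10.2f}  "
          f"far {band_power(sig, far, fs):8.2f}")
```

The noise input has roughly equal power in both bands; after the filter, the band near 700 Hz dominates by orders of magnitude, even though the filter adds no randomness of its own.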

What makes phoodoo’s line particularly annoying for me is that Dembski and associates have always identified natural selection as the “creative force” of Darwinian evolution. Considering that ID has been promoted as science for about three decades, one would expect the ID community to have arrived at consensus on some core scientific claims. The fact of the matter is that every single ID proponent expects us to remember his or her particular opinions on this, that, and the other. This is all the more amazing when one recalls that the very first project of the ID movement was to rewrite a high-school science textbook, Of Pandas and People, to create the (now obviously false) impression that there already existed a science of intelligent design.

I would counter that a mirror is in a sense a “model” of the thing that it is imaging. Not the thing itself, but a useful approximation for a particular purpose. Perhaps “represent” would be a better term than “reflect”, but you get the drift.

I don’t disagree. It’s even more evidence of the vast difference between what persons can readily accomplish and what algorithms can do. It’s that difference that explains the design inference IMO.

peace

Might want to try another strategy.

What are you talking about?

It’s the opposite of arrogance: it’s humble awe at the creative power of persons versus the weakness of algorithms when it comes to design.

I never once claimed it was. Why are you so cranky? I don’t treat you that way. Why don’t you try and relax a little?

Imagination is not remotely the same thing as the absence of thought. In fact it’s active thought. It’s just not the output of some sort of imagination algorithm.

Because he found it to be true. I would agree.

The point is that algorithms are fundamentally incapable of the necessary inspiration part.

Algorithms are a very handy tool we can use to minimize the perspiration part, but tools are just that, tools, and a tool never designed a telephone.

peace

An underlying cause plus overriding chance equals chance.

There are lots of underlying causes for a particular coin to come up heads but the end result is still effectively random.

peace

Nope just a fellow who sometimes feels the need to point out that words have meanings.

peace

Did you just say that Of Pandas and People was a science textbook?

🙂

I would venture to guess that phoodoo like any rational individual would localize the “creation” of the speech sound in the mind of the fellow who designed the tubular contraption that the noise passes through.

Are you actually suggesting that the tubular contraption plus noise results in the creation of speech sounds?

If so, that explains a lot.

peace