ID proponents and creationists should not use the 2nd Law of Thermodynamics to support ID. Appropriate for Independence Day in the USA is my declaration of independence and disavowal of 2nd Law arguments in support of ID and creation theory. Any student of statistical mechanics and thermodynamics will likely find Granville Sewell’s argument and similar arguments not consistent with textbook understanding of these subjects, and wrong on many levels. With regrets for my dissent to my colleagues (like my colleague Granville Sewell) and friends in the ID and creationist communities, I offer this essay. I do so because to avoid saying anything would be a disservice to the ID and creationist community of which I am a part.

I’ve said it before, and I’ll say it again: I don’t think Granville Sewell’s 2nd law arguments are correct. An author of the founding book of ID, Mystery of Life’s Origin, agrees with me:

“Strictly speaking, the earth is an open system, and thus the Second Law of Thermodynamics cannot be used to preclude a naturalistic origin of life.”

Walter Bradley

Thermodynamics and the Origin of Life

To begin, it must be noted there are several versions of the 2nd Law. The versions are a consequence of the evolution and usage of theories of thermodynamics from classical thermodynamics to modern statistical mechanics. Here are textbook definitions of the 2nd Law of Thermodynamics, starting with the more straightforward version, the “Clausius Postulate”:

No cyclic process is possible whose sole outcome is transfer of heat from a cooler body to a hotter body

and the more modern but equivalent “Kelvin-Planck Postulate”:

No cyclic process is possible whose sole outcome is extraction of heat from a single source maintained at constant temperature and its complete conversion into mechanical work

How then can such statements be distorted into defending Intelligent Design? I argue ID does not follow from these postulates and ID proponents and creationists do not serve their cause well by making appeals to the 2nd law.

I will give illustrations first from classical thermodynamics and then from the more modern versions of statistical thermodynamics.

The notion of “entropy” was inspired by the 2nd law. In classical thermodynamics, the notion of order wasn’t even mentioned as part of the definition of entropy. I also note, some physicists dislike the usage of the term “order” to describe entropy:

Let us dispense with at least one popular myth: “Entropy is disorder” is a common enough assertion, but commonality does not make it right. Entropy is not “disorder”, although the two can be related to one another. For a good lesson on the traps and pitfalls of trying to assert what entropy is, see Insight into entropy by Daniel F. Styer, American Journal of Physics 68(12): 1090-1096 (December 2000). Styer uses liquid crystals to illustrate examples of increased entropy accompanying increased “order”, quite impossible in the entropy is disorder worldview. And also keep in mind that “order” is a subjective term, and as such it is subject to the whims of interpretation. This too mitigates against the idea that entropy and “disorder” are always the same, a fact well illustrated by Canadian physicist Doug Craigen, in his online essay “Entropy, God and Evolution”.

From classical thermodynamics, consider the heating and cooling of a brick. If you heat the brick it gains entropy, and if you let it cool it loses entropy. Thus entropy can spontaneously be reduced in local objects even if entropy in the universe is increasing.

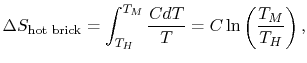

Consider the hot brick with a heat capacity of C. The change in entropy Delta-S is defined in terms of the initial hot temperature TH and the final cold temperature TM:

$$\Delta S = \int_{T_H}^{T_M} \frac{C\,dT}{T} = C \ln\frac{T_M}{T_H}$$

Supposing the hot temperature TH is higher than the final cold temperature TM, then Delta-S will be NEGATIVE, thus a spontaneous reduction of entropy in the hot brick results!

The following weblink shows the rather simple calculation of how a cold brick, when put in contact with a hot brick, spontaneously reduces the entropy of the hot brick even though the joint entropy of the two bricks increases. See: Massachusetts Institute of Technology: Calculation of Entropy Change in Some Basic Processes

So it is true that even if universal entropy is increasing on average, local reductions of entropy spontaneously happen all the time.
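For anyone who wants to check the arithmetic, here is a minimal Python sketch of the two-brick calculation. It assumes two identical bricks with a constant heat capacity and no heat lost to the surroundings; the numbers (1000 J/K, 400 K, 300 K) and the function name are illustrative assumptions, not values from the MIT notes.

```python
import math

def brick_entropy_changes(heat_capacity, t_hot, t_cold):
    """Entropy changes (J/K) when two identical bricks reach a common temperature.

    Assumes constant heat capacity (J/K) and no heat exchange with the surroundings.
    """
    t_final = (t_hot + t_cold) / 2.0                      # equal heat capacities -> mean temperature
    ds_hot = heat_capacity * math.log(t_final / t_hot)    # hot brick cools: negative
    ds_cold = heat_capacity * math.log(t_final / t_cold)  # cold brick warms: positive
    return ds_hot, ds_cold, ds_hot + ds_cold

# Illustrative (assumed) values: C = 1000 J/K, bricks at 400 K and 300 K.
ds_hot, ds_cold, ds_total = brick_entropy_changes(1000.0, 400.0, 300.0)
print(f"hot brick:  {ds_hot:+.1f} J/K")
print(f"cold brick: {ds_cold:+.1f} J/K")
print(f"combined:   {ds_total:+.1f} J/K")
```

The hot brick's entropy change comes out negative while the combined change is positive, which is the whole point of the example.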

Now one may argue that I have used only notions of thermal entropy, not the larger notion of entropy as defined by later advances in statistical mechanics and information theory. But even granting that, I’ve provided a counterexample to claims that entropy cannot spontaneously be reduced. Any first-semester student of thermodynamics will make the calculation I just made, and thus it ought to be obvious to him that nature is rich with examples of entropy spontaneously being reduced!

But to humor those who want a more statistical flavor to entropy rather than classical notions of entropy, I will provide examples. But first a little history. The discipline of classical thermodynamics was driven in part by the desire to understand the conversion of heat into mechanical work. Steam engines were quite the topic of interest….

Later, there was a desire to describe thermodynamics in terms of classical (Newtonian-Lagrangian-Hamiltonian) Mechanics whereby heat and entropy are merely statistical properties of large numbers of moving particles. Thus the goal was to demonstrate that thermodynamics was merely an extension of Newtonian mechanics on large sets of particles. This sort of worked when Josiah Gibbs published his landmark treatise Elementary Principles in Statistical Mechanics in 1902, but then it had to be amended in light of quantum mechanics.

The development of statistical mechanics led to the extension of entropy to include statistical properties of particles. This has possibly led to confusion over what entropy really means. Boltzmann tied the classical notions of entropy (in terms of heat and temperature) to the statistical properties of particles. This was formally stated by Planck for the first time, but the name of the equation is “Boltzmann’s entropy formula”:

$$S = k_B \ln W$$

where “S” is the entropy, k_B is Boltzmann’s constant, and “W” (omega) is the number of microstates (a microstate is roughly the set of positions and momenta of all the particles in classical mechanics; its meaning is more nuanced in quantum mechanics). So one can see that the notion of “entropy” has evolved in physics literature over time….

To give a flavor for why this extension of entropy is important, I’ll give an illustration of colored marbles that illustrates increase in the statistical notion of entropy even when no heat is involved (as in classical thermodynamics). Consider a box with a partition in the middle. On the left side are all blue marbles, on the right side are all red marbles. Now, in a sense one can clearly see the arrangement is highly ordered since marbles of the same color are segregated. Now suppose we remove the partition and shake the box up such that the red and blue marbles mix. The process has caused the “entropy” of the system to increase, and only with some difficulty can the original ordering be restored. Notice, we can do this little exercise with no reference to temperature and heat such as done in classical thermodynamics. It was for situations like this that the notion of entropy had to be extended to go beyond notions of heat and temperature. And in such cases, the term “thermodynamics” seems a little forced even though entropy is involved. No such problem exists if we simply generalize this to the larger notion of statistical mechanics which encompasses parts of classical thermodynamics.

The marble illustration is analogous to the mixing of different kinds of distinguishable gases (like Carbon Dioxide and Nitrogen). As with the marbles, no heat is involved, yet entropy increases. Though it is not necessary to go into the exact meaning of the equation, for the sake of completeness I post it here. Notice there is no heat term “Q” for this sort of entropy increase:

$$\Delta S_{mix} = -nR \sum_i x_i \ln x_i$$

where R is the gas constant, n the total number of moles, xi the mole fraction of component i, and Delta-Smix is the change in entropy due to mixing.
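To put a number on it, here is a small Python sketch that evaluates the standard ideal-mixing expression, Delta-Smix = -nR times the sum of xi ln xi. It assumes ideal gases at the same temperature and pressure; the function name and the one-mole amounts are made up for illustration.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)

def entropy_of_mixing(moles):
    """Ideal entropy of mixing (J/K) for a list of mole amounts, one per gas."""
    n_total = sum(moles)
    fractions = [n / n_total for n in moles if n > 0]  # mole fractions x_i
    return -n_total * R * sum(x * math.log(x) for x in fractions)

# Illustrative (assumed) case: 1 mol of Carbon Dioxide mixed with 1 mol of Nitrogen.
ds_mix = entropy_of_mixing([1.0, 1.0])
print(f"Delta-Smix = {ds_mix:.2f} J/K")
```

For an equal two-gas mixture the sum collapses to 2R ln 2, so the result is positive even though no heat term appears anywhere in the calculation.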

But here is an important question: can mixed gases, unlike mixed marbles, spontaneously separate into localized compartments? That is, if mixed red and blue marbles won’t spontaneously order themselves back into compartments of all blue and all red (and thus reduce entropy), why should we expect gases to do so? This would seem impossible for marbles (short of a computer or intelligent agent doing the sorting), but it is a piece of cake for nature even though there are zillions of gas particles mixed together. The solution is simple. In the case of Carbon Dioxide, if the mixed gases are cooled well below the condensation point of Carbon Dioxide (about -78.5 Celsius at atmospheric pressure, where it freezes out as dry ice; it liquefies near -57 Celsius only at pressures above about 5 atmospheres) but kept above -195.8 Celsius (the boiling point of Nitrogen), the Carbon Dioxide will condense but the Nitrogen will not. Thus the two species will spontaneously separate, order spontaneously re-emerges, and the entropy of the local system spontaneously reduces!

Conclusion: ID proponents and creationists should not use the 2nd Law to defend their claims. If ID-friendly dabblers in basic thermodynamics will raise such objections as I’ve raised, how much more will professionals in physics, chemistry, and information theory? If ID proponents and creationists want to argue that the 2nd Law supports their claims but have no background in these topics, I would strongly recommend further study of statistical mechanics and thermodynamics before they take sides on the issue. I think more scientific education will cast doubt on evolutionism, but I don’t think more education will make one think that 2nd Law arguments are good arguments in favor of ID and the creation hypothesis.

Hear, hear.

stcordova on September 2, 2012 at 3:21 am said:

Gaskell’s example in Ch. 4.8 has nothing to do with an ideal gas. He discusses microstates of a crystal at a low temperature, in which atoms are perfectly ordered but may be of two distinguishable types. The atoms interact strongly with one another in order to form a periodic crystal. This changes the phase space profoundly. In an ideal gas, an atom can be anywhere inside the container. Energy restrictions only affect its momentum, but not the coordinate. In a crystal, an atom is restricted to stay in certain low-energy regions, maintaining a certain distance from its neighbors. At low energies, the discrete nature of the phase space comes into play and one only considers nearly perfect periodic arrangements of atoms with imperfections in the form of long-wavelength compressions and rarefactions (phonons). If all atoms were of the same type then the number of microstates in this system would be Ω1, or what Gaskell calls “thermal” entropy Ωth.

Let us now throw in two distinct types of atoms A and B and assume that the interactions between them are the same, independent of the type. This means that the atoms can’t be, say, Na and Cl. A better example would be identical atoms whose nuclear magnetic moments point up or down. In this case, a full description of a microstate of the crystal requires a specification of the phonon state (compressions and rarefactions of the lattice) and also identifying which atoms are of the A and B types. The two pieces of information (the state of the lattice and the atom types) are independent since, by assumption, the atoms interact with each other regardless of the type. The number of microstates is then Ω1Ω2, where Ω2 is the number of configurations with given numbers of A and B atoms. This number Ω2 is what Gaskell calls Ωconf. Ω1 depends on the system’s energy E, whereas Ω2 is insensitive to it. On the other hand, Ω2 depends on the number of atoms of type A or, alternatively, on the net magnetic dipole moment of the nuclei M, whereas Ω1 does not. The net entropy can be computed as

$$S = k_B \ln(\Omega_1 \Omega_2) = k_B \ln \Omega_1 + k_B \ln \Omega_2 = S_1 + S_2$$

Gaskell’s terminology is imprecise. Both entropies S1 and S2 can give rise to thermal effects. Magnetic refrigeration is based precisely on the “configurational” part of entropy S2. A better choice would be to call S1 entropy associated with atomic motion and S2 entropy associated with the state of nuclear spins. That would be a mouthful, so I understand why Gaskell chose his terms. I personally would use the term “configurational” but I would not use the term “thermal,” for the reasons stated at the beginning of this paragraph.
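The way independent counts multiply while their entropies add can be checked with a short Python sketch. Everything numeric here is a hypothetical stand-in: the lattice count `omega_1` is simply assumed, and `omega_2` is taken as the number of ways to place 500 type-A atoms on 1000 sites.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant, J/K

def configurational_microstates(n_sites, n_type_a):
    """Ways to choose which of n_sites lattice sites hold type-A atoms."""
    return math.comb(n_sites, n_type_a)

def entropy_from_microstates(omega):
    """Boltzmann entropy S = k_B ln(omega) for a microstate count omega."""
    return K_B * math.log(omega)

# Hypothetical numbers, chosen only for illustration.
omega_1 = 10**50                                  # assumed count of lattice (phonon) states
omega_2 = configurational_microstates(1000, 500)  # A/B arrangements on 1000 sites

s_1 = entropy_from_microstates(omega_1)
s_2 = entropy_from_microstates(omega_2)
s_total = entropy_from_microstates(omega_1 * omega_2)

print(f"S1 + S2 = {s_1 + s_2:.6e} J/K")
print(f"S total = {s_total:.6e} J/K")
```

The two printed values agree to rounding, because the logarithm turns the product Ω1Ω2 into a sum.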

You might also want to contrast the “scholarship” of Henry Morris with what Clausius actually wrote.

This is from Rudolf Clausius in Annalen der Physik und Chemie, Vol. 125, p. 353, 1865, under the title “Ueber verschiedene für de Anwendung bequeme Formen der Hauptgleichungen der mechanischen Wärmetheorie.” (“On Several Convenient Forms of the Fundamental Equations of the Mechanical Theory of Heat.”)

It is also available in A Source Book in Physics, Edited by William Francis Magie, Harvard University Press, 1963, page 234.

(Note: Q represents the quantity of heat, T the absolute temperature, and S will be what Clausius names as entropy)

Clausius apparently translates η τροπη from the Greek as Umgestaltung and not Umdrehung. However, this doesn’t matter because he modified the word to entropy for the reasons he indicated.

On the other hand, here is Henry Morris’s pseudo-scholarship back in 1973.

Thank you, that is very informative and invaluable. And thanks for taking the time to review what Gaskell said.

[below is my understanding so far, corrections are welcome]

My understanding of entropy is that it is the logarithm of microstates, or as Olegt put it:

Symbolically, Boltzmann and Planck described entropy in the following manner through statistical mechanics:

$$S = k_B \ln \Omega$$

where S is the entropy, Ω is the number of microstates, and k_B is Boltzmann’s constant.

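By way of extension, the change in entropy can be related to the change in the number of microstates:

$$\Delta S = k_B \ln \Omega_{final} - k_B \ln \Omega_{initial} = k_B \ln\frac{\Omega_{final}}{\Omega_{initial}}$$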

Perhaps one thing to note is that the number of microstates increases with the number of particles involved (all other factors being held equal).

In contrast to the description of entropy in statistical mechanics, we have the description of entropy in classical thermodynamics (pioneered by individuals like Joule and Clausius):

$$\Delta S = \int \frac{dQ}{T}$$

where T is temperature and Q is heat.

My understanding is that the breakthrough was tying classical thermodynamics (as pioneered by individuals like Clausius) to statistical mechanics (pioneered by individuals like Boltzmann and Gibbs). Symbolically:

$$\int \frac{dQ}{T} = k_B \ln\frac{\Omega_{final}}{\Omega_{initial}}$$

in the appropriate domains. There are some examples where this equality will not hold, such as the entropy of mixing, but that the two notions could ever be related was remarkable. Some have likened this breakthrough linking thermodynamics to mechanics to Einstein relating energy to mass and the speed of light:

$$E = mc^2$$

To help dispel the notion that increasing entropy implies increasing disorder, let us take familiar examples:

1. An embryo developing into an adult. As the individual acquires more weight (mass) it acquires more entropy since the number of microstates available to the individual increases with the number of particles in the individual, and as the number of his microstates increases so does his entropy. So as the individual transforms dead nutrients into more biologically “ordered” molecules, his entropy increases! In fact, trying to reduce his entropy may kill him (via starvation).

2. Factories which make computers and automobiles and airplanes. As energy is put into the manufacture of these “ordered” systems, entropy of the universe of necessity goes up.

Examples of order increasing as entropy increases abound. Increase in entropy, in many cases, is a necessary but not sufficient condition to create “order” (however one wishes to define the subjective notion of “order”).

In sum, it could be argued that existence and increase of biological order are correlated with increases in universal entropy, and in some cases even local entropy, hence the 2nd law of thermodynamics cannot be used as an argument against evolution because clearly increases in order are often accompanied by increases in entropy.

HT:

Mike Elzinga for the increasing weight example.

My favorite analogy to demonstrate that entropy and disorder are not synonymous: Imagine two decks of cards, freshly purchased from the store. Card-decks come from the factory fully sorted, with each suit segregated from all the other suits, and each suit’s cards sorted from Ace to King within the suit; both card-decks, therefore, have equal amounts of order (and equal amounts of disorder, for that matter), and they both have equal thermodynamic entropy.

Take one deck, call it Deck A, and put it in your freezer. As its temperature drops, so, too, does its thermodynamic entropy decrease. Will Deck A’s level of disorder decrease to the same degree as its thermodynamic entropy decreases?

Take the other deck, call it Deck B, and shuffle it. Clearly, Deck B’s disorder has increased. Will Deck B’s level of thermodynamic entropy increase to the same degree as its disorder increases?
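A rough Python sketch puts numbers on the two decks. It rests on assumptions that are not part of the analogy itself: a deck mass of 0.1 kg, a specific heat for paper of about 1300 J/(kg K), cooling from 293 K to 255 K, and (if one insists on assigning an entropy to shuffling at all) counting a shuffled deck as one of 52! equally likely orderings.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant, J/K

def shuffle_entropy(n_cards=52):
    """k_B ln(n!) for n distinguishable cards; ln(n!) = lgamma(n + 1)."""
    return K_B * math.lgamma(n_cards + 1)

def cooling_entropy_change(mass_kg, specific_heat, t_start_k, t_end_k):
    """Thermal entropy change m c ln(T_end / T_start), constant specific heat."""
    return mass_kg * specific_heat * math.log(t_end_k / t_start_k)

# Assumed, illustrative values (not from the thread).
DECK_MASS_KG = 0.1
PAPER_SPECIFIC_HEAT = 1300.0  # J/(kg K), rough figure for paper

ds_shuffle = shuffle_entropy()                       # Deck B
ds_freezer = cooling_entropy_change(DECK_MASS_KG,    # Deck A
                                    PAPER_SPECIFIC_HEAT, 293.0, 255.0)

print(f"Deck B (shuffled): {ds_shuffle:.3e} J/K")
print(f"Deck A (freezer):  {ds_freezer:.3e} J/K")
print(f"ratio of magnitudes: {abs(ds_freezer) / ds_shuffle:.1e}")
```

Under these assumptions the freezer changes Deck A's thermodynamic entropy by more than twenty orders of magnitude over anything the shuffle could contribute, while leaving the card order untouched.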

I don’t expect Mr. Cordova to ‘play nice’ — to my mind, that Darwin-abused-animals episode tells us everything one might wish to know about Mr. Cordova’s attitude towards truth-telling — but perhaps other people might find my analogy useful in some way.

This is where you will discover what I have often referred to as the fundamental misconception underlying all of ID/creationism.

Matter condenses; it condenses at all levels of complexity. In order for matter to condense and stick together, energy must be released; the second law of thermodynamics is central to this process. If energy couldn’t be spread around, interactions among matter of all forms would be totally elastic; there would be nothing but a quark/gluon “plasma.”

And now comes the really interesting part. Everything in physics, chemistry, and biology points to life emerging from the condensation of matter. We don’t know what actually happened yet; but there are no laws of physics and chemistry that stand in the way. All the complexities of condensed matter and organic chemistry and the facts of biology point inexorably in that direction. There may be many ways this can happen. It may be happening right under our noses but we haven’t spotted it yet.

Henry Morris wanted the second law of thermodynamics to be that fundamental law of physics that stood in the way of the origins of life and evolution in order to preserve a rather restricted set of sectarian beliefs. However, once you dig into it, you discover that the facts are entirely different; the second law of thermodynamics is required for the existence of life.

Once one really grasps that fact, all of the attempts of ID to fix creationist misconceptions about thermodynamics and the second law, by resorting to “information” and “complex specified information” and “intelligent design,” go right out the window. It is pretty clear that matter does this on its own; and that is a really interesting fact about our universe. How various religions handle this is different from religion to religion; there are literally thousands of religions and interpretations of religions among humans.

ID/creationists will not grasp this. They have had the wrong ideas about thermodynamics and the behavior of matter already burned into their amygdalas going all the way back to at least Henry Morris and Duane Gish, if not to A.E. Wilder-Smith. This will be not only your battle with other ID/creationists; it will be a battle within yourself. Some sectarian beliefs simply cannot accommodate this fundamental fact of nature. But who are humans to be dictating to deities what deities should have done in making universes?

This is part of what you will encounter if you have the honesty and courage to dig deeper. Other parts of this will involve your long-established relationships with your sectarian cohorts. It’s the road you will have to travel if you really want to dig into that 50-year pile of misconceptions and misrepresentations of scientific concepts you inherited from the ID/creationist community. There are many more in that pile.

You have a reputation, however, that suggests to many on the internet that this may not go in the direction of enlightenment. Even your cohorts over on UD are suspicious of your focus on yourself. But we shall see; sometimes people learn something important enough that they really do change.

It’s not for me to tell you what to do. I don’t tell people how to deal with deities. Everybody starts at a different place and travels whatever paths open up for them in the course of their lives. Nobody has time to explore everything even if they want to.

It’s a very nice example. It contains both kinds of elements about which people discuss order. One is a legitimate example of order, by convention, of things that don’t interact among themselves, the other being an example of something to which people erroneously attribute order/disorder but which interact strongly among themselves.

Sal, I don’t think it’s “ceremonially in jest” at all, you would give parity / legitimacy to a website that suppresses opposing viewpoints, and I don’t think that’s what we’re about. You’ve been afforded more privilege and courtesy than your reputation warrants. So I’d like you to reconsider what you’ve said, or I’ll ask Liz to reconsider your moderation / posting privileges. Even odious planks like KF deserve a forum. Don’t be using this as a forum to score points with your UD buddies. I don’t think that’s what this community is about.

Rich makes an excellent point, Sal. I’d make it even more strongly: The rules of this blog do not allow arbitrary banning. In that Lizzie demonstrates a far greater respect for open discussion than has ever been shown by any UD moderator. Suggesting that the two fora are equivalent, even in jest, is insulting.

Uh-oh, Mike and Oleg. Your student is trying to run again before learning to crawl:

A Designed Object’s Entropy Must Increase for Its Design Complexity to Increase – Part 1

🙂

Yeah; that’s what I figured he might do. Judging from his “disagreements” with the concepts in statistical mechanics, it occurred to me that he might be looking for a “new and improved” thermodynamics argument for ID. That could end up being quite funny.

One has to wonder if any of those characters over at UD will have anything to say in response. Those KF and BA77 characters may try to dump a few semi-trailer loads of copy/paste “refutation” on him.

I think I’ll just sit back and watch.

I just glanced over there and between Sal and kairosfocus it’s getting fractally wrong at an exponential pace. It’s like watching an inadvertent Gish Gallop where even the people doing it have lost control.

That’s making a virtue of necessity, I think. The time required to correct all of the misconceptions already present would be prohibitive.

I don’t have high hopes for this project. gpuccio gets it totally wrong when he says

As we have discussed more than once here, Shannon’s entropy does not apply to a single object (such as a string). It describes an ensemble. Complexity of a single string is usually evaluated in terms of Kolmogorov’s complexity.

And this passage should earn its author 10 points on the crackpot scale (see number 10):

And Sal surprises no one. Gentlemen, don’t feed the parasites willingly.

🙂

olegt wrote:

As we have discussed more than once here, Shannon’s entropy does not apply to a single object (such as a string). It describes an ensemble. Complexity of a single string is usually evaluated in terms of Kolmogorov’s complexity.

My understanding is that even that is not quite so. If I understand correctly (and I’m sure I’ll quickly be told if I don’t) the Kolmogorov argument identifies the string’s complexity with the length of the shortest program that could be written to compute that string. However no particular programming language is specified for that — the argument just shows that there are at most 4 programs that are 2 bits long, 8 that are 3 bits long, etc. And it then notes that most numbers have programs almost as long as they are. It is a very clever and correct argument but leaves us without a way to actually compute the number for a single string. So it too is defined with respect to the whole set of possible strings and not well-defined for a single one.

There is a similar issue with respect to image compression — there cannot be more than 1024 images that compress down to a 10-bit string. But which ones they are is left undetermined by that argument.

They seem to be trying to use information and/or entropy to say something about the impossibility of the origin of a self-replicating system, or something about “islands of function” in a genotype space.

The one thing one can say is that neither of these have anything to do with the arguments put forward by Henry Morris or Granville Sewell. (Though to the extent that those latter arguments were incoherent they support anything and everything — perhaps there is a principle that all wrong arguments imply each other).

Actually, Kolmogorov complexity is defined with respect to a specified description language, so it does have a definite value for any particular string and language pair. Of course, the KC of a string with respect to one language may differ greatly from its KC with respect to a different language, and if string A has greater KC than string B with respect to one language, it’s always possible to find another description language in which string A’s KC is less than that of string B.

OK, I stand corrected. The distribution of KC values can be found without knowing what the description language is, so we can say without knowing that language that most strings have KC almost as large as they are long.
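The counting argument in this exchange can be sketched in a few lines of Python. The function names are made up for illustration, and the only assumption is that descriptions are binary strings and each description produces at most one output.

```python
from fractions import Fraction

def descriptions_of_length(bits):
    """Number of distinct binary descriptions that are exactly `bits` long."""
    return 2 ** bits

def descriptions_up_to_length(bits):
    """Number of distinct binary descriptions of length 0 through `bits`."""
    return 2 ** (bits + 1) - 1

def max_fraction_compressible(string_bits, saved_bits):
    """Upper bound on the fraction of strings of length `string_bits`
    that have a description at least `saved_bits` shorter than themselves."""
    short = descriptions_up_to_length(string_bits - saved_bits)
    return Fraction(short, 2 ** string_bits)

print(descriptions_of_length(2), descriptions_of_length(3), descriptions_of_length(10))
bound = max_fraction_compressible(100, 10)
print(f"at most {float(bound):.5f} of 100-bit strings can be shortened by 10 bits or more")
```

The bound holds for any description language, which is why the distribution can be stated without naming one; it still says nothing about which particular strings are the compressible ones.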

The attempt to connect “information” with the entropy of a thermodynamic system doesn’t buy anything new. One doesn’t “know” any more than what one gets from the entropy. Attempting to say that high entropy in a specific system reflects “ignorance” of something doesn’t say anything more than that the microstates are more equally probable. What is one “ignorant” of in such a case? What does one “know” better if the entropy is lower?

The “knowledge” about a system comes from the agreement of the entropy calculations with experimental data; better agreement simply means one has a better theoretical model of the system.

Attempting to equate entropy with “information” also misrepresents how the concept of entropy is actually used in making connections among thermodynamic state variables.

In the ID/creationist subculture, “information” somehow reaches into “ideal gases” of non-interacting atoms and molecules, pushes them around, and guides them into highly improbable arrangements.

Now it seems they think that, without “information,” “specified function” is impossible to achieve out of ideal gases of atoms and molecules. So if entropy is about a “lack of information,” then arrangements cannot be achieved. So apparently low entropy is about to become evidence for “intelligence” at work.

Watching the confusion is interesting up to a point; but the confusion is not new. They are already convinced that goddidit. They’re still thrashing around for a pseudoscience to make their dogma “superior” to all others.

Granville is back!

Other Types of Entropy

It’s like viewing the inner musings of a cargo cult in slow motion. 🙂

I honestly don’t think what I posted at UD in my 2-part series on Entropy and Design Complexity was anything against mainstream science; in fact, it was highly critical of creationism, not evolution.

What I said ought to apply to man-made designs irrespective of what one believes about evolution or creation. I tried to be faithful to what I learned in this thread. I provided links to Mike’s concept test, and upheld it as something I view as true.

Anyway, here is one opinion of the source of the “order/disorder” meme.

Boltzmann

Excellent reference, Sal. Have you posted it in Granville Sewell’s latest thread on UD?

I have not. I’m on thin ice, Patrick. I’m not getting a lot of love from either side of this debate right now (Creationists or Darwinists). What I had to say I’ve posted in my threads. I won’t heckle my friend out of respect for him.

I’ve said what I wanted to say publicly at UD. I posted those quotes on my threads.

I’m freer to say some things since I have no reputation worth defending. 🙂

Sal,

Re-reading my question, I see how it could be interpreted as snarky. That was not my intention.

As Mike Elzinga has pointed out a few times here, the misconception of entropy as disorder is altogether too common even in textbooks. It will take some time to correct that. Part of that process is to make note of the error wherever it occurs.

Sewell’s reputation is his concern, not yours. If he values it, he should be more careful when opining outside his area of expertise.

If you speak truth to (perceived) power, you might soon find that you do have a reputation worth defending.

In my mind, this raises the question, what is entropy really? Bear in mind I last studied physics at the age of 16. I have no difficulty (as far as I can say about my own abilities) grasping the idea of heat, temperature, mass, momentum, velocity but never understood entropy. How is the concept of entropy useful? Does it have practical applications other than engineering problems with steam?

There is always a problem when one rips comments such as Boltzmann’s out of a much larger historical context. Unless you know the detailed history of Boltzmann’s efforts and tribulations along with the issues dealt with by Maxwell, Gibbs, and others, such quote-mining simply leads to further misconceptions and misrepresentations.

Gibbs already knew, in his 1875–78 Heterogeneous Equilibrium, about the many apparent paradoxes with the entropy of mixing and the problems surrounding ideal gases. In addition, all this came well before quantum mechanics and even before everyone was convinced of the atomic nature of matter. Yet Gibbs already had a pretty good handle on it.

Nearly all physicists today are acutely aware of such issues and their history. That is why ideal gases are given only brief mention early in a beginning thermodynamics and statistical mechanics course and instead the concept of enumeration of energy states is emphasized early on. One also has to deal with the mathematical issues.

What you are experiencing over at UD are the usual copy/paste dumps of cobbled-together quote mines by people who have no idea about what any of it means but who are simply painting a picture that “supports” sectarian dogma.

And all those connections with “information” don’t buy anyone anything that one doesn’t already know by just knowing the entropy. Asserting that entropy is about “lack of information” is a non sequitur. Lack of information about what; you don’t know what microstate a system is in? How is that better than saying that the probabilities of states are distributed more uniformly? And have you not encountered Maxwell-Boltzmann, Fermi-Dirac, and Bose-Einstein distributions? What is it that one doesn’t know?

This is not how entropy is actually used in physics; but knowing how to enumerate energy states so that the calculations involving entropy don’t result in disagreements with experimental data gives clues to better and more detailed models of thermodynamic systems. That kind of effort is always involved as physicists extend these ideas to more complex systems.

You copy/pasted the Sackur-Tetrode equation over there at UD. Why did you do that? Do you have any idea of its history? Can you explain why it says that entropy goes to negative infinity as the temperature goes to zero? Do you have any idea of what that implies, or how to explain it?
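For readers who have not seen the Sackur-Tetrode equation evaluated, a minimal Python sketch follows. It assumes a monatomic ideal gas in the textbook form S = N k_B [ln(V / (N λ³)) + 5/2], with λ the thermal de Broglie wavelength; helium, the room-temperature conditions, and the 1 mK test point are illustrative choices, not values from the UD thread.

```python
import math

K_B = 1.380649e-23       # Boltzmann's constant, J/K
H = 6.62607015e-34       # Planck's constant, J s
N_A = 6.02214076e23      # Avogadro's number, 1/mol
AMU = 1.66053906660e-27  # atomic mass unit, kg

def sackur_tetrode_molar_entropy(mass_kg, temperature_k, volume_per_particle_m3):
    """Molar entropy (J/(mol K)) of a monatomic ideal gas, Sackur-Tetrode form."""
    thermal_wavelength = H / math.sqrt(2.0 * math.pi * mass_kg * K_B * temperature_k)
    return N_A * K_B * (math.log(volume_per_particle_m3 / thermal_wavelength ** 3) + 2.5)

# Illustrative (assumed) case: helium at 298.15 K and 1 bar.
helium_mass = 4.002602 * AMU
volume_per_atom = K_B * 298.15 / 1.0e5  # ideal gas law, V/N = k_B T / P

s_room = sackur_tetrode_molar_entropy(helium_mass, 298.15, volume_per_atom)
s_cold = sackur_tetrode_molar_entropy(helium_mass, 1.0e-3, volume_per_atom)  # same density, 1 mK

print(f"298.15 K: {s_room:.2f} J/(mol K)")
print(f"1 mK:     {s_cold:.2f} J/(mol K)")
```

At fixed density the logarithm grows like (3/2) ln T, so the expression heads toward negative infinity as T goes to zero. That is usually read as the classical counting being pushed outside its range of validity (the thermal wavelength is no longer small compared with the spacing between atoms), not as a physical prediction.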

You are in way over your head trying to “debate” your cohorts over there. They already have the picture about entropy that they want. Sewell is looking for the “generalized entropy” that will do the job of forbidding evolution and the origins of life. He will have to invent it; it serves the purpose of propping up ID and there is nothing you can do to change that. It’s sectarianism, not science.

Entropy is the logarithm of the number of energy microstates consistent with the macroscopic state of a thermodynamic system. The logarithm is used because of how it changes with the total internal energy of the system and because it makes the entropy extensive; that is, it scales with the number of particles and with the volume of the system.

Everything after that is figuring out how to enumerate those states. This is the really hard part. Counting may involve correlations that make the entropy of the parts of a system different from the entropy of the total. Those are technical details.

But knowing this allows one to link together the variables that describe the macroscopic state of the system (variables such as empirical temperature, volume, pressure, magnetization, number of particles, etc.).
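
As a minimal sketch of the counting described above, assume a hypothetical system of N independent two-state particles whose macrostate is fixed by how many particles are in the upper state. That system and the function names are illustrative assumptions, not something specified in this thread. The sketch shows that taking the logarithm turns the product of microstate counts for two independent subsystems into a sum, which is what makes the entropy extensive.

```python
import math

def microstates(n_particles: int, n_excited: int) -> int:
    """Number of ways to choose which particles are in the upper state."""
    return math.comb(n_particles, n_excited)

def entropy_over_k(n_particles: int, n_excited: int) -> float:
    """Dimensionless entropy S/k = ln(Omega) for the given macrostate."""
    return math.log(microstates(n_particles, n_excited))

# Two independent subsystems: the counts multiply, so the logarithms add.
omega_a = microstates(100, 30)
omega_b = microstates(200, 60)
s_combined = math.log(omega_a * omega_b)
s_sum = entropy_over_k(100, 30) + entropy_over_k(200, 60)
print(s_combined, s_sum)

# Doubling the system at a fixed excited fraction roughly doubles S/k.
for n in (1_000, 2_000, 4_000):
    print(n, entropy_over_k(n, n // 4))
```

The additivity holds here only because the two subsystems are assumed independent; with correlations between the parts, the counts no longer simply multiply.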

Remember, entropy is simply a name given to a mathematical expression involving the quantity of heat and the temperature. This is not unusual; names are given to reoccurring mathematical expressions all the time. Clausius picked the name for the reasons he gave in that excerpt I showed above. There is no emotional baggage connected with the name.

Knowing how it is calculated from the microscopic perspective of a system gives us a better handle on what is actually going on at that level. But this is no different from any other mathematical analysis of a physical system. It’s just another handle.

Thanks for the reply, Mike.

I have a vague recollection about the “heat death” of the universe, where temperature and particle densities become evenly spread out so that no useful work can be extracted. The Wikipedia article mentions entropy a lot!

Mike,

I didn’t copy and paste the Sackur-Tetrode equation, I actually mis-transcribed it (I used Planck’s constant, not the reduced Planck’s constant). I have to correct that at UD.

The reason I posted it was to highlight that, unlike the 747 aluminum example, where standard state entropy was dependent on the amount of aluminum (the variable N with fixed E and V), there are situations where entropy depends on V and E.

I posted it to also resolve a miscommunication about the phrase “spatial configuration”. We may have been working from different definitions.

If one means by ‘spatial configuration’ the ordering or disordering of the arrangements of particles, then entropy has no dependence on that. In agreement with the claim I cited:

However if one means by “spatial configuration” the Volume of a system, then entropy has dependence on volume.
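
To make the volume dependence concrete, here is a short sketch assuming an ideal monatomic gas at fixed energy and particle number, for which the Sackur-Tetrode expression gives an entropy change of N k ln(V2/V1) when only the volume changes. The function name and the one-mole doubling example are illustrative choices, not taken from the discussion.

```python
import math

K_B = 1.380649e-23      # Boltzmann constant, J/K
N_A = 6.02214076e23     # Avogadro constant, 1/mol

def ideal_gas_entropy_change(n_particles: float, v_initial: float, v_final: float) -> float:
    """Entropy change in J/K for an ideal gas when only the volume changes."""
    return n_particles * K_B * math.log(v_final / v_initial)

# One mole expanding to twice its volume at fixed energy.
delta_s = ideal_gas_entropy_change(N_A, 1.0, 2.0)
print(f"{delta_s:.3f} J/K")
```

Only the ratio of volumes enters, so the units of volume do not matter as long as both values use the same one.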

My understanding is that Sackur-Tetrode is an approximation which is valid only in certain domains.

My professor’s printed lecture notes and the treatment in Pathria and Beale are my primary familiarity with its history. I was confused by the derivations, and a couple of days ago I finally found a treatment of the topic that I could actually uncoil:

Classical Ideal Gas and Sakur-Tetrode

Anyway, it was providential that I posted the Sackur-Tetrode equation since KairosFocus was trying to argue from the example of an ideal monoatomic gas. You can see how I dealt with his argument:

Comment 432565

He responded in the way he usually does.

Anyway, I’m not posting at UD to persuade KairosFocus or some of the others. Blogs are for amusement, not real science. They are a way to extend one’s thought process. That’s all. I doubt many minds are ever changed in internet debates. For myself, however, I feel I’ve learned and reinforced some of my understanding of basic physics through these discussions.

Sal

So apparently you would argue that the concept of averaging is about ages? Or about lizard tails?

What is the volume of that two-state system in the concept test? What is its spatial configuration?

Why does the entropy go to negative infinity when the temperature goes to zero in the Sackur-Tetrode equation? What is this telling you?

Mike,

Is the Sackur-Tetrode equation wrong in every domain? I’ve accepted it as true. Do you have issue with my acceptance of it?

Volume is not in your example in counting microstates. And if we presume we are dealing with the solid state where the position of particles is fixed, volume seems to be pretty much a non-factor in the counting of microstates.

Sal

Given that Sackur-Tetrode deals with matter in the gaseous state, it seems rather moot to try to talk about the behavior of gases as they approach absolute zero, since many monoatomic species will likely no longer be in the gaseous state at those temperatures. So it would seem the presumption is that the appropriate domain of Sackur-Tetrode is the gaseous state.

I am aware of other “gases” than those we traditionally say are gases, but if we’re dealing with something like neon, the Sackur-Tetrode equation wouldn’t even be worth invoking at or near absolute zero since neon will have long since gone out of the gaseous phase below about 27 Kelvin.

Finally, the ideal gas is exactly that, it isn’t strictly speaking a real gas.
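
To put numbers on the domain question, here is a minimal sketch that evaluates the Sackur-Tetrode expression in the form S/(N k) = ln[(V/N)(2π m k T/h²)^(3/2)] + 5/2, written with Planck’s constant h. The choice of neon, the 1 bar and 298.15 K reference state, and the function name are assumptions made for illustration. It reproduces a sensible molar entropy at room temperature and then shows the formula turning negative when the temperature is lowered at fixed density.

```python
import math

K_B = 1.380649e-23        # Boltzmann constant, J/K
H = 6.62607015e-34        # Planck constant, J s
N_A = 6.02214076e23       # Avogadro constant, 1/mol
AMU = 1.66053906660e-27   # atomic mass unit, kg

def sackur_tetrode_per_particle(mass_kg, temperature_k, volume_per_particle_m3):
    """S/(N k) for an ideal monatomic gas from the Sackur-Tetrode equation."""
    quantum_concentration = (2 * math.pi * mass_kg * K_B * temperature_k / H**2) ** 1.5
    return math.log(volume_per_particle_m3 * quantum_concentration) + 2.5

neon_mass = 20.1797 * AMU
# Volume per atom for an ideal gas at 298.15 K and 1 bar (assumed reference state).
v_per_atom = K_B * 298.15 / 1.0e5

molar_entropy_298 = sackur_tetrode_per_particle(neon_mass, 298.15, v_per_atom) * K_B * N_A
print(f"298.15 K: {molar_entropy_298:.1f} J/(mol K)")

# Hold the density fixed and lower the temperature: the formula keeps falling.
for t in (100.0, 27.0, 1.0, 1e-2, 1e-4, 1e-6):
    print(t, sackur_tetrode_per_particle(neon_mass, t, v_per_atom))
```

The logarithm's argument is the volume per atom measured in units of the cube of the thermal wavelength. The expression is only trustworthy when that number is much larger than one, which is the regime in which the gas behaves classically.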

OK; a little bit of progress. In reality, matter interacts with matter. How does it do that?

How can inert gases condense into liquids and solids? What do you think is going on with those atoms?

After you have thought about that, let’s address the tornado-in-a-junkyard issue by doing an order-of-magnitude calculation.

Look up in your high school physics book how to calculate the gravitational potential energy and the electrical potential energy between, say, two protons. Calculate the ratio of electrical potential energy to gravitational potential energy.
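
A minimal sketch of that ratio, assuming standard textbook values for the constants. The function name is illustrative. Both potential energies fall off as 1/r, so the separation cancels and the ratio is a pure number.

```python
K_E = 8.9875517923e9          # Coulomb constant, N m^2 / C^2
G = 6.67430e-11               # gravitational constant, N m^2 / kg^2
E_CHARGE = 1.602176634e-19    # elementary charge, C
M_PROTON = 1.67262192369e-27  # proton mass, kg

def potential_energy_ratio() -> float:
    """Electrical over gravitational potential energy for two protons.

    Both energies scale as 1/r, so the separation drops out of the ratio.
    """
    return (K_E * E_CHARGE**2) / (G * M_PROTON**2)

ratio = potential_energy_ratio()
print(f"{ratio:.2e}")
```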

See if you can scale up an attractive potential energy of, say, 1 eV at a separation of 1 nanometer to what it would be for a kilogram mass with the same ratio of electrical potential energy to gravitational potential energy in a similar attractive well at a separation of, say, 1 meter.

What would constitute an attractive potential well for something scaled up like this? How can such attractive wells be made, and what is required for particles to bind into stable structures, i.e., to fall into potential wells and stay there?

What do you think a tornado would do to masses bound together by this kind of energy? What kind of tornado would you need? What would happen to the “scrap” after that kind of tornado left the area?

Why do you suppose entropy deals with energy states and not necessarily “configuration” or volume?

Clarification; You need to calculate that separation; it should be roughly on the order of a nanometer.

Here are some facts.

Chemical bonds are on the order of 1 eV (think of a dry cell at 1.5 volts). Binding energies of solids such as iron are on the order of 0.1 eV at room temperature. Atomic separations are on the order of a nanometer. You can use 0.1 eV if you like. We are doing only order of magnitude anyway.
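
One possible reading of the exercise, sketched as an order-of-magnitude calculation: find the separation at which two unit charges have 1 eV of electrical potential energy, then ask what energy two 1 kg masses 1 m apart would have if their interaction were stronger than gravity by the same factor as for two protons. The variable names and the conversion to megatons of TNT (4.184e15 J per megaton) are illustrative additions, and this is only one interpretation of the scaling being asked for.

```python
K_E = 8.9875517923e9          # Coulomb constant, N m^2 / C^2
G = 6.67430e-11               # gravitational constant, N m^2 / kg^2
E_CHARGE = 1.602176634e-19    # elementary charge, C
M_PROTON = 1.67262192369e-27  # proton mass, kg
EV = 1.602176634e-19          # one electron volt, J
MEGATON_TNT = 4.184e15        # one megaton of TNT, J

# Separation at which two unit charges have 1 eV of electrical potential energy.
separation_m = K_E * E_CHARGE**2 / (1.0 * EV)

# Ratio of electrical to gravitational potential energy for two protons.
ratio = (K_E * E_CHARGE**2) / (G * M_PROTON**2)

# Gravitational potential energy of two 1 kg masses 1 m apart, scaled by that ratio.
gravitational_j = G * 1.0 * 1.0 / 1.0
scaled_energy_j = ratio * gravitational_j
megatons = scaled_energy_j / MEGATON_TNT

print(f"separation: {separation_m:.2e} m")
print(f"scaled binding energy: {scaled_energy_j:.2e} J (~{megatons:.1e} megatons of TNT)")
```

Using 0.1 eV instead of 1 eV changes the result by a factor of ten, which does not alter the order-of-magnitude conclusion.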

I have in the past mused over at UD about how the Design enthusiasts (almost exclusively non-chemists) imagine the ‘design’ process actually working. It’s one thing to have a blueprint, quite another to implement it. When people’s sphere of experience involves stitching together lines of code, or designing jets, they are dealing with static, macro-world objects that can be manoeuvred into place bit by bit, go grab a coffee and a donut, take your time till the final bit is in place … it does not have to work till you flick the switch.

But with molecular systems, it is a completely different world. Constructing various multi-part ‘minimum blueprint for a cell’ scenarios requires that the parts be brought into position but somehow persuaded not to react until you want them. Which appears to be an impossible task that simplistic ‘intelligent informatic arrangement’ is not up to overcoming. There are all these free energy gradients all over the place. It is akin to constructing a jumbo in mid-flight, from hyper-magnetised parts in electrical contact with the wiring, some of which explode on contact with oxygen or water, aviation fuel spilling everywhere …

A Designer could do it bit by bit, of course. In which case the asserted minimal system is not a minimal system, either way.

Indeed, the issues of actually dealing with matter directly would dispel all the objections and red herrings of the ID/creationists.

Just the daily experience of watching water adhere to glass and bead up on a waxed surface would alert most curious individuals to wonder why. And it is not hard to observe that it is temperature dependent as well. The positive or negative meniscus of a liquid in contact with a solid has been known for centuries. Most of the techniques related to producing a good weld or solder joint are concerned with the processes of wetting.

Anybody who has taken a basic chemistry course becomes aware almost immediately of all the lab techniques related to pouring things, boiling things, stirring and mixing things, titrating things, and producing chemical reactions with a given stoichiometry. Chemists have known, for at least a couple of centuries now, about compounds made up of integer ratios of elements.

In the labs of even basic physics courses one has to deal with friction in order to do most of the basic mechanical experiments and demonstrations.

One of the common features of ID/creationist assertions is that they can be shown to be false with just a knowledge of basic high school physics, chemistry, and biology; and in many cases ID/creationist assertions are simply counter to facts children learn in 8th grade science.

Yet it is a common feature of ID/creationist arguments to “up the ante” by attempting to “refute” people with mathematical calculations done with formulas pulled out of the air without understanding. If one does a simple high school level calculation that refutes an ID/creationist claim, the ID/creationists will pull up an equation from advanced physics and plug-and-chug a “refutation” without having the slightest idea what the formula means or of the contexts in which it is used.

They get it all wrong, but they get it wrong with an “authoritative” flourish. This is a “debating” tactic that has nothing to do with understanding the underlying science; and it has been honed and taught by Henry Morris and Duane Gish specifically for debate venues where winning is the goal and knowledge and facts about the real universe are irrelevant.

One of the most common traits I have observed in ID/creationist debaters over the last 40+ years is that not one of them appears to be capable of observing and wondering about the common phenomena that people have observed for centuries; phenomena that have prompted people to muse, “I wonder why that happens; I think I will try to find out.”

The ID/creationist world is the world of fawning over and quoting authority; whose mommy or daddy or authority figure carries the most weight in making assertions about what is or is not true. Those “authority figures” will be quote-mined endlessly for “refutations.” This is why we see so much copy/paste in “debates” with ID/creationists on the internet. It is the world of “exegesis,” “hermeneutics,” “etymology,” and mindless word gaming. One cannot make references to everyday phenomena because they don’t pay attention to everyday phenomena; they see only “authority.” It’s why no scientist should ever debate them, but might, however, benefit from studying their socio/political tactics instead.

FWIW,

I discussed single particle entropy here:

As an addendum, it is harder to establish dQ/T for single particle entropy unless one appeals to AVERAGE behavior over many trials. One thought experiment is too small a sample size; one needs many hypothetical thought experiments to get the law of large numbers to work for the Clausius definition.

Single particle entropy works nicely, however, for the Boltzmann definition.

You can also plug single particles into the Sackur-Tetrode quantum mechanical version of the Boltzmann equation for idealized monoatomic gases.