The pattern that signifies Intelligence?

Winston Ewert has a post at Evolution News & Views that directly responds to my post here, A CSI Challenge, which is nice. Dialogue is good. Dialogue in a forum where we can both post would be even better. He is extremely welcome to join us here 🙂

In my Challenge, I presented a grey-scale photograph of an unknown item, and invited people to calculate its CSI. My intent, contrary to Ewert’s assumption, was not:

…to force an admission that such a calculation is impossible or to produce a false positive, detecting design where none was present.

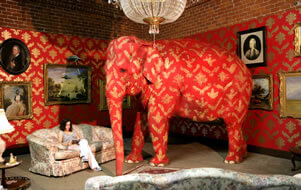

but to reveal the problems inherent in such a calculation, and, in particular, the problem of computing the probability distribution of the data under the null hypothesis: The eleP(T|H)ant in the room

In his 2005 paper, Specification: the Pattern that Signifies Intelligence, Dembski makes a bold claim: that there is an identifiable property of certain patterns that “Signifies Intelligence”. Dembski spends the major part of his paper on making three points:

- He takes us on a rather painful walk through Fisherian null hypothesis testing, which generates the probability (the “p value”) that we would observe our data were the null to be true, and allows us to “reject the null” if our data fall in the tails of the probability distribution where the p value falls below our “alpha” criterion: the “rejection region”.

- He argues that if we set the “alpha” criterion at which we reject a Fisherian null as 1/[the number of possible events in the history of the universe], no way jose will we ever see the observed pattern under that null. tbh I’d be perfectly happy to reject a non-Design null at a much more lenient alpha than that.

- He defines a pattern as being Specified if it is both

- One of a very large number of patterns that could be made from the same elements (Shannon Complexity)

- One of a very small subset of those patterns that can be described as simply as, or more simply than, the pattern in question (Kolmogorov compressibility)

He then argues that if a pattern is one of a very small specifiable subset of patterns that could be produced under some non-Design null hypothesis, and that subset is less than 1/[the number of possible events in the history of the universe] of the whole set, it has CSI and we must conclude Design.

The problem with CSI, however, as I pointed out in a previous post, Belling the Cat, is not computing the Specification (well, it’s a bit of a problem, but not insuperable), nor deciding on an alpha criterion (as I said, I’d be perfectly happy with something much more lenient; after all, we frequently accept an alpha of .05 in my field, making appropriate corrections for multiple comparisons, and even physicists only require 5 sigma). The problem is computing the probability of observing your data under the null hypothesis of non-Design.
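For a sense of scale, here is a minimal sketch that puts the three alpha criteria side by side for the one kind of null that is easy to compute, a fair coin. The figure of 1 in 10^120 for the universal bound is my assumption about what “the number of possible events in the history of the universe” comes to; the function names are made up for illustration.

```python
from fractions import Fraction
from math import comb, erfc, sqrt

# Alpha criteria. The universal bound of 1 in 10**120 is an ASSUMED figure for
# "the number of possible events in the history of the universe".
ALPHA_CONVENTIONAL = Fraction(5, 100)
ALPHA_UNIVERSAL = Fraction(1, 10**120)


def one_sided_p_from_sigma(z: float) -> float:
    """Upper-tail probability of a standard normal beyond z standard deviations."""
    return 0.5 * erfc(z / sqrt(2))


def fair_coin_tail(n: int, k: int) -> Fraction:
    """Exact P(at least k heads in n tosses) under the null that the coin is fair."""
    return Fraction(sum(comb(n, i) for i in range(k, n + 1)), 2**n)


alpha_five_sigma = one_sided_p_from_sigma(5.0)

p_60_of_100 = fair_coin_tail(100, 60)    # mildly surprising under the null
p_500_of_500 = fair_coin_tail(500, 500)  # exactly 2**-500

for label, p in [("60/100 heads", p_60_of_100), ("500/500 heads", p_500_of_500)]:
    print(
        label,
        "| reject at .05:", p < ALPHA_CONVENTIONAL,
        "| reject at 5 sigma:", p < alpha_five_sigma,
        "| reject at universal bound:", p < ALPHA_UNIVERSAL,
    )
```

All of this is trivial only because the null is a fair coin, whose tail probabilities follow directly from the binomial distribution. Nothing in the sketch says how to get the equivalent number for a null that includes evolutionary mechanisms.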

Ewert points out to me that Dembski has always said that the first step in the three-step process of design detection is:

- Identify the relevant chance hypotheses.

- Reject all the chance hypotheses.

- Infer design.

and indeed he has. Back in the old EF days, the first steps were to rule out “Necessity”, which can often produce patterns that are both complex and compressible (indeed, I’d claim my Glacier is one), as well as “Chance”, and to conclude, if these explanations were to be rejected, Design. And I fully understand why, for the sake of algebraic elegance, Dembski has decided to roll Chance and Necessity up together in a single null.

But the first task is not merely to identify the “relevant [null] chance hypothesis” but to compute the expected probability distribution of our data under that null, which we need in order to compute the probability of observing our data under that null, neatly written as P(T|H), and which I have referred to as the eleP(T|H)ant in the room (and, being rather proud of my pun, have repeated it in this post title). P(T|H) is the Probability that we would observe the Target (i.e. a member of the Specified subset of patterns) given the null Hypothesis.

And not only does Dembski not tell us how to compute that probability distribution, describing H in a throwaway line as “the relevant chance hypothesis that takes into account Darwinian and other material mechanisms”, but by characterising it as a “chance” hypothesis, he implicitly suggests that the probability distribution under a null hypothesis that posits “Darwinian and other material mechanisms” is not much harder to compute than that in his toy example, i.e. the probability distribution under the null that a coin will land heads and tails with equal probability, in which the null can be readily computed using the binomial theorem.

Which of course it is not. And what is worse is that using the Fisherian hypothesis testing system that Dembski commends to us, our conclusion, if we reject the null, is merely that we have, well, rejected the null. If our null is “this coin is fair”, then the conclusion we can draw from rejecting this null is easy: “this coin is not fair”. It doesn’t tell us why it is not fair – whether by Design, Skulduggery, or indeed Chance (perhaps the coin was inadvertently stamped with a head on both sides). We might have derived our hypothesis from a theory (“this coin tosser is a shyster, I bet he has weighted his coin”), in which case rejecting the null (usually written H0), and accepting our “study hypothesis” (H1) allows us to conclude that our theory is supported. But it does not allow us to reject any hypothesis that was not modelled as the null.

Ewert accepts this; indeed he takes me to task for misunderstanding Dembski on the matter:

We have seen that Liddle has confused the concept of specified complexity with the entire design inference. Specified complexity as a quantity gives us reason to reject individual chance hypotheses. It requires careful investigation to identify the relevant chance hypotheses. This has been the consistent approach presented in Dembski’s work, despite attempts to claim otherwise, or criticisms that Dembski has contradicted himself.

Well, no, I haven’t. I’m not as green as I’m cabbage-looking. I have not “confused the concept of Specified Complexity with the entire design inference”. Nor, even, am I confused as to whether Dembski is confused. I think he is very much aware of the eleP(T|H)ant in the room, although I’m not so sure that all his followers are similarly unconfused – I’ve seen many attempts to assert that CSI is possessed by some biological phenomenon or other, with calculations to back up the assertion, and yet in those calculations no attempt has been made to compute P(T|H) under any hypothesis other than random draw. In fact, I think CSI, or FCSI, or FCO are perfectly useful quantities when computed under the null of random draw, as both Durston et al (2007) and Hazen et al (2007) do. They just don’t allow us to reject any null other than random draw. And this is very rarely a “relevant” null.

It doesn’t matter how “consistent” Dembski has been in his assertion that Design detection requires “careful investigation to identify the relevant chance hypothesis”. Unless Dembski can actually compute the probability distribution under the null that some relevant chance hypothesis is true, he has no way to reject it.
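To see how much hangs on the choice of H, here is a toy sketch (not a model of any biological system) that takes the same target T under two different nulls. Everything in it is an assumption for illustration: a 40-bit “genome”, a target of all ones, and a mutate-and-select process whose fitness is simply the count of ones. Because that fitness function has the target built into it, the sketch says nothing about where fitness landscapes come from; the only point is that P(T|H) is a property of H as much as of T.

```python
import random
from fractions import Fraction

N_BITS = 40  # hypothetical genome length; the target T is the all-ones string


def p_target_random_draw(n_bits: int) -> Fraction:
    """P(T|H) when H is a single uniform random draw of an n-bit string."""
    return Fraction(1, 2**n_bits)


def reaches_target(rng: random.Random, n_bits: int, max_steps: int) -> bool:
    """One run of a toy mutate-and-select process from a random starting string.

    Each step proposes flipping one randomly chosen bit and keeps the change
    only if it does not lower fitness (the count of ones).
    """
    genome = [rng.randint(0, 1) for _ in range(n_bits)]
    fitness = sum(genome)
    for _ in range(max_steps):
        if fitness == n_bits:
            return True
        i = rng.randrange(n_bits)
        if genome[i] == 0:  # a 0 -> 1 flip raises fitness, so it is kept
            genome[i] = 1
            fitness += 1
        # a 1 -> 0 flip would lower fitness, so it is rejected
    return fitness == n_bits


def estimate_p_target_selection(runs: int = 200, max_steps: int = 2000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(T|H) when H is the mutate-and-select process."""
    rng = random.Random(seed)
    hits = sum(reaches_target(rng, N_BITS, max_steps) for _ in range(runs))
    return hits / runs


p_random = p_target_random_draw(N_BITS)
p_selection = estimate_p_target_selection()
print("P(T | random draw)       =", float(p_random))
print("P(T | mutate-and-select) ~", p_selection)
```

Rejecting the first null tells us nothing about the second, and the second was only computable here because I wrote the process myself.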

However, let’s suppose that he does manage to compute the probability distribution under some fairly comprehensive null that includes “Darwinian and other material mechanisms”. Under Fisherian hypothesis testing, still, all he is entitled to do is to reject that null, not reject all non-Design hypotheses, including those not included in the rejected “relevant null hypothesis”.

Ewert defends Dembski on this:

But what if the actual cause of an event, proceeding from chance or necessity, is not among the identified hypotheses? What if some natural process exists that renders the event much more probable than would be expected? This will lead to a false positive. We will infer design where none was actually present. In the essay “Specification,” Dembski discusses this issue:

Thus, it is always a possibility that [the set of relevant hypotheses] omits some crucial chance hypothesis that might be operating in the world and account for the event E in question.

The method depends on our being confident that we have identified and eliminated all relevant candidate chance hypotheses. Dembski writes at length in defense of this approach.

But how does Dembski defend this approach? He writes

At this point, critics of specified complexity raise two objections. First, they contend that because we can never know all the chance hypotheses responsible for a given outcome, to infer design because specified complexity eliminates a limited set of chance hypotheses constitutes an argument from ignorance.

In eliminating chance and inferring design, specified complexity is not party to an argument from ignorance. Rather, it is underwriting an eliminative induction. Eliminative inductions argue for the truth of a proposition by actively refuting its competitors (and not, as in arguments from ignorance, by noting that the proposition has yet to be refuted). Provided that the proposition along with its competitors form a mutually exclusive and exhaustive class, eliminating all the competitors entails that the proposition is true.

But eliminative inductions can be convincing without knocking down every conceivable alternative, a point John Earman has argued effectively. Earman has shown that eliminative inductions are not just widely employed in the sciences but also indispensable to science.

Even if we can never get down to a single hypothesis, progress occurs if we succeed in eliminating finite or infinite chunks of the possibility space. This presupposes of course that we have some kind of measure, or at least topology, on the space of possibilities.

Earman gives as an example a kind of “hypothesis filter” whereby hypotheses are rejected at each of a series of stages, none of which non-specific “Design” would even pass, as each requires candidate theories to make specific predictions. Not only that, but Earman’s approach is in part a Bayesian one, an approach Dembski specifically rejects for design detection. Just because Fisherian hypothesis testing is essentially eliminative (serial rejection of null hypotheses) does not mean that you can use it for eliminative induction when the competing hypotheses do not form an exhaustive class, and Dembski offers no way of doing so.

In other words, not only does Dembski offer no way of computing the probability distribution under P(T|H) unless H is extremely limited, thereby precluding any Design inference anyway, he also offers no way of computing the topology of the space of non-Design Hypotheses, and thus no way of systematically eliminating them other than one-by-one, never knowing what proportion of viable hypotheses have been eliminated at any stage. In other words, his is, indeed, an argument from ignorance. Earman’s essay simply does not help him.

Dembski comments:

Suffice it to say, by refusing the eliminative inductions by which specified complexity eliminates chance, one artificially props up chance explanations and (let the irony not be missed) eliminates design explanations whose designing intelligences don’t match up conveniently with a materialistic worldview.

The irony misser here, of course, is Dembski. Nobody qua scientist has “eliminated” a “design explanation”. The problem for Dembski is not that those with a “materialistic worldview” have eliminated Design, but that the only eliminative inductionist approach he cites (Earman’s) would eliminate his Design Hypothesis out of the gate. That’s not because there aren’t perfectly good ways of inferring Design (there are), but because by refusing to make any specific Design-based predictions, Dembski’s hypothesis remains (let the irony not be missed) unfalsifiable.

But until he deals with the eleP(T|H)ant, that’s a secondary problem.

Edited for typos and clarity

I would describe the calculation as the probability that a fitness as great as this, or greater could arise as a result of the processes of evolution — including natural selection — which are encompassed in the “chance” hypothesis H. The T is the set of all outcomes that have as high a fitness or higher than the observed fitness.

It has nothing much to do with “surprise” except insofar as a very high fitness is surprising. Dembski has often identified the scale as one of compressibility, but this is not fundamental to his argument, as witness the identification of his quantities with the quantities computed by Hazen and Durston, which are not expressed in terms of compressibility. There is also the issue that if we take compressibility to be fundamental, we find that an organism whose structure is so simple that it could not survive has higher compressibility than one which actually can survive.

And of course, even if we can compute the probability P(T|H), and it is so small that we can conclude in favor of design, there is still at that point nothing to be gained by further expressing this probability as showing that CSI is present. The fundamental argument is made by the lowness of this tail probability, and the CSI part is redundant.
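The redundancy can be made concrete with a small sketch. It assumes the form of the CSI measure as I recall it from Dembski’s 2005 paper, χ = −log2[10^120 · φ_S(T) · P(T|H)], with design inferred when χ > 1; neither the formula nor the 10^120 figure is quoted in this thread, so treat both as assumptions. Here `phi` stands for the count of patterns at least as simple to describe as T, and the function names are invented for illustration.

```python
from math import log2

# ASSUMED bound on the number of events in the history of the universe.
REPLICATIONAL_RESOURCES = 10**120


def csi_bits(p_t_given_h: float, phi: float) -> float:
    """chi = -log2(10**120 * phi * P(T|H)), using the assumed form of the measure."""
    return -(log2(REPLICATIONAL_RESOURCES) + log2(phi) + log2(p_t_given_h))


def design_by_csi(p_t_given_h: float, phi: float) -> bool:
    """Verdict reached by computing CSI and asking whether it exceeds 1 bit."""
    return csi_bits(p_t_given_h, phi) > 1


def design_by_tail(p_t_given_h: float, phi: float) -> bool:
    """Verdict reached by comparing the tail probability to a fixed threshold."""
    return p_t_given_h < 1 / (2 * REPLICATIONAL_RESOURCES * phi)


# Hypothetical (P(T|H), phi) pairs, chosen well away from the threshold.
cases = [(1e-150, 1e5), (1e-100, 1e5), (0.03, 1.0)]
for p, phi in cases:
    print(p, phi, round(csi_bits(p, phi), 1), design_by_csi(p, phi), design_by_tail(p, phi))
```

The two verdicts agree in every case because χ is just a monotone transformation of P(T|H). All the work lies in obtaining P(T|H) in the first place.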

In short, Dembski is hosed.

1) His concept of specification is too narrow, but even if it weren’t,

2) P(T|H) can’t be computed for realistic biological cases, but even if it could,

3) you have to answer the relevant question — “Could this have evolved?” — without the use of CSI, when you calculate P(T|H),

4) so the concept of CSI adds nothing, and if you invoke it the entire argument becomes circular: X couldn’t have evolved, so it must have CSI; X has CSI, therefore it couldn’t have evolved.

Dembski’s “solution” to these problems:

A. Retreat. Renounce the explanatory filter but affirm the value of CSI, as if these were separable concepts.

B. Give up arguing that evolution cannot produce adaptive complexity, without actually admitting that it can.

C. Argue instead that any adaptive complexity produced by evolution was already implicit in the environment, and that a Designer must have placed it there.

Not much of an improvement, but at least he’s fighting a different set of battles.

keiths,

One of the themes of ID/creationism over the years has been to get everyone else – especially the general public – to use ID/creationist concepts and vocabulary. ID/creationist debaters always pulled the debate onto their territory and flooded the discussion with their words and their concepts.

Their entire political push is to get that same set of concepts and vocabulary into the public schools; and get that stuff into the discussions before students have had a chance to learn the real science concepts and vocabulary.

One cannot help noticing just how much time is spent discussing Dembski’s and other ID/creationist papers even though there is nothing of any value in them. In the ID/creationist world, that is considered a success because it allows them to claim that there is an intense scientific discussion going on in science that is being kept from beginning students.

Back in the 1970s, 80s, and 90s, when I was giving talks to the public, I made it a point to never use ID/creationist vocabulary. I discussed the relevant science and the ID/creationist distortions explicitly. My church audiences recognized the political ploys ID/creationists were using; and they did not approve.

If any of this ever came up with my students or my coworkers, I used the misconceptions as a foil for teaching the real concepts. I never took any ID/creationist concepts seriously; and I made it a point early on that I would never debate one of them.

ID/creationists have wanted their concepts and vocabulary to have gravitas and be treated with respect and deference. Exposing them for what they really are highlights the political nature of ID/creationism.

I suspect it is pretty obvious that I have little respect for ID/creationists; but they started the fight.

That’s been a bit exasperating for decades. Scientists started out regarding creation “science” as so obviously silly that they entered debates confident that they’d have no difficulty crushing the creationist with facts, observations, evidence, theories, hypotheses, and the scientific method.

And that arsenal was hopelessly outgunned by such devastating weapons as defining the concepts, framing the discussion, establishing the language and vocabulary, and from that pad launching into a rapid fire assault of misquotes, lies, half-truths, misdirection, equivocation, redirection, and more lies. Even the “debate” format was carefully tilted toward such techniques, rendering careful explanation of even a single point boringly incomprehensible to an audience selected for ignorance of the necessary background.

I think most scientists decided the fight was someone else’s, and chose to ignore it. But even here at TSZ, I notice that what people are saying, over and over ad nauseam, is that ID conclusions cannot be drawn from their evidence. As if that were ever their intent. We see nearly every penny creationists can raise going into PR events, political campaigns, and propaganda. And we keep saying “hey, wait, that’s bad science!” — as though that matters, while the elected McLeroys strive to control the contents of national high school science texts.

And while half the US population, convinced they must choose between evolution and God, have not “converted” to “evolutionism.” Of course, they don’t know what evolution IS, since in much of the country it’s not covered in school. Why anger parents and make administration and teaching harder?

Almost related: http://opinion.financialpost.com/2013/06/10/junk-science-week-unsignificant-statistics/

There are a number of problems with that analysis in the case of the Higgs boson. This Higgs experiment is taking place at two different detectors, the ATLAS and the CMS; each detector using a completely different decay mode for the Higgs. This has been done deliberately in order to account for systematic issues.

Both detectors are reporting results at the same energy and at nearly the same 5 or 6 sigma significance.

Secondly, these results are accumulating as they are being watched. The decision to hold off reporting them until 5 or 6 sigma was because the experimentalists wanted both the time and the data in order to make many crosschecks of their methods of analysis and to be sure that everything is working at the level of sensitivity they need. They are extremely aware of the potential for being wrong, so those other crosschecks are important even though they have not been mentioned explicitly in press reports.

Thirdly, the particle is only tentatively being called the Higgs; physicists are quite aware of the possibility it might be something else. However, the result is consistent with the probabilities of the various decay modes for the Higgs; and two decay modes are being monitored by those two detectors.

Fourthly, the particle needs further study in order to determine its properties such as its spin. That will be done when the LHC is brought back on line for the next phase of operating at higher energy. Many checks of the machine have to take place before ramping up its energy. Further checks of the detectors will also take place in this down time. And there will be many crosschecks of the data in upcoming runs because of the need to determine the particle’s properties.

Fifthly, there are other possible Higgs for which a search will be made; this isn’t the end of the search. Other theories are also being tested at the LHC.

This is not only a difficult experiment, it is a crucial experiment; and it is being done with money paid out by many governments. These experimentalists have learned from the politics of big science that it is deadly to be wrong when that much money and importance ride on the results.

Physicists are light years beyond the sloppy use of statistics Ziliak sees in some areas of testing. They are not as naive as this Stephen Ziliak seems to imply.

The reason why it is important to explain clearly why Dembski’s argument doesn’t work is that there are lots of pro-ID folks who repeatedly, loudly, proudly declare that see, there is this thing called CSI. That we can decide whether CSI is present in a life form without having to know how the life form originated. That CSI can only arise by Design.

They are wrong about that, but the argument is phrased as a technical scientific argument, and so needs to be answered clearly, with good science. And this has to be done repeatedly, since the pro-ID people do not seem often to notice counterarguments.

Dembski may have effectively retreated from much of his argument, but the pro-ID crowd has not noticed that, either.

The other often-repeated claim is that “evolution can’t add information to the genome”.

One thing my CSI demonstration showed is that it can. Unless information produced by evolution doesn’t count 🙂

Dembski, Marks and co. argue that the information is not being created even if it is being put into the genome by natural selection. They argue that the differences in fitness between genotypes contain the information to start with.

However, you used the formulation “add information to the genome”, which allows for their interpretation. The important issue is not whether information was already lying around out there, but that natural selection can cause it to be in the genome when it wasn’t before.

Your demonstration, and ones I did too (here, in the section on Generating Specified Information, and here) show the information coming to be in the genome. All the objections to those demonstrations were simple attempts to divert the discussion to something else.

OK, point taken.

I still haven’t really got my head around the Search for a Search argument.

The WEASEL example is obviously irrelevant as the optimal solution is encoded in the fitness function. It’s a special case, but Dembski seems to generalise from it to all evolutionary processes.

Part of the problem I think is that the Search + Target metaphor is a really misleading one.

Joe Felsenstein

What I was groping to articulate is the fact that any retrospective calculation would need to include further history – the traits that impress are more than just single runs of mutation-to-fixation. Which renders the calculation nigh impossible. From a given start point, a given set of genotypes is accessible in a given time, and T of those will have equal or greater fitness than a given result. But the serial operation of the ‘chance’ mechanism already eliminated a large part of the total space, by going somewhere else first – most likely somewhere with increased fitness.

Certainly, if you look at it purely in terms of the fitnesses of the various possible genotypes in a single trait of interest, it is very hard even in that narrow view to exclude evolution as a cause for a present high value, given that higher fitness is the very thing that is rewarded with increased representation in the gene pool – NS is a fitness-maximising engine, and probably better at it than any Designer. It often strikes me as a peculiarity of ID that an agent must optimise for the very thing that the environment will do for free.

The refuge must lie in islands – the processes of evolution are in fact so effective that high fitness becomes a trap.

Yes indeed! Thanks!